Nvidia's Instant-NGP creates NeRFs in a few seconds. A new release makes it easy to use, even without coding knowledge.

Neural Radiance Fields (NeRFs) can learn a 3D scene from dozens of photos and then render it photo-realistically. NeRFs are considered a strong candidate to become the next key visualization technology and are being developed further by AI researchers and companies such as Google and Nvidia. Google, for example, uses NeRFs for Immersive View.
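
How a NeRF turns what it has learned into pixels is not covered in this article. As a rough illustration: in the original NeRF formulation, the network predicts a density and a color for points sampled along each camera ray, and the pixel color is an alpha-composited sum of those samples. The minimal Python sketch below shows only this compositing step with made-up sample values; the trained network, the ray sampling and Instant-NGP's own optimizations are not modeled, and the function name is hypothetical.

```python
import math

def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray, following the original NeRF formulation.

    densities: volume density sigma_i at each sample (>= 0)
    colors:    RGB tuple c_i at each sample
    deltas:    distance delta_i from sample i to the next sample
    """
    transmittance = 1.0  # T_i: share of light that reaches sample i unblocked
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # light left after this segment
    return tuple(pixel)

# Two empty samples followed by a dense red "surface":
print(composite_ray([0.0, 0.0, 50.0],
                    [(0.0, 0.0, 1.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
                    [0.1, 0.1, 0.1]))
```

Empty space (density 0) contributes nothing, so the pixel ends up almost entirely red, which is why a NeRF can represent solid surfaces with nothing more than densities and colors.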

The technology has made its way out of the research labs to photographers and other artists who are eager to experiment. But getting started is still full of hurdles, often requiring coding knowledge and plenty of computing power.

Nvidia's Instant-NGP and open-source Nerfstudio make access easier

Tools such as the open-source Nerfstudio toolkit attempt to simplify the process of NeRF creation, offering tutorials, a Python library, and a web interface. Among other things, Nerfstudio relies on an implementation of Instant-NGP (Instant Neural Graphics Primitives), a framework from Nvidia researchers that can learn NeRFs in a few seconds on a single GPU.

Now the Instant-NGP team has released a new version that requires only a single line of code for custom NeRFs; everything else is handled by a simple executable that launches a graphical interface.

Even better: if you just want to try out the included example, or are willing to download another small file, you don't need any code at all. Instant-NGP runs on all Nvidia graphics cards from the GTX 1000 series onwards and, on Windows, requires CUDA 11.5 or higher.

Instant-NGP: First steps with the fox NeRF

To train the included fox example with Instant-NGP, all you need to do is download the Windows binary release of Instant-NGP that matches your Nvidia graphics card. Start the interface via instant-ngp.exe in the downloaded folder. Then drag the fox folder from data/nerf/ into the Instant-NGP window and start the training. After a few seconds, a fox head should be visible on a wall.

From here, you can use the settings to create meshes, for example, or use the camera interface to plan a camera path and render a video, which is saved in the Instant-NGP folder. Jonathan Stephens from EveryPoint demonstrates how this works in a short video tutorial on YouTube.

How to turn your own video into a NeRF

For a custom NeRF, Instant-NGP supports two approaches: COLMAP, to create a dataset from photos or a video you've taken, or Record3D, to create a dataset with an iPhone 12 Pro or later (based on ARKit).

Both approaches turn your footage into a set of training images and determine the camera position for each image, since NeRF training needs to know where every picture was taken from.
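
To make "camera position for each image" more concrete: Instant-NGP reads a dataset from a transforms.json file (the fox example in data/nerf/fox ships with one). As far as we understand the format, each entry in its `frames` list holds a `file_path` and a 4×4 camera-to-world `transform_matrix`, and the camera's position is the translation part of that matrix, i.e. the first three rows of the last column. The sketch below assumes exactly that layout, ignores all other keys, and uses a tiny hand-written example with hypothetical file names instead of a real dataset.

```python
import json

def camera_positions(transforms):
    """Return {file_path: (x, y, z)} from a parsed transforms.json-style dict.

    Assumes each frame's transform_matrix is a 4x4 camera-to-world matrix,
    so the camera position is its translation column (rows 0-2, column 3).
    """
    positions = {}
    for frame in transforms["frames"]:
        m = frame["transform_matrix"]
        positions[frame["file_path"]] = (m[0][3], m[1][3], m[2][3])
    return positions

# Hypothetical two-frame dataset; a real one could be loaded with
# json.load() on the transforms.json file in a dataset folder.
example = json.loads("""
{
  "frames": [
    {"file_path": "images/0001.jpg",
     "transform_matrix": [[1, 0, 0, 0.5], [0, 1, 0, 0.0], [0, 0, 1, 2.0], [0, 0, 0, 1]]},
    {"file_path": "images/0002.jpg",
     "transform_matrix": [[1, 0, 0, -0.5], [0, 1, 0, 0.1], [0, 0, 1, 2.0], [0, 0, 0, 1]]}
  ]
}
""")

for path, pos in camera_positions(example).items():
    print(path, pos)
```

Plotting these positions for a real dataset is a quick way to check whether COLMAP or Record3D placed the cameras where you actually walked.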

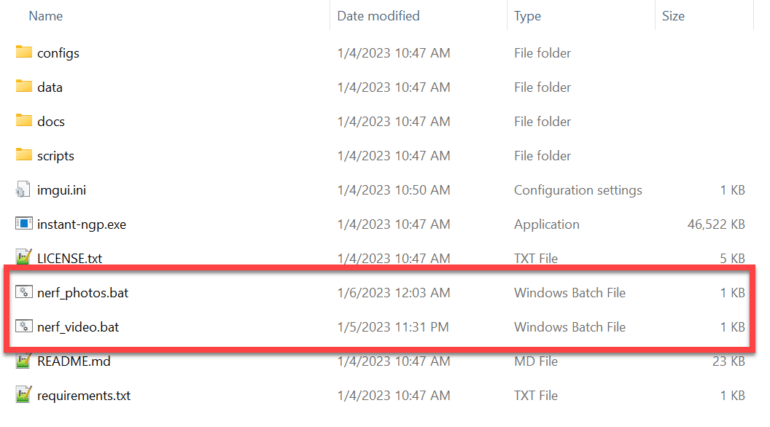

For those without coding knowledge, Stephens offers two simple .bat files for download in his InstantNGP batch repository on GitHub: one for photos and one for videos. Simply drop the files into the Instant-NGP folder and drag a video or a folder of images onto the matching .bat file.

For a video, you still have to set how many frames per second of footage will be extracted. Choose the value so that the whole video yields roughly 150 to 300 frames in total. A value of 2, for example, yields about 120 frames from a 60-second clip.
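
The arithmetic behind this value is simple: total frames ≈ frames per second × clip length in seconds, so the rate to choose is the target frame count divided by the clip length. The small Python sketch below does this calculation; the function names are hypothetical, and the batch files or Instant-NGP's tooling may round the frame count slightly differently.

```python
def fps_range(duration_s, min_frames=150, max_frames=300):
    """Frames-per-second values that keep the total frame count in range.

    total_frames ~= fps * duration_s, so fps = target_frames / duration_s.
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return min_frames / duration_s, max_frames / duration_s

def frames_extracted(fps, duration_s):
    """Approximate number of frames extracted at a given rate."""
    return round(fps * duration_s)

print(frames_extracted(2, 60))  # the article's example: 120 frames
print(fps_range(60))            # suggested range for a 60-second clip: (2.5, 5.0)
```

For a 60-second clip this suggests a value between 2.5 and 5; the value of 2 from the example lands just below the recommended range at about 120 frames.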

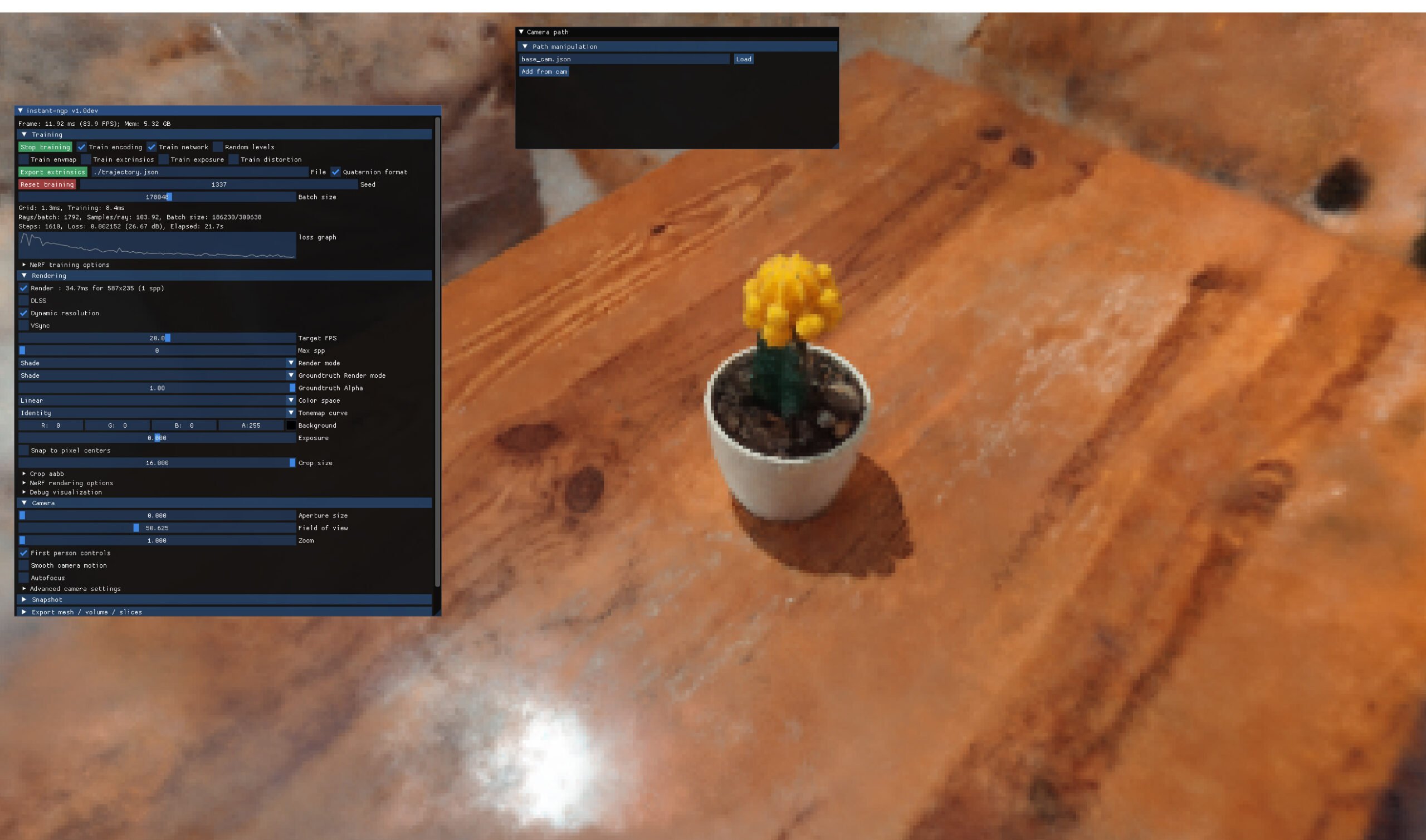

Cactus-NeRF sucks, but it's my first try

Once the process is complete, the Instant-NGP window opens and training starts automatically. As with the fox example, you can now adjust the settings, turn on DLSS, or render a video.

My first attempt is full of artifacts, which can probably be fixed easily, for example by adjusting the aabb_scale value, which determines how far out the NeRF implementation traces rays. Still, the whole process, including recording the video, took me under five minutes. My GPU was an RTX 3060 Ti.
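
The aabb_scale value is stored in the dataset's transforms.json file. According to the Instant-NGP documentation as we understand it, it should be a power of two no larger than 128, with larger values letting rays be traced further out into a scene's background. The sketch below assumes that file layout and those limits; both function names and the path in the usage comment are hypothetical, and the dataset has to be loaded into Instant-NGP again for a change to take effect.

```python
import json
from pathlib import Path

def validated_aabb_scale(scale):
    """Check a candidate aabb_scale (assumed: power of two from 1 to 128)."""
    if not isinstance(scale, int) or scale < 1 or scale > 128 or scale & (scale - 1):
        raise ValueError(f"aabb_scale must be a power of two between 1 and 128, got {scale!r}")
    return scale

def set_aabb_scale(transforms_path, scale):
    """Rewrite the aabb_scale key in a transforms.json file, keeping other keys."""
    path = Path(transforms_path)
    data = json.loads(path.read_text())
    data["aabb_scale"] = validated_aabb_scale(scale)
    path.write_text(json.dumps(data, indent=2))
    return data

# Usage (hypothetical path): widen the scene box for a cluttered outdoor shot.
# set_aabb_scale("my_cactus/transforms.json", 16)
```

Trying a few powers of two and comparing the results is a cheap experiment, since retraining only takes seconds to minutes.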