Intel takes aim at Nvidia with new Gaudi 3 AI chip

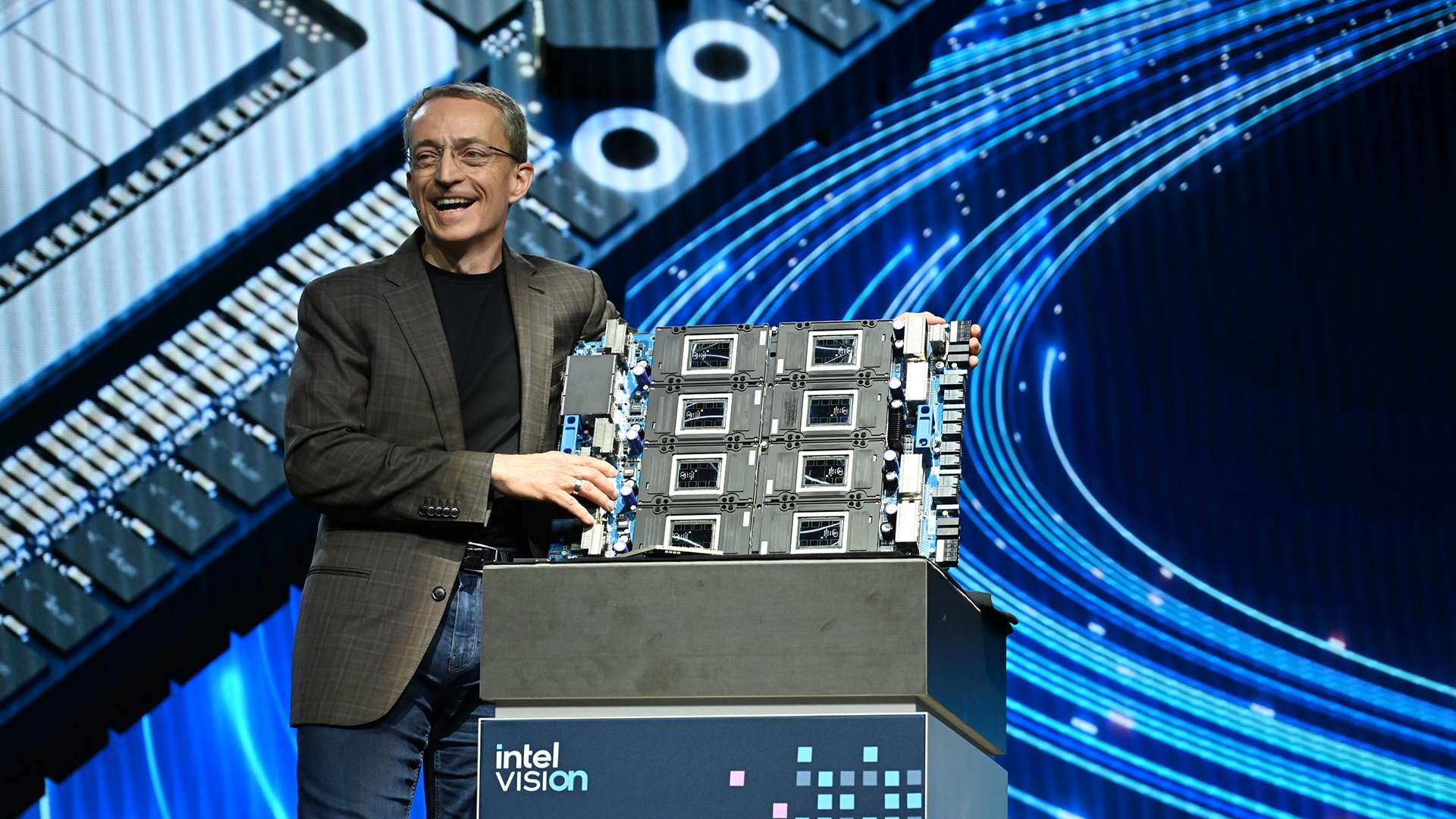

Intel officially introduced its new AI accelerator, Gaudi 3, at its Vision 2024 event.

According to the company, Gaudi 3 is expected to cut training time for the 7B and 13B parameter Llama2 models and the 175B parameter GPT-3 model by about 50% compared to the Nvidia H100. Intel also expects Gaudi 3 to beat the H100 and H200 GPUs in inference throughput by about 50% and 30% on average, respectively, with results varying by model.

The standard Gaudi 3 features 96 MB of onboard SRAM cache with 12.8 TB/s bandwidth and 128 GB of HBM2e memory with 3.7 TB/s peak bandwidth. According to Intel, the chip offers twice the FP8 and four times the BF16 processing power, as well as twice the network bandwidth and 1.5 times the memory bandwidth of its predecessor. The accelerator, built on a 5 nm process, is also said to be significantly cheaper than the H100. Nvidia, however, has already announced its next generation of chips based on the Blackwell architecture.
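To put the memory figures in perspective, here is a rough back-of-the-envelope calculation in Python. It only uses the numbers quoted above and assumes decimal units (1 TB = 1,000 GB) and that the peak bandwidth could be sustained, which real workloads rarely achieve. The variable names are illustrative and do not come from Intel.

```python
# Figures quoted in the article (Intel's own numbers).
HBM_CAPACITY_GB = 128          # HBM2e capacity
HBM_BANDWIDTH_TBPS = 3.7       # peak HBM bandwidth
SRAM_BANDWIDTH_TBPS = 12.8     # on-chip SRAM bandwidth
MEMORY_BANDWIDTH_GAIN = 1.5    # stated gain over the predecessor

# Assumption: decimal units, and peak bandwidth sustained the whole time.
GB_PER_TB = 1000

# Time to read the entire HBM once, in milliseconds (about 34.6 ms).
full_sweep_ms = HBM_CAPACITY_GB / (HBM_BANDWIDTH_TBPS * GB_PER_TB) * 1000

# Memory bandwidth of the predecessor implied by the 1.5x claim (about 2.47 TB/s).
implied_predecessor_tbps = HBM_BANDWIDTH_TBPS / MEMORY_BANDWIDTH_GAIN

# How much faster the SRAM cache is than HBM (about 3.5x).
sram_to_hbm_ratio = SRAM_BANDWIDTH_TBPS / HBM_BANDWIDTH_TBPS

print(f"Full HBM sweep: {full_sweep_ms:.1f} ms")
print(f"Implied predecessor bandwidth: {implied_predecessor_tbps:.2f} TB/s")
print(f"SRAM vs. HBM bandwidth: {sram_to_hbm_ratio:.1f}x")
```

The sweep time matters for inference because a large language model typically has to read its weights from memory for every generated token, so memory bandwidth sets a rough ceiling on single-stream token rate.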

Intel plans open platform for AI

Gaudi 3 will allow companies to flexibly scale AI systems from single nodes to mega clusters with tens of thousands of accelerators. Intel is relying on open, community-based software and standardized Ethernet networking. Gaudi 3 will be available to OEMs including Dell, HPE, Lenovo, and Supermicro beginning in Q2 2024. Intel also announced new customers and partners for the Gaudi accelerator, including Airtel, Bosch, IBM, NAVER, and SAP.

Intel also plans to create an open platform for enterprise AI with partners including SAP, Red Hat, and VMware. The goal is to accelerate the adoption of secure GenAI systems based on the RAG (Retrieval-Augmented Generation) approach, which allows proprietary data sources to be combined with open-source language models.
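The RAG idea can be illustrated with a minimal Python sketch. It is a toy under stated assumptions, not Intel's platform: the documents are made up, retrieval uses simple word-count cosine similarity instead of learned embeddings, and the final call to a language model is left out. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Combine retrieved proprietary text with the user question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical stand-in for a company's internal knowledge base.
DOCS = [
    "Invoices are archived after 90 days.",
    "Support tickets are answered within two business days.",
    "The cafeteria opens at 8 am.",
]

# The prompt would then be sent to an open-source language model.
prompt = build_prompt("When are invoices archived?", DOCS)
print(prompt)
```

The model itself never needs to be trained on the proprietary data; the relevant passages are looked up at query time and passed in as context.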
