LinkedIn shares insights into the challenges of putting LLMs to work

LinkedIn has spent the last six months working on new generative AI features for job and content search. The team describes the obstacles they have encountered and the solutions they have found.

The goal of the new LLM-based product was to fundamentally transform the way members search for jobs and browse job-related content.

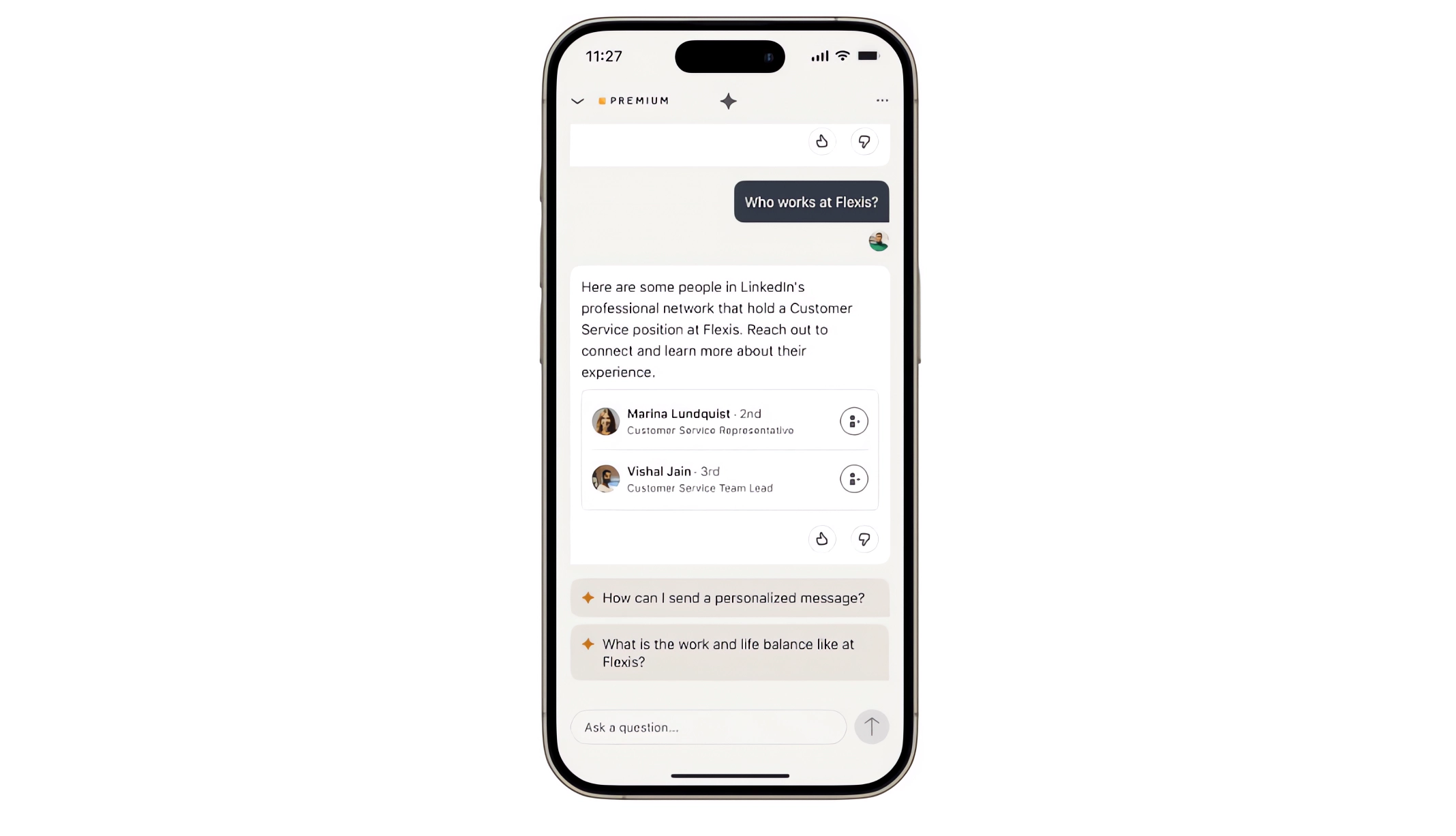

The developers wanted to make every feed post and job listing a starting point from which members can access information faster, make connections, and get advice on improving their profile or preparing for an interview.

According to the team, building a simple pipeline was easy. It consists of a routing step that selects the right AI agent, a retrieval step that gathers relevant information (retrieval-augmented generation, or RAG), and a generation step that writes the response.
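The three steps can be illustrated with a minimal Python sketch. It is not LinkedIn's implementation: the agent names, the keyword-based router, the in-memory documents, and the `llm` callable are all assumptions made for illustration. A production router would more likely be a model than a keyword table.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical per-agent documents; a real system would query search or internal services.
DOCUMENTS: Dict[str, List[str]] = {
    "job_fit": ["The role asks for three years of Python experience."],
    "interview_prep": ["Interviews for this role include a system design round."],
}

# Hypothetical keyword table for the toy router.
ROUTES: Dict[str, Tuple[str, ...]] = {
    "job_fit": ("fit", "qualified", "requirements"),
    "interview_prep": ("interview", "prepare"),
}


def route(query: str) -> str:
    """Routing step: pick the first agent whose keywords appear in the query."""
    lowered = query.lower()
    for agent, keywords in ROUTES.items():
        if any(keyword in lowered for keyword in keywords):
            return agent
    return "general"


def retrieve(agent: str) -> List[str]:
    """Retrieval step: fetch context for the chosen agent (empty if none exists)."""
    return DOCUMENTS.get(agent, [])


def build_prompt(query: str, context: List[str]) -> str:
    """Combine the retrieved context and the user question into one prompt."""
    context_block = "\n".join(f"- {doc}" for doc in context) or "- (no context found)"
    return f"Context:\n{context_block}\n\nQuestion: {query}\nAnswer:"


def answer(query: str, llm: Callable[[str], str]) -> str:
    """Generation step: run routing and retrieval, then hand the prompt to the model."""
    agent = route(query)
    return llm(build_prompt(query, retrieve(agent)))
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM client, and makes it possible to exercise the routing and retrieval logic with a stub.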

Splitting the work into independent agents developed by different teams allowed for quick development, but it also fragmented the user experience, which had to be addressed. Shared prompt templates were helpful here, among other things.

The difficulty came in evaluating the quality of the AI's responses based on human scores. The team needed consistent guidelines and a scalable scoring system. Automatic scoring is not yet good enough, the team says, so it is used only to support a developer's initial rough evaluation.

Another challenge was that LinkedIn's own APIs were not designed to be called by an LLM. LinkedIn solved the problem by wrapping the APIs in LLM "skills", which include a description of what the API does and how to use it, written so that an LLM can understand it. The same technique is used for calling non-LinkedIn APIs such as Bing search and news.
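A rough idea of what such a wrapper could look like is sketched below. The `Skill` class, its fields, the `search_jobs` stand-in, and the JSON call format are hypothetical; the source does not describe how LinkedIn's skills are actually structured.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Skill:
    """Hypothetical wrapper pairing an API call with an LLM-readable description."""
    name: str
    description: str              # what the API does and when to use it
    parameters: Dict[str, str]    # parameter name -> plain-language explanation
    handler: Callable[..., Any]   # function that performs the actual API call

    def describe(self) -> str:
        """Render the skill as text that can be placed in a prompt."""
        params = "\n".join(f"  - {name}: {text}" for name, text in self.parameters.items())
        return f"Skill: {self.name}\nUse: {self.description}\nParameters:\n{params}"


def search_jobs(title: str, location: str) -> List[Dict[str, str]]:
    # Stand-in for a real API request; returns canned data for the example.
    return [{"title": title, "location": location, "company": "ExampleCorp"}]


JOB_SEARCH = Skill(
    name="job_search",
    description="Find open positions matching a job title in a given location.",
    parameters={"title": "Job title to search for", "location": "City or region"},
    handler=search_jobs,
)


def invoke(skill: Skill, llm_output: str) -> Any:
    """Run a skill from the model's JSON arguments, rejecting undeclared parameters."""
    args = json.loads(llm_output)
    unknown = set(args) - set(skill.parameters)
    if unknown:
        raise ValueError(f"unknown parameters for {skill.name}: {sorted(unknown)}")
    return skill.handler(**args)
```

In this sketch, the text from `JOB_SEARCH.describe()` would go into the prompt, and the model's reply, for example `{"title": "Data Engineer", "location": "Berlin"}`, would be passed to `invoke`.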

The team wrote some code by hand to correct recurring LLM errors. According to the team, this is cheaper than prompting the LLM to correct itself. With this hand-written correction code, the error rate in output formatting was reduced to 0.01 percent.
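The general idea of patching model output in code can be sketched as follows. This example assumes the model is asked to reply with a JSON object, which the source does not state, and it handles only two typical mistakes: extra text around the object and trailing commas. The function name `parse_llm_json` is made up for this illustration.

```python
import json
import re
from typing import Any, Dict


def parse_llm_json(raw: str) -> Dict[str, Any]:
    """Parse a JSON object from an LLM reply after patching common formatting slips."""
    # 1. Keep only the outermost {...} span. This discards surrounding chatter
    #    and any Markdown code fence the model wrapped around its answer.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end == -1:
        raise ValueError("no JSON object found in model output")
    text = raw[start:end + 1]

    # 2. Remove trailing commas before a closing brace or bracket.
    #    Caveat: this simple pattern would also alter such a sequence inside a string value.
    text = re.sub(r",\s*([}\]])", r"\1", text)

    # json.loads raises json.JSONDecodeError (a ValueError) if the text is still invalid.
    return json.loads(text)
```

A fix like this runs in microseconds and costs no tokens, whereas asking the model to repair its own output requires another full LLM call.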

The long road to optimal LLM performance

Another problem was ensuring consistent output quality. The initial rapid progress—80 percent of the product worked in the first month—slowed as they approached 100 percent.

Each additional percent became a challenge, according to the team. Aspects such as capacity, latency, and cost had to be balanced.

For example, complex prompting methods such as Chain of Thought reliably improve results but increase latency and costs. It took the team another four months to reach 95 percent.

"When the expectation from your product is that 99%+ of your answers should be great, even using the most advanced models available still requires a lot of work and creativity to gain every 1%," the team writes.

Overall, the report shows that while the use of generative AI seems tantalizingly simple, there are many challenges when it comes to putting it to productive use. The LinkedIn team is still working to optimize the product, which is expected to launch soon.
