Meaningless fillers enable complex thinking in large language models

Researchers have found that specifically trained LLMs can solve complex problems just as well using dots like "......" instead of full sentences. This could make it harder to monitor what these models are actually computing.

The researchers trained Llama language models to solve a difficult math problem called "3SUM", where the model has to find three numbers that add up to zero.
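To make the task concrete, here is a minimal sketch of the plain 3SUM decision problem as described above: given a list of numbers, decide whether any three of them add up to zero. The function name `has_three_sum` is made up for illustration, and the exact variant used in the study may differ from this textbook form.

```python
from itertools import combinations


def has_three_sum(numbers):
    """Return True if any three entries at distinct positions sum to zero.

    Brute force: checks every triple, so the work grows cubically
    with the length of the list.
    """
    return any(a + b + c == 0 for a, b, c in combinations(numbers, 3))


if __name__ == "__main__":
    print(has_three_sum([-5, 1, 4, 9]))  # -5 + 1 + 4 == 0
    print(has_three_sum([1, 2, 3]))      # no triple sums to zero
```

The point of the sketch is that every triple has to be checked in principle, which is why the task gets hard to answer in one step as the input grows.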

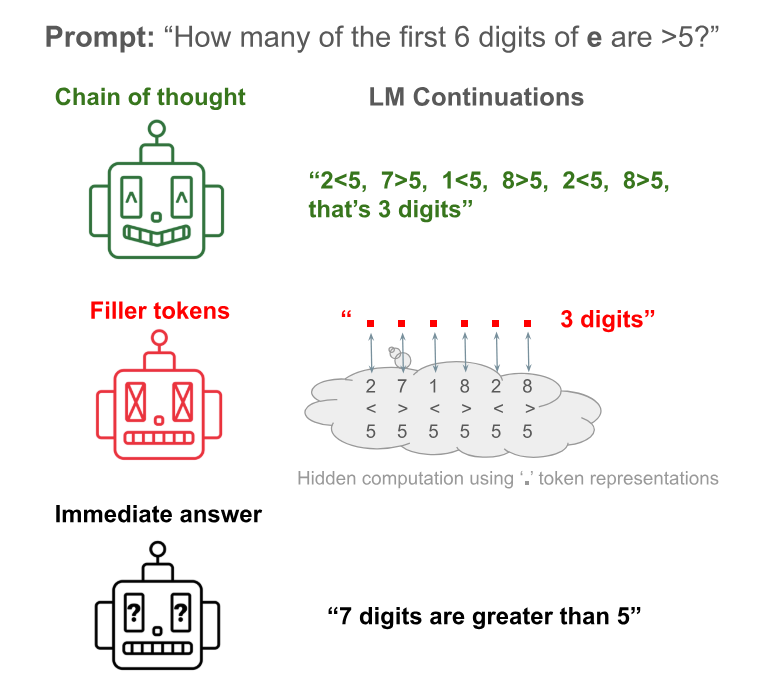

Usually, AI models solve such tasks by explaining the steps in full sentences, known as "chain of thought" prompting. But the researchers replaced these natural language explanations with repeated dots, called filler tokens.
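The difference between the three setups compared in the article (direct answer, chain of thought, filler tokens) can be sketched as follows. This is an illustrative assumption about how such sequences might be laid out, not the researchers' actual data format; `build_example`, the `mode` names, and the space-separated layout are all hypothetical.

```python
def build_example(question, reasoning_steps, answer, mode, filler="."):
    """Assemble one text sequence in one of three styles.

    mode="direct":           question followed immediately by the answer
    mode="chain_of_thought": question, written-out steps, then the answer
    mode="filler":           question, one filler token per step, then the answer
    """
    if mode == "direct":
        middle = []
    elif mode == "chain_of_thought":
        middle = list(reasoning_steps)
    elif mode == "filler":
        # Same number of intermediate tokens, but they carry no readable content.
        middle = [filler] * len(reasoning_steps)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return " ".join([question, *middle, answer])


if __name__ == "__main__":
    steps = ["step-1", "step-2", "step-3"]
    for mode in ("direct", "chain_of_thought", "filler"):
        print(mode, "->", build_example("Q", steps, "A", mode))
```

In the filler variant the model gets the same number of intermediate positions as in the chain-of-thought variant, but nothing a human reader could follow.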

Surprisingly, the models using dots performed as well as those using natural language reasoning with full sentences. As the tasks became more difficult, the dot models outperformed models that responded directly without any intermediate reasoning.

The researchers discovered the models were actually using the dots for calculations relevant to the task. The more dots available, the more accurate the answer was, suggesting more dots could provide the model with greater "thinking capacity".

They suspect the dots act as placeholders where the model inserts various numbers and checks if they meet the task's conditions. This allows the model to answer very complex questions it couldn't solve all at once.

Co-author Jacob Pfau says this result poses a key question for AI safety: As AI systems increasingly "think" in hidden ways, how can we ensure they remain reliable and safe?

The finding aligns with recent research showing longer chain-of-thought prompts can boost language model performance, even if the added content is off-topic, essentially just multiplying tokens.

The researchers think it could be worthwhile to train AI systems to handle filler tokens from the outset, even though the training process is challenging. The effort may pay off when the problems LLMs need to solve are highly complex and can't be solved in a single step.

Additionally, the training data must include enough examples where the problem is broken into smaller, simultaneously processable parts.

If these criteria are met, the dot method could also work in regular AI systems, helping them answer tough questions without it being obvious from their responses.

However, training models to use dots is considered difficult because it's unclear exactly what the model calculates with them, and the approach doesn't work well for problems that require a specific sequence of steps.

Popular chatbots like ChatGPT can't do this kind of dot reasoning out of the box; they need to be trained for it. So chain-of-thought prompting is still the standard approach to improving LLM reasoning.
