Meta's new high-tech Aria Gen 2 glasses are the ultimate AI training data collector

Meta has released a deep technical breakdown of its Aria Gen 2 research glasses, offering the first comprehensive look at the hardware since the device was announced in February.

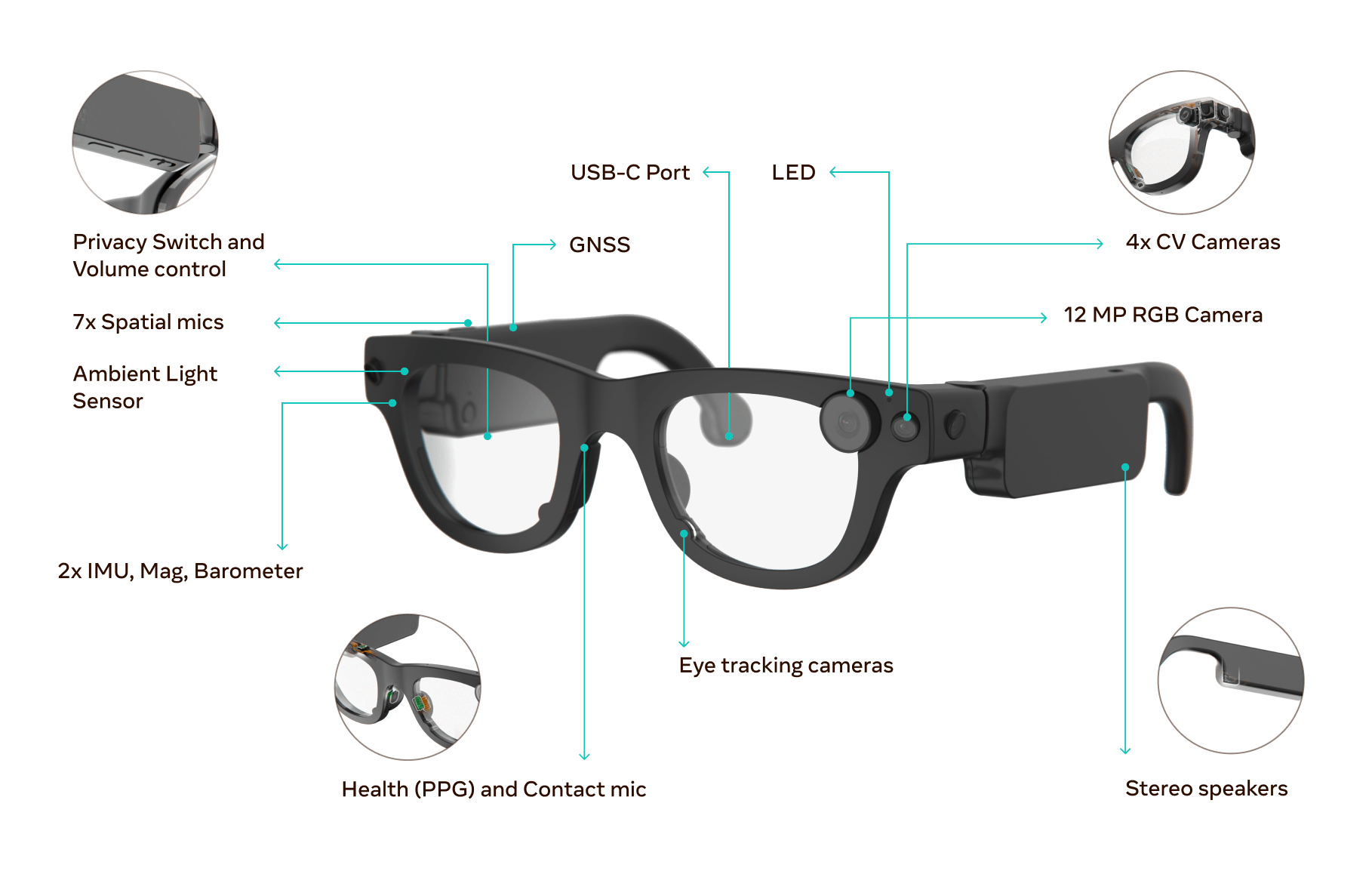

The company says Aria Gen 2 represents a major leap from the original 2020 model. The updated glasses weigh between 74 and 76 grams, depending on the size, and come in eight different frame variants to fit a range of face and head shapes. Foldable temples make them more compact and portable.

One of the most significant changes is the jump from two to four computer vision cameras. The new global shutter sensors expose every pixel at the same time, which avoids the rolling-shutter distortion that otherwise appears when the glasses move quickly.

The cameras now support a high dynamic range (HDR) of 120 decibels—up from 70 decibels in the first generation. This improvement lets Aria Gen 2 capture scenes with both very bright and very dark areas in sharp detail, such as when someone steps from a dark room into sunlight.
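To put the two decibel figures in perspective, here is a minimal sketch that converts them into contrast ratios and photographic stops. It assumes the 20·log10 convention commonly used for image sensor dynamic range; the article does not say which convention Meta uses, and the function names are illustrative.

```python
import math


def db_to_contrast_ratio(db: float) -> float:
    """Brightest-to-darkest ratio for a dynamic range given in dB.

    Assumption: dB = 20 * log10(ratio), the usual image sensor convention.
    """
    return 10 ** (db / 20)


def db_to_stops(db: float) -> float:
    """Same dynamic range expressed in stops (doublings of light)."""
    return math.log2(db_to_contrast_ratio(db))


if __name__ == "__main__":
    for label, db in [("first generation", 70), ("Aria Gen 2", 120)]:
        ratio = db_to_contrast_ratio(db)
        print(f"{label}: {db} dB is about {ratio:,.0f}:1, or {db_to_stops(db):.1f} stops")
```

Under that assumption, 120 dB corresponds to a ratio of roughly a million to one, compared with a few thousand to one at 70 dB.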


Stereo overlap between the main cameras has also increased, from 35 to 80 degrees. This wider overlap allows the system to capture more of the same scene from both cameras, which translates to more accurate depth measurements.
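Depth can only be triangulated for points that both cameras see, which is why the overlap matters. The sketch below uses the textbook pinhole stereo relation Z = f·B/d for rectified cameras. The focal length, baseline, and disparity values are hypothetical and are not Aria Gen 2 specifications; the article does not describe Meta's actual depth pipeline.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a point seen by both cameras (rectified pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("point must be visible in both cameras with positive disparity")
    return focal_px * baseline_m / disparity_px


def depth_error_per_pixel(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Approximate depth change in meters caused by a one-pixel disparity error."""
    return depth_m ** 2 / (focal_px * baseline_m)


if __name__ == "__main__":
    # Hypothetical camera parameters, chosen only for illustration.
    focal_px, baseline_m = 500.0, 0.10
    z = depth_from_disparity(focal_px, baseline_m, disparity_px=25.0)
    err = depth_error_per_pixel(focal_px, baseline_m, z)
    print(f"depth: {z:.2f} m, sensitivity: {err:.3f} m per pixel of disparity error")
```

The second function shows why depth estimates degrade quickly with distance: the error grows with the square of the depth.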


New sensors for heart rate and voice detection

Meta has added three new sensors to Aria Gen 2. A calibrated ambient light sensor (ALS) can distinguish between different light sources and includes an ultraviolet mode to separate sunlight from artificial light. This lets the system automatically adjust camera settings as lighting conditions change.

Two more sensors are built into the nose pad. A contact microphone picks up the user's voice through vibrations in the nose, a method designed to work even in loud environments by relying on structure-borne sound instead of air conduction.

The nose pad also houses a photoplethysmography (PPG) sensor that measures heart rate by detecting subtle changes in blood flow beneath the skin.
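As a rough illustration of how a heart rate can be read from a PPG trace, the sketch below counts peaks in a synthetic, noise-free signal. The signal, the sample rate, and the `estimate_bpm` helper are assumptions made for this example; real PPG data is noisy and usually needs filtering first, and the article does not describe Meta's own processing.

```python
import math


def estimate_bpm(samples: list[float], sample_rate_hz: float) -> float:
    """Estimate beats per minute from the average spacing between signal peaks."""
    mean = sum(samples) / len(samples)
    # A peak is a local maximum that lies above the signal mean.
    peaks = [
        i
        for i in range(1, len(samples) - 1)
        if samples[i] > mean and samples[i - 1] < samples[i] >= samples[i + 1]
    ]
    if len(peaks) < 2:
        raise ValueError("need at least two peaks to estimate a heart rate")
    mean_interval_s = (peaks[-1] - peaks[0]) / (len(peaks) - 1) / sample_rate_hz
    return 60.0 / mean_interval_s


if __name__ == "__main__":
    # Synthetic pulse wave: 1.2 Hz (72 beats per minute), 50 Hz sampling, 10 seconds.
    rate_hz = 50.0
    signal = [math.sin(2 * math.pi * 1.2 * n / rate_hz) for n in range(500)]
    print(f"estimated heart rate: {estimate_bpm(signal, rate_hz):.1f} bpm")
```

On this clean input the estimate lands close to the 72 beats per minute used to generate the signal, limited only by the sampling resolution.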

A wind tunnel demonstration shows that the contact microphone can even pick up whispers when conventional microphones fail.

Aria Gen 2 uses a sub-GHz radio to synchronize multiple devices with sub-millisecond precision, meaning the clocks agree to within less than a thousandth of a second. Meta says this hardware-based approach is much more accurate than the software-based sync used in the previous generation.

On-device machine learning with a dedicated coprocessor

AI processing on Aria Gen 2 happens on a dedicated coprocessor designed for energy-efficient machine learning tasks. All machine perception algorithms, software that helps the glasses interpret their surroundings, run directly on the device.


The Visual Inertial Odometry (VIO) system fuses camera images with motion sensor data to track the headset's position and orientation in all six degrees of freedom (6DoF). This gives the device a constant sense of where it is and which way it's facing.

Aria Gen 2 also features eye tracking that detects where the user is looking, captures gaze direction for each eye, calculates the vergence point (where both eyes focus), tracks blinks, and measures pupil size. Hand tracking follows hand movement in 3D and reconstructs the articulated positions of finger joints in real time.

Research access in 2025

Meta will open applications for access to Aria Gen 2 throughout the year. Interested researchers and developers can sign up for updates. Before the device becomes widely available, Meta plans to show interactive demos at CVPR 2025, a major computer vision conference scheduled for June 11-15.

Aria Gen 2 was officially announced in February 2025, with a claimed battery life of six to eight hours of continuous use. Project Aria itself has been running since 2020, producing datasets like Ego-Exo4D for training computer vision and robotics models. Dialogues recorded through the glasses' microphones have also been turned into training data.

Meta will likely use first-person video from Aria devices to train AI assistants that can help people with everyday tasks. Like Google and OpenAI, the company aims to create a general-purpose AI assistant that people will use to interact with both the physical and digital worlds.
