Meta's new Llama 3.2 brings tiny models to mobile devices and adds image understanding

Just a few months after the last release, Meta has unveiled the next addition to the Llama series. Following the large 405B model of Llama 3.1, version 3.2 introduces two tiny models for smartphones and two larger models capable of understanding images.

Meta's latest release initially includes two text models with one and three billion parameters, respectively. Both can run on smartphones and are designed to summarize text, rewrite content, or call specific functions in other apps.


To achieve this, Meta worked closely with major hardware manufacturers such as Qualcomm, MediaTek, and Arm. According to Meta, local processing primarily offers advantages in terms of speed and data protection.

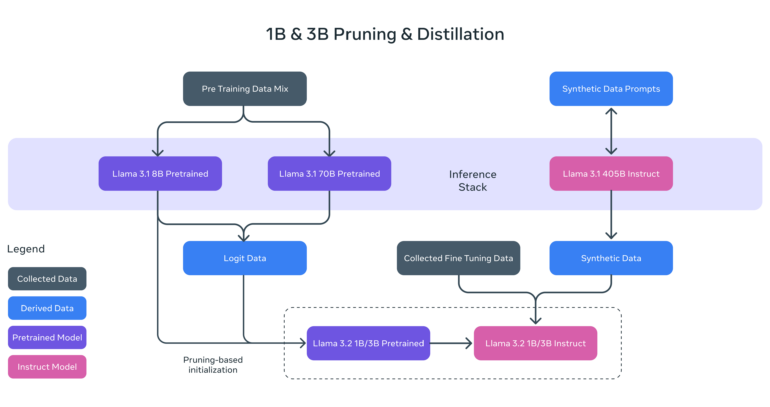

To optimize the lightweight 1B and 3B models, Meta combined pruning with knowledge distillation from larger teacher models. Starting from the earlier Llama 3.1 8B model, structured pruning in a single pass systematically removed parts of the network and adjusted the remaining weights.
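
The two ideas in this paragraph can be illustrated with a minimal sketch. It is not Meta's training code: the function names, the norm-based pruning criterion, and the temperature value are assumptions chosen for illustration. The first function removes whole neurons (rows of a weight matrix) with the smallest norm, and the second measures how far a student model's predictions are from a teacher's.

```python
import math

def prune_neurons(weights, keep):
    """Structured pruning sketch: keep the `keep` rows (neurons) with the largest L2 norm.

    The norm criterion is an assumption; the article does not say how Meta ranked units.
    """
    norms = [math.sqrt(sum(w * w for w in row)) for row in weights]
    strongest = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)[:keep]
    # Restore the original neuron order after selecting the strongest rows.
    return [weights[i] for i in sorted(strongest)]

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Toy layer with three neurons; the middle one contributes almost nothing.
layer = [[0.9, -0.8], [0.01, 0.02], [0.5, 0.4]]
pruned = prune_neurons(layer, keep=2)
```

In a real pipeline, the pruned network would then be trained to minimize a loss like this one against the teacher's outputs, which is how the smaller model recovers quality lost during pruning.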

Vision models with 11 and 90 billion parameters

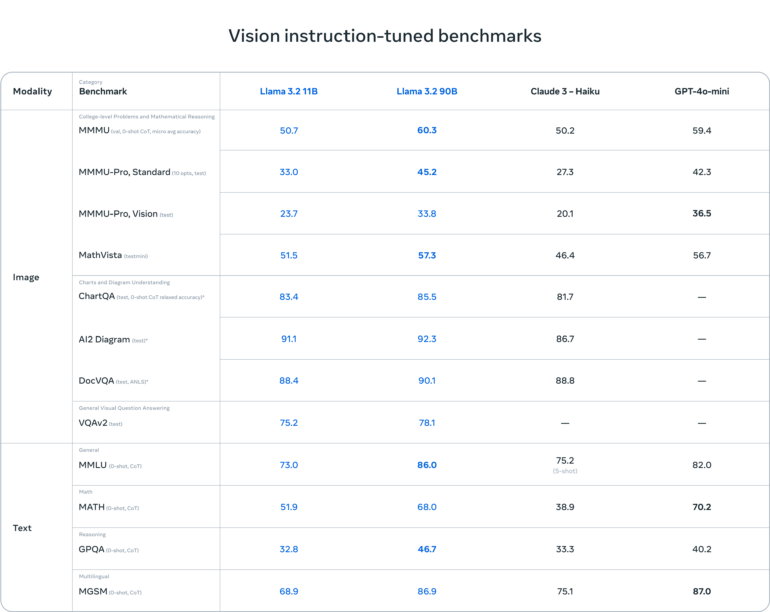

In addition to the lightweight models, Meta is releasing its first vision models with 11 and 90 billion parameters. According to Meta's benchmarks, Llama 3.2 11B and 90B can keep pace with leading closed models such as Claude 3 Haiku and GPT-4o mini in image understanding tasks. Open-source competitor Mistral also recently unveiled its first vision model, Pixtral, which has significantly fewer parameters than the larger Llama model.


To enable image input, Meta has equipped the Llama 3.2 vision models with a new type of architecture. This involves training additional adapter weights that integrate the pre-trained image encoder into the pre-trained language model.
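
A rough sketch of the adapter idea follows, assuming the adapter works through cross-attention between text tokens and image features. The single-head layout, the gate, and all names (`w_proj`, `gate`, `cross_attention_adapter`) are illustrative assumptions, not Meta's implementation. The point it shows is that only the adapter's weights need training, while the image encoder and language model stay as they were.

```python
import math

def matvec(matrix, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

def softmax(xs):
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention_adapter(text_states, image_feats, w_proj, gate):
    """Let each text token attend over projected image features.

    text_states: hidden states from the pre-trained language model, one vector per token
    image_feats: outputs of the pre-trained image encoder, one vector per patch
    w_proj, gate: the only trainable parts in this sketch (hypothetical names)
    """
    # Project image features into the language model's hidden size.
    projected = [matvec(w_proj, feat) for feat in image_feats]
    dim = len(text_states[0])
    out = []
    for token in text_states:
        scores = [sum(t * p for t, p in zip(token, patch)) / math.sqrt(dim)
                  for patch in projected]
        weights = softmax(scores)
        attended = [sum(w * patch[i] for w, patch in zip(weights, projected))
                    for i in range(dim)]
        # Residual connection: with gate = 0 the text states pass through unchanged.
        out.append([t + gate * a for t, a in zip(token, attended)])
    return out

text = [[1.0, 0.0]]                            # one text token, hidden size 2
image = [[1.0, 2.0, 3.0]]                      # one image patch, feature size 3
w_proj = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]    # maps size 3 to size 2
mixed = cross_attention_adapter(text, image, w_proj, gate=0.5)
```

With the gate at zero the function returns the text states untouched, which illustrates why this kind of design can add image input without disturbing the language model's existing text behavior.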

Unlike other open multimodal models, the Llama 3.2 vision models are available in both pre-trained and aligned versions for fine-tuning and local deployment. In terms of performance, they are on par with recently released models such as Mistral's Pixtral or Qwen 2 VL.

Llama Stack API to simplify RAG and more

To simplify development with Llama models, Meta is introducing the first official Llama Stack distributions. These allow turnkey deployment of applications with retrieval-augmented generation (RAG) and tool integration in various environments.
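
For readers unfamiliar with RAG, the pattern can be sketched in a few lines. This is a toy illustration and does not use Meta's API: the word-overlap retriever and the function names are assumptions, and a real system would use embeddings and send the resulting prompt to a model.

```python
def tokenize(text):
    """Lowercase words with surrounding punctuation stripped."""
    return {word.strip(".,?!") for word in text.lower().split()}

def retrieve(query, documents, top_k=1):
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents,
                    key=lambda doc: len(query_words & tokenize(doc)),
                    reverse=True)
    return ranked[:top_k]

def build_prompt(query, documents, top_k=1):
    """Prepend the retrieved context to the question before calling a model."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

docs = [
    "Llama 3.2 includes 1B and 3B text models for phones.",
    "The vision models have 11B and 90B parameters.",
]
prompt = build_prompt("How many parameters do the vision models have?", docs)
```

The model then answers from the retrieved passage rather than from memory alone, which is the part a turnkey distribution is meant to wire up for developers.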

For the API, Meta is collaborating with AWS, Databricks, Dell, and Together AI, among others. There is also a command line interface (CLI) and code for various programming languages.

Meta lacks the home advantage on mobile

The release of Llama 3.2 is another step in Meta's effort to make open source AI - or its interpretation of it - the standard. Whether it will gain traction on smartphones remains to be seen, as Android with Gemini Nano and iOS with Apple Intelligence have their own deeply integrated solutions for local AI processing.

With the vision upgrade for the Llama models, Meta has given its AI assistant, Meta AI, an important function that will benefit many users on the company's numerous social media platforms. In the long term, this could impact competitors like ChatGPT, which received similar capabilities around a year ago.

The Llama 3.2 models are available for download at llama.com and Hugging Face, as well as through a broad ecosystem of partner platforms.
