Meta's "Transfusion" blends language models and image generation into one unified model

Meta AI introduces "Transfusion," a new approach that combines language models and image generation in a unified AI system. The model achieves results comparable to specialized image generation systems while also improving text processing.

Researchers from Meta AI have developed "Transfusion," a method that integrates language models and image generation into a single AI system. According to the research team, Transfusion combines the strengths of language models in processing discrete data like text with the capabilities of diffusion models in generating continuous data like images.

According to Meta, current image generation systems often rely on a pre-trained text encoder to process the input prompt and pair it with a separate diffusion model that generates the image. Many multimodal language models follow a similar pattern, connecting pre-trained text models to specialized encoders for other modalities.

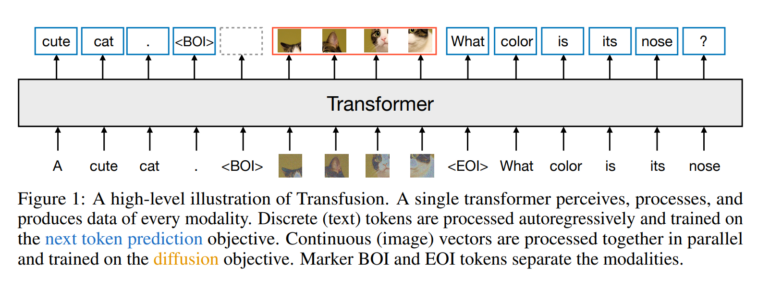

Transfusion, by contrast, uses a single, unified Transformer architecture for all modalities, trained end to end on text and image data. Each modality gets its own loss function: next-token prediction for text and a diffusion objective for images.
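To illustrate how two objectives can drive one training step, here is a minimal sketch. It assumes a standard cross-entropy loss for text and a DDPM-style noise-prediction mean squared error for images, summed with a weighting factor. The function names and the weight `lam` are hypothetical choices for illustration, not Meta's code or published settings.

```python
import math
from typing import Sequence


def next_token_loss(logits: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Mean cross-entropy over text positions (next-token prediction)."""
    total = 0.0
    for row, target in zip(logits, targets):
        # Numerically stable log-sum-exp for the softmax normalizer.
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[target]
    return total / len(targets)


def diffusion_loss(pred_noise: Sequence[Sequence[float]],
                   true_noise: Sequence[Sequence[float]]) -> float:
    """Mean squared error between predicted and actual noise, over all patch values."""
    total, n = 0.0, 0
    for p_vec, t_vec in zip(pred_noise, true_noise):
        for p, t in zip(p_vec, t_vec):
            total += (p - t) ** 2
            n += 1
    return total / n


def combined_loss(text_logits, text_targets, pred_noise, true_noise, lam: float = 1.0) -> float:
    """Text loss plus a weighted image loss; `lam` is a hypothetical weighting hyperparameter."""
    return next_token_loss(text_logits, text_targets) + lam * diffusion_loss(pred_noise, true_noise)
```

With uniform logits over a four-token vocabulary, the text term equals ln 4. In a real model, both terms would be computed from the same Transformer's outputs over one mixed text-and-image sequence, so a single backward pass updates shared weights for both modalities.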

To process text and images together, each image is converted into a sequence of image patches, so text tokens and image patches can share a single sequence. A special attention mask keeps attention causal across the sequence while letting the patches of each image attend to one another in both directions, which allows the model to capture relationships within an image.
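The sequence layout and mask can be sketched in a few lines. This assumes a mask that is causal over the whole sequence and fully bidirectional within each image, as described in the Transfusion paper. For simplicity, the sketch cuts raw pixel grids into patches; the published model works on a compressed latent representation of the image instead. The helpers `patchify` and `transfusion_mask` are hypothetical names.

```python
from typing import List, Tuple

Grid = List[List[float]]


def patchify(image: Grid, p: int) -> List[List[float]]:
    """Split an H x W grid into non-overlapping p x p patches, each flattened row-major."""
    h, w = len(image), len(image[0])
    if h % p or w % p:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, h, p):          # patches are emitted in raster order
        for left in range(0, w, p):
            patches.append([image[top + i][left + j] for i in range(p) for j in range(p)])
    return patches


def transfusion_mask(segments: List[Tuple[str, int]]) -> List[List[bool]]:
    """Return mask[q][k] = True if position q may attend to position k.

    `segments` lists ("text" | "image", length) spans in sequence order.
    Attention is causal everywhere, plus bidirectional inside each image span.
    """
    span_ids = []  # image-span index per position, or None for text positions
    for idx, (kind, length) in enumerate(segments):
        span_ids.extend([idx if kind == "image" else None] * length)
    n = len(span_ids)
    mask = [[False] * n for _ in range(n)]
    for q in range(n):
        for k in range(n):
            causal = k <= q
            same_image = span_ids[q] is not None and span_ids[q] == span_ids[k]
            mask[q][k] = causal or same_image
    return mask
```

For example, a 4×4 image with `p=2` yields four patches; placed between two text tokens and one trailing text token, it gives a seven-position sequence in which the first image patch can already see the last one, while the text before the image cannot look ahead into it.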

This integrated approach also differs from methods like Meta's Chameleon, which convert images into discrete tokens and then treat them like text. According to the research team, Transfusion preserves the continuous representation of images, avoiding information loss due to quantization.
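Why quantization loses information can be shown with a toy example: replacing each continuous patch vector with its nearest entry in a fixed codebook, as discrete-token approaches do, introduces an error that a continuous representation avoids. The two-dimensional codebook below is a hypothetical stand-in; real image tokenizers learn much larger codebooks over higher-dimensional features.

```python
from typing import List, Sequence


def nearest_code(vec: Sequence[float], codebook: List[Sequence[float]]) -> int:
    """Index of the codebook entry closest to `vec` by squared Euclidean distance."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, codebook[i])),
    )


def quantization_error(vectors: List[Sequence[float]], codebook: List[Sequence[float]]) -> float:
    """Mean squared error after replacing each vector with its nearest code."""
    total, n = 0.0, 0
    for v in vectors:
        code = codebook[nearest_code(v, codebook)]
        for a, b in zip(v, code):
            total += (a - b) ** 2
            n += 1
    return total / n


# Hypothetical codebook: the four corners of the unit square.
CODEBOOK = [[0.0, 0.0], [1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
```

Passing `[[0.2, 0.9], [0.7, 0.1]]` maps the vectors to codes 3 and 2 with a mean squared error of 0.0375; keeping the vectors continuous, as Transfusion does, has no such rounding step.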

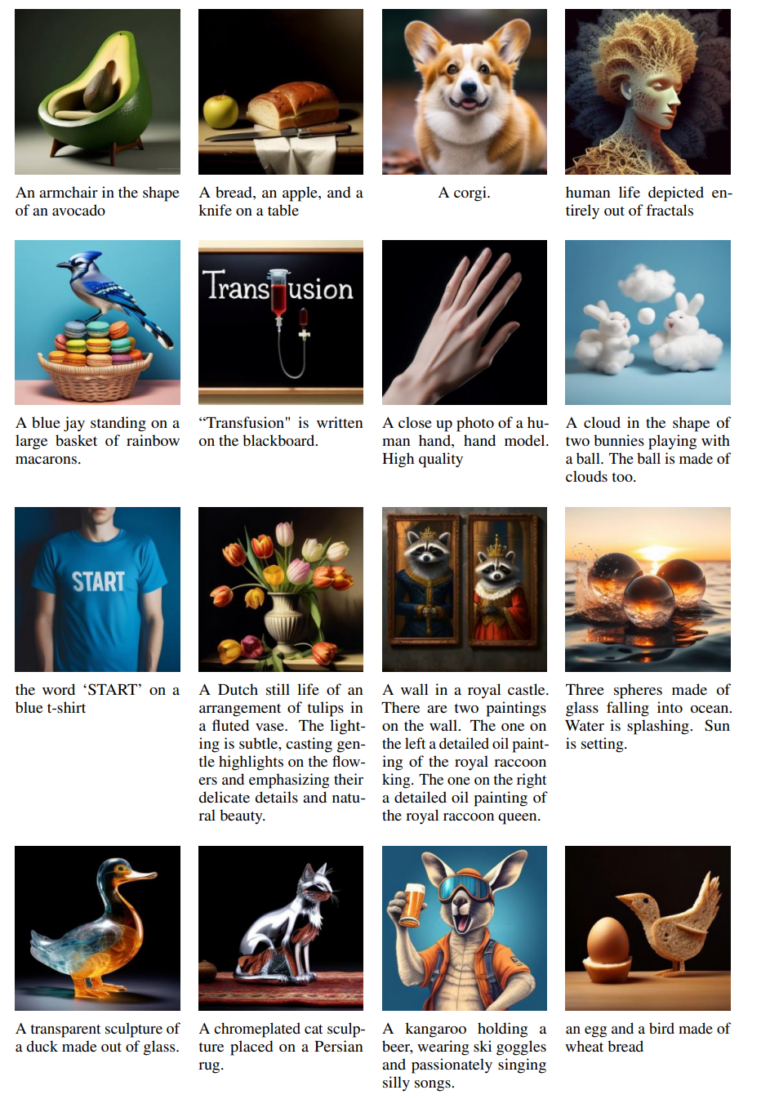

Transfusion achieves high image and text quality in initial tests

Experiments show that Transfusion scales more efficiently than comparable approaches, including training a language model on discrete image tokens. In image generation, it achieved results similar to specialized models with significantly less compute. Surprisingly, integrating image data also improved the model's text processing capabilities.

The researchers trained a 7-billion-parameter model on 2 trillion text and image tokens. In image generation, this model achieved results similar to established systems such as DALL-E 2, while also being able to process text.

The researchers see potential for further improvements, such as integrating additional modalities or alternative training methods.

- Get the latest AI news from The Decoder.