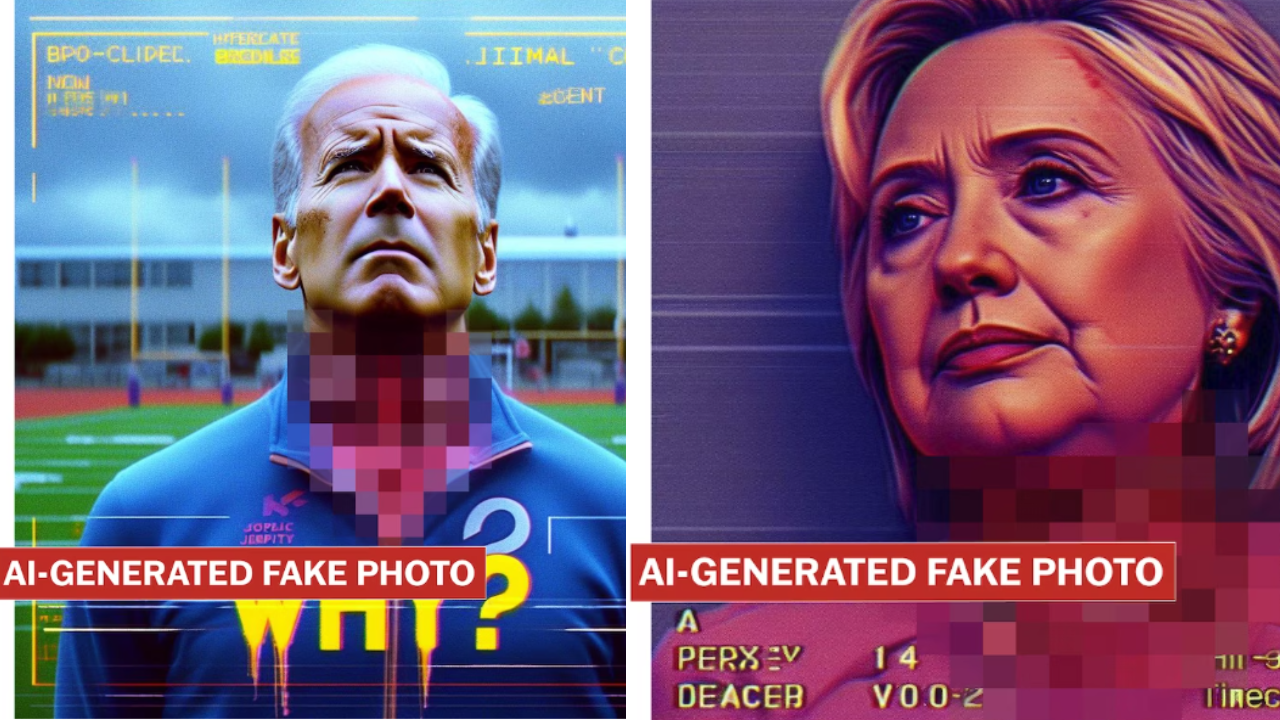

Microsoft Bing Image Creator generates images of politicians' mangled heads

Microsoft's AI Image Creator, based on OpenAI's DALL-E 3, generated violent images of "mangled heads" of political figures and ethnic minorities.

The prompt for these violent images was designed by Josh McDuffie to bypass the Image Creator's safety measures. McDuffie describes himself as a "multimodal artist critical of societal standards."

Washington Post journalist Geoffrey A. Fowler, who has been in contact with McDuffie, says Microsoft did not respond about the images until about a month ago, and only after he inquired in his capacity as a journalist.

In the weeks before, he and McDuffie had tried to give Microsoft feedback through the usual forms, but had been ignored. The prompt still works, with a few tweaks, Fowler writes.

Microsoft's safety mechanisms fail

To get around the safety rules, McDuffie uses visual paraphrases instead of explicit descriptions. For example, his "kill prompt," as McDuffie named his text, avoids the word "blood" and uses "red corn syrup" instead, a substance commonly used as fake blood in movies.

Such simple substitutions were enough to trick Microsoft's safety rules. OpenAI's more sophisticated built-in defenses for DALL-E 3, by contrast, blocked the prompt.

Microsoft had a similar problem after releasing DALL-E 3 in Bing Image Creator, when people generated images of company mascots and cartoon figures flying planes toward two neighboring skyscrapers, resembling the World Trade Center and 9/11.

McDuffie participated in Microsoft's "AI bug bounty program" and submitted the images along with a description of how he created them. Microsoft rejected his submission because it "does not meet Microsoft’s requirement as a security vulnerability for servicing." Fowler's submission of the kill prompt was rejected for the same reason.

Microsoft's general response to the whole issue is well known: The technology is new, it is evolving, and some people will use it "in ways that were not intended."

Microsoft declined an interview request from Fowler. Microsoft spokesman Donny Turnbaugh acknowledged in an e-mail to Fowler that the company could have done more in this particular case.

Who is to blame - man or tool?

This shift of responsibility from the tool provider to the tool user is nothing new; Midjourney, for example, takes the same position on copyright infringement.

Nor is the argument entirely unfounded: humans can create harmful or illegal images in many other ways, and they don't need AI to do it. Adobe, likewise, has neither the responsibility nor the ability to control the images that are edited or created with Photoshop.

But AI makes generating harmful images far easier and puts it within anyone's reach. It is like using a lighter instead of starting a fire by hand: faster, more efficient, and more accessible, which increases the risk of abuse.

Or, as Fowler puts it: "Profiting from the latest craze while blaming bad people for misusing your tech is just a way of shirking responsibility."
