Mistral AI launches Ministral 8B, a much more capable successor to its popular Mistral 7B LLM

French AI startup Mistral AI has announced two new language models designed for use on edge devices and in edge computing scenarios.

The new models, called Ministral 3B and Ministral 8B, are part of the "Ministraux" family. According to Mistral, these are currently the most powerful AI systems in their class for edge use cases. Both models support context lengths of up to 128,000 tokens.

Applications range from translation to robotics

The Ministraux models are designed for use cases where local processing and privacy are critical. Mistral says they are well-suited for tasks such as on-device translation, offline intelligent assistants, local data analysis, and autonomous robotics.

When combined with larger language models such as Mistral Large, the company says Ministraux can also act as efficient intermediaries for function calls in multi-step workflows.

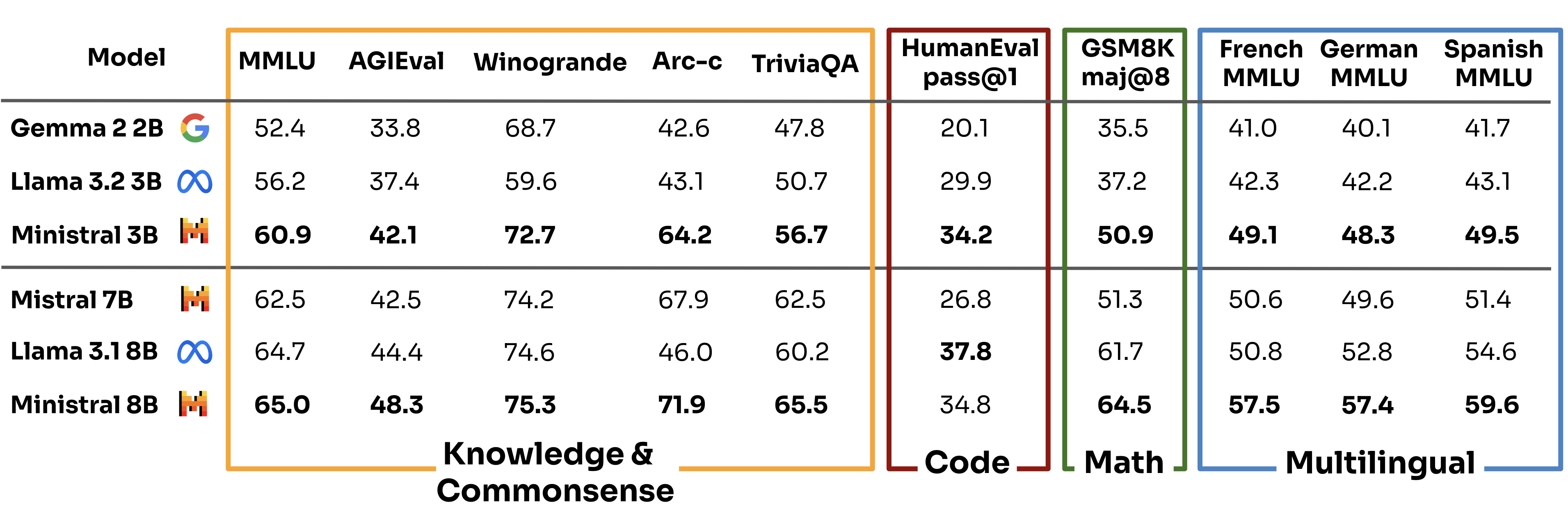

Benchmarks provided by Mistral indicate that Ministral 3B and 8B outperform comparable models like Google's Gemma 2 2B and Meta's Llama 3.1 8B in most categories. The company highlights the performance of the smaller Ministral 3B, which surpasses its larger predecessor, Mistral 7B, on some benchmarks. Mistral 7B is widely considered one of the most successful open-source models.

The larger Ministral 8B clearly outperforms Mistral 7B across all benchmarks. Mistral AI reports that Ministral 8B excels particularly in knowledge, common-sense reasoning, function calling, and multilingual capabilities. Mistral did not compare it to the newer Llama 3.2 11B, which is likely a bit better (73 on MMLU) but falls outside the sub-10-billion-parameter class.

Pricing and availability

The new models are available now. Mistral AI offers Ministral 8B via API for $0.10 per million tokens, while Ministral 3B costs $0.04 per million tokens. Commercial licenses are available for on-premises use.
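
To put the per-token prices in perspective, here is a minimal Python sketch that estimates API cost from a token count. It assumes a single flat rate per model, since the article does not distinguish input from output tokens. The dictionary keys are illustrative labels rather than official API model identifiers, and `estimate_cost` is a hypothetical helper.

```python
from decimal import Decimal

# Per-million-token API prices in USD, as quoted in the article.
# The keys are illustrative labels, not official API model identifiers.
PRICE_PER_MILLION_TOKENS = {
    "ministral-8b": Decimal("0.10"),
    "ministral-3b": Decimal("0.04"),
}


def estimate_cost(model: str, tokens: int) -> Decimal:
    """Estimate the API cost in USD for processing `tokens` tokens.

    Assumes one flat rate for all tokens (an assumption; the article
    does not say whether input and output are billed differently).
    """
    if tokens < 0:
        raise ValueError("tokens must be non-negative")
    return PRICE_PER_MILLION_TOKENS[model] * Decimal(tokens) / Decimal(1_000_000)


if __name__ == "__main__":
    # Cost of filling one full 128,000-token context window per model.
    for model in PRICE_PER_MILLION_TOKENS:
        print(f"{model}: ${estimate_cost(model, 128_000)}")
```

Under these assumptions, one full 128,000-token context costs about 1.3 cents on Ministral 8B and about half a cent on Ministral 3B.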

For research purposes, Mistral AI provides the model weights for Ministral 8B Instruct. The company says both models will soon be available through cloud partners such as Google Vertex and AWS.
