News readers are open to AI assisting journalists, but not replacing them entirely

A recent study reveals that news consumers are becoming more open to artificial intelligence in journalism, but with clear boundaries and specific conditions.

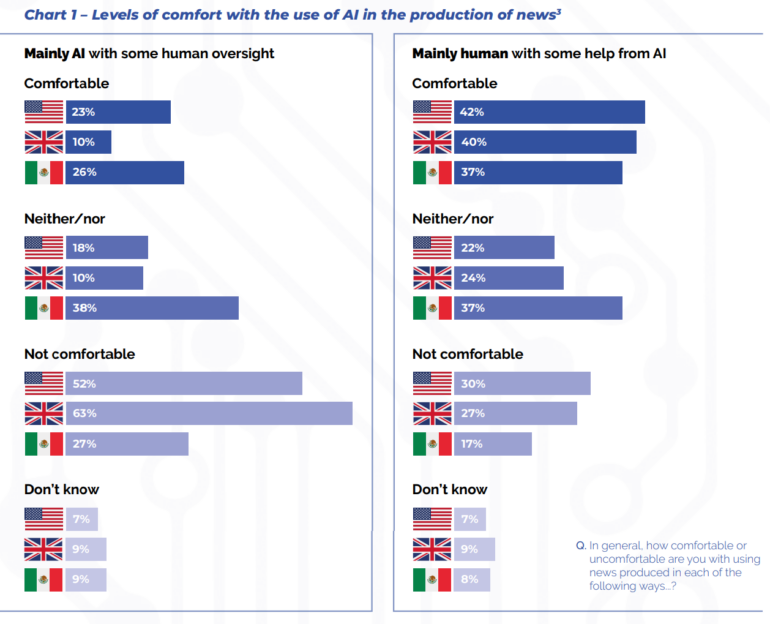

The study, conducted by market research firm CRAFT with the Reuters Institute for the Study of Journalism, found that acceptance of AI in news production varies widely depending on its application. Researchers surveyed people in Mexico, the UK, and the US.

"Audiences are most comfortable with generative AI being used behind the scenes, to aid journalistic practice that is not visible to the audience but aids news production," says Konrad Collao, who led the study. Many respondents also see benefits in presenting news in new formats, such as summaries or personalized content.

No good journalism without emotion

People are far more skeptical of AI fully automating content creation. "News consumers are least comfortable with AI being used to generate synthetic content," says Collao.

Respondents worry that this could lower journalistic quality and strip out human perspective and emotion. The exception is purely factual information, such as sports scores or stock prices.

Most agree that AI use should be disclosed, but not in every case. Many think disclosure is unnecessary for background tasks but essential when AI is used to produce content.

The study also found that people reject the idea of applying different standards for AI use depending on the topic. "Although some topics are considered more or less consequential, news consumers do not accept that news should be more or less truthful or accurate across different topics. The same good journalistic principles should be applied to all topics," notes Collao.

"Check, check and check again"

Based on the results, the researchers recommend that news organizations use AI mainly behind the scenes and for new presentation formats, while remaining cautious about AI-generated content. They should also be transparent about their AI use without overstating it. The study proposes five principles for using AI in news production:

- Human review: all content should be reviewed by a human regardless of AI use. This is considered a basic good working practice.

- Acceptable AI use: AI is most acceptable in assisting journalists with simple tasks and rephrasing content. It is least acceptable in the synthetic creation of content.

- Exception for objective facts: AI use is acceptable for generating content with objectively verifiable facts if this is disclosed. One example is the BBC's automated reporting of election results.

- Acceptability for illustrative images: AI is acceptable for creating supporting, stylized images, but not for realistic depictions, especially for important topics such as war or politics.

- Exceptions for less important topics: for less consequential topics, where realism is not required and no people are depicted, the rules on realistic depictions apply less strictly.

Collao advises news organizations: "Check, check and check again – a basic principle of good working and journalistic practice thrown into sharp relief by the advent of generative AI. Ultimately, audiences feel that almost everything that is published should be checked by a human."

The study's authors describe a critical juncture closely tied to AI. In one scenario, people could lose trust in all information. In another, trust in news brands could hold steady or even rise if their status as responsible actors is strengthened.

This trust must be "earned, re-earned, and maintained," and news organizations' own handling of generative AI could have an impact on how this plays out.
