Nvidia lands massive Meta deal and pushes into CPU market to fend off growing competition

Key Points

- Meta has signed a multi-year contract with Nvidia that covers millions of chips: in addition to Blackwell and Rubin GPUs, it includes Grace and Vera CPUs as standalone products for the first time. Analysts estimate the value to be in the tens of billions of dollars.

- The deal marks a change in strategy for Nvidia, which is now selling its CPUs separately and targeting the growing inference market. While training large AI models and running inference on them require GPUs, the more cost-effective and energy-efficient CPUs are sufficient for many smaller inference tasks.

- Meta is taking a different approach from other hyperscalers such as Amazon and Google, which develop their own processors. The company relies on Nvidia hardware, while its own chip development is reportedly struggling with technical problems and delays.

The Facebook parent has signed a multiyear deal with Nvidia that includes not only GPUs but also, for the first time, standalone Nvidia processors. The agreement marks a strategic shift for both companies.

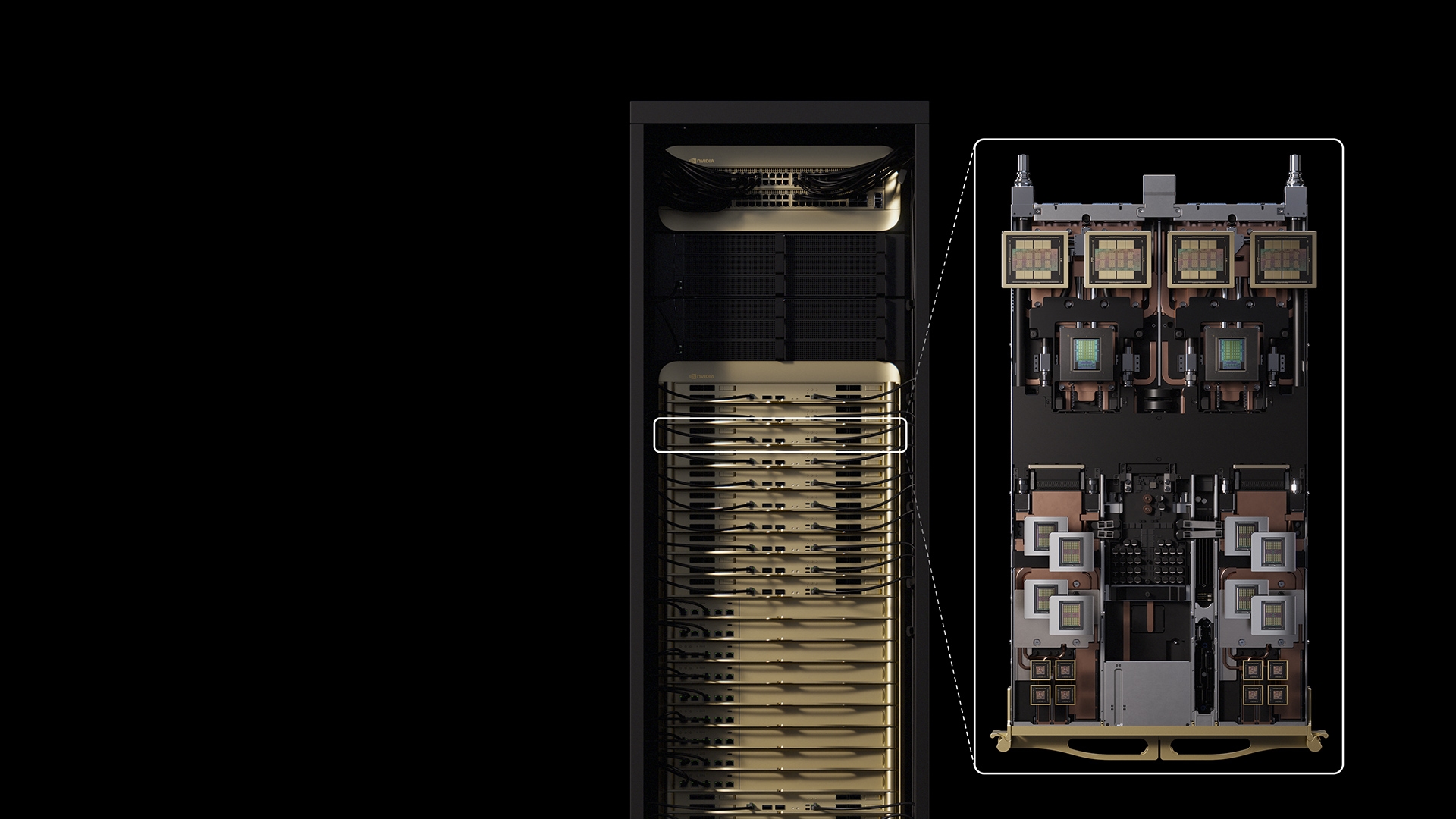

Meta has committed to purchasing millions of Nvidia chips in a multiyear agreement, including current Blackwell GPUs, upcoming Rubin GPUs, and, for the first time, standalone Grace and Vera CPUs. Neither company disclosed a price for the deal. Ben Bajarin, CEO and principal analyst at tech consultancy Creative Strategies, estimated it would be worth billions of dollars. According to The Register, the deal is likely to contribute tens of billions to Nvidia's bottom line.

Meta CEO Mark Zuckerberg had previously announced plans to nearly double the company's AI infrastructure spending in 2026 to as much as $135 billion.

Nvidia's CPU push targets the inference market

The most notable aspect of the deal is not the GPU purchase but Meta's decision to deploy Nvidia's CPUs as standalone products at large scale. Until now, Nvidia's Grace processors were almost exclusively available as part of so-called "Superchips" that combine a CPU and GPU on a single module. Nvidia officially changed its sales strategy in January 2026 and began offering the CPUs separately. The first named customer at that time was neocloud provider CoreWeave.

The company is targeting a growing market: while the AI industry in recent years was dominated by GPU-heavy training of large models, the focus is increasingly shifting toward inference, the process of running trained models. For many of these tasks, GPUs are overkill.

"We were in the 'training' era, and now we are moving more to the 'inference era,' which demands a completely different approach," Bajarin told the Financial Times.

According to The Register, Ian Buck, Nvidia's VP and General Manager of Hyperscale and HPC, said that the Grace processor can "deliver 2x the performance per watt on those back end workloads" such as running databases. He added that "Meta has already had a chance to get on Vera and run some of those workloads, and the results look very promising."

The Grace CPU features 72 Arm Neoverse V2 cores and uses LPDDR5x memory, which offers advantages in bandwidth and space. Nvidia's next-generation Vera CPU brings 88 custom Arm cores with simultaneous multi-threading and confidential computing capabilities. According to Nvidia, Meta plans to use the latter for private processing and AI features in its WhatsApp encrypted messaging service. Vera deployment is planned for 2027.

Nvidia's decision to offer CPUs as standalone products also puts the company in direct competition with Intel and AMD in the server market.

Meta bucks the industry trend

By purchasing standalone Nvidia CPUs, Meta is taking a different path than most hyperscalers. Amazon relies on its own Graviton processors, Google on Axion. Meta, by contrast, is buying from Nvidia, even though the company is simultaneously working on its own AI chips. According to the Financial Times, however, Meta's in-house chip strategy had "suffered some technical challenges and rollout delays."

Nvidia, for its part, is facing mounting pressure. Google, Amazon, and Microsoft have all announced new in-house chips in recent months. OpenAI has co-developed a chip with Broadcom and struck a significant deal with AMD. Several startups such as Cerebras are offering specialized inference chips that could threaten Nvidia's dominance. In December, Nvidia acquired talent from inference chip company Groq in a licensing deal to shore up its position in the market.

Late last year, Nvidia's stock fell four percent after reports emerged that Meta was in talks with Google about using Google's Tensor Processing Units. No such deal has been announced.

Meta is also not exclusively an Nvidia customer. According to The Register, the company operates a fleet of AMD Instinct GPUs and was directly involved in the design of AMD's Helios rack systems, which are due out later this year.
