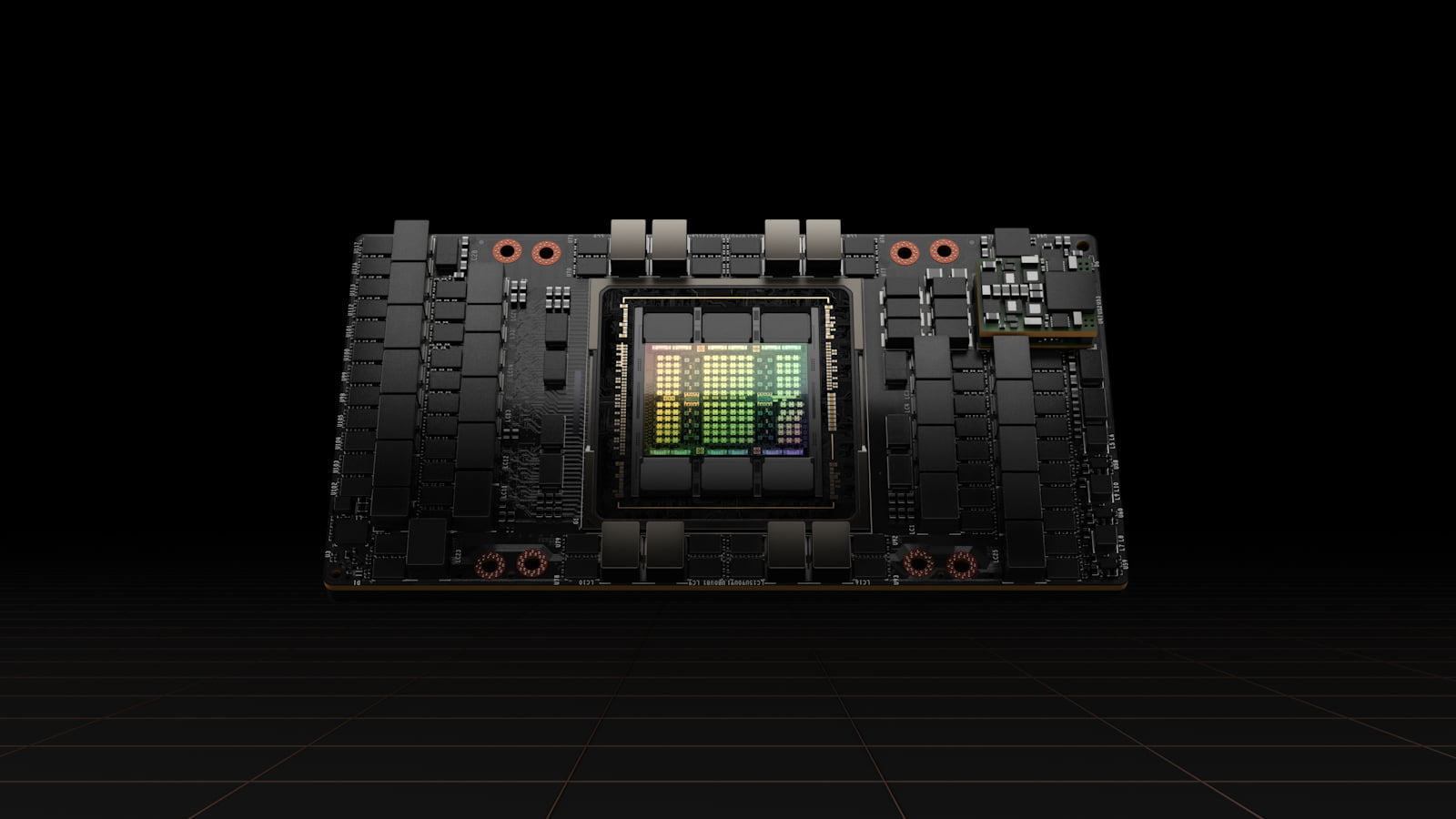

Nvidia's new H100 GPU for artificial intelligence is in high demand, generating sizable profits for the company. The AI chip is seeing unprecedented interest from researchers and tech firms racing to build the next big generative AI model.

Financial services firm Raymond James estimates that it costs Nvidia around $3,320 to manufacture an H100 GPU. With selling prices ranging from $25,000 to $40,000, that works out to a markup of roughly 1,000% over the manufacturing cost.
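A "1,000%" figure can mean either a markup on cost or a margin on price, and the two are easy to confuse. The minimal Python sketch below computes both from the figures quoted above. The unit cost is Raymond James's estimate rather than an official Nvidia number, and the function names are purely illustrative.

```python
# Figures quoted in the article; the unit cost is an analyst estimate (Raymond James),
# not an official Nvidia number.
UNIT_COST = 3_320                        # estimated manufacturing cost per H100, USD
PRICE_LOW, PRICE_HIGH = 25_000, 40_000   # quoted selling-price range, USD


def markup_pct(price: float, cost: float) -> float:
    """Markup over cost: (price - cost) / cost, expressed as a percentage."""
    return (price - cost) / cost * 100


def gross_margin_pct(price: float, cost: float) -> float:
    """Gross margin on price: (price - cost) / price, expressed as a percentage."""
    return (price - cost) / price * 100


for price in (PRICE_LOW, PRICE_HIGH):
    print(f"${price:,}: markup {markup_pct(price, UNIT_COST):.0f}%, "
          f"gross margin {gross_margin_pct(price, UNIT_COST):.1f}%")
```

Run as written, this prints a markup of about 653% at $25,000 and about 1,105% at $40,000, with gross margins of about 86.7% and 91.7%. The "up to 1,000%" figure is therefore a markup on cost. A margin on price can never exceed 100%.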

This estimate should be taken with a pinch of salt, however, because it is unclear whether it covers anything beyond the raw manufacturing cost. The full cost per unit is likely higher once packaging and materials are factored in, but Nvidia is still earning massive margins on every accelerator it sells.

> Raymond James estimates it costs Nvidia $3,320 to make a H100, which is then sold to customers for $25,000 to $30,000.
>
> tae kim (@firstadopter), August 16, 2023

According to the Financial Times, TSMC is on track to deliver 550,000 H100 GPUs to Nvidia this year. At $25,000 to $40,000 per unit, that implies potential H100 revenue of $13.75 billion to $22 billion in 2023. With estimated margins of 60% to 90% per chip, Nvidia could make more than $10 billion in profit from H100 sales this year alone.
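The revenue and profit range above can be checked with a few lines of Python. This sketch is a rough bound and not a forecast. It uses only the shipment estimate, price range and margin range quoted in this article. It assumes that the low margin pairs with the low price and the high margin with the high price, which gives the widest plausible band.

```python
# Inputs quoted in the article: FT shipment estimate, selling-price range, margin range.
UNITS = 550_000
PRICE_LOW, PRICE_HIGH = 25_000, 40_000   # USD per H100
MARGIN_LOW, MARGIN_HIGH = 0.60, 0.90     # estimated margin per chip

# Revenue bounds: units shipped times the low and high price.
revenue_low = UNITS * PRICE_LOW
revenue_high = UNITS * PRICE_HIGH

# Assumption: pair the low margin with the low price and the high margin with the
# high price, giving the widest plausible profit band.
profit_low = revenue_low * MARGIN_LOW
profit_high = revenue_high * MARGIN_HIGH

print(f"Revenue: ${revenue_low / 1e9:.2f}B to ${revenue_high / 1e9:.2f}B")
print(f"Profit:  ${profit_low / 1e9:.2f}B to ${profit_high / 1e9:.2f}B")
```

Under these inputs, revenue runs from $13.75 billion to $22 billion and profit from about $8.25 billion to $19.8 billion. The "more than $10 billion" figure holds for most combinations of price and margin, but not for the most pessimistic one.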

The AI accelerator is becoming a cash cow for the company, and its value is already being put to creative use: cloud provider CoreWeave has used H100 GPUs as collateral for debt financing.

Generative AI boom fuels appetite for Nvidia's product

The unprecedented demand is driven by the generative AI boom, as researchers around the world race to develop the next ChatGPT. Major customers include Microsoft, Google and OpenAI in the US, as well as tech giants in China and the Gulf.

The H100, which is in limited supply and essentially sold out until 2024, has become the go-to chip for training and running these compute-hungry generative models.

With companies such as Foxconn predicting that the AI acceleration market will be worth $150 billion by 2027, and Nvidia having recently announced another AI superchip for generative AI, we can expect a raft of new AI products once the next generation of supercomputers is up and running.