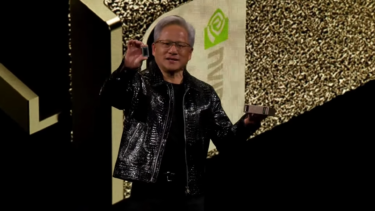

Nvidia CEO Jensen Huang announced several innovations for the company's AI business during his GTC keynote. Here is the most important information at a glance.

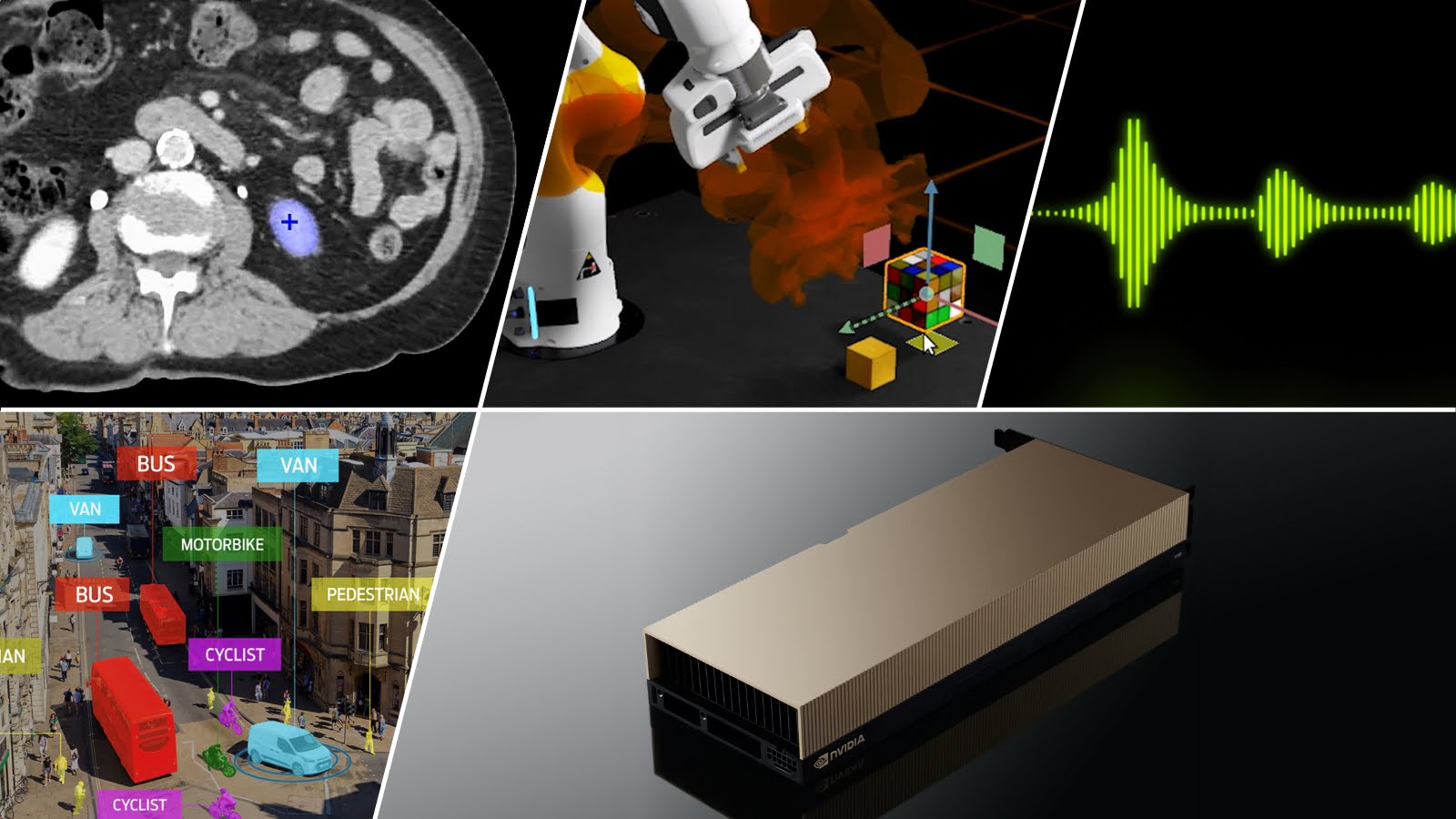

In March, Nvidia unveiled the Hopper H100 GPU. The AI accelerator is expected to replace Nvidia's successful A100 model and ran up to 4.5 times faster than A100-based systems in the latest MLPerf benchmark.

During the GTC keynote, Nvidia CEO Jensen Huang announced that partners will start selling the first H100 systems in October. The company's own DGX H100 systems are scheduled to ship in the first quarter of 2023.

With a mixed AI workload of training and inference of different AI models, an AI data center with 64 HGX H100 systems is expected to achieve the same performance as an A100 data center with 320 HGX A100 systems.

This leap is possible in part because of the integrated Transformer engine, which can process Transformer AI models faster.
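The data center comparison can be checked with simple arithmetic. The following is only a small sketch using the two system counts Nvidia quoted. The variable names are illustrative, and the result says nothing about any single workload, since Nvidia's claim refers to a mixed load of training and inference.

```python
# System counts as quoted by Nvidia for a mixed training/inference workload.
a100_systems = 320  # HGX A100 systems in the reference data center
h100_systems = 64   # HGX H100 systems said to match that performance

# How many A100 systems one H100 system stands in for, per Nvidia's claim.
consolidation_ratio = a100_systems / h100_systems

print(f"One HGX H100 system replaces about {consolidation_ratio:g} HGX A100 systems")
```

By these figures, Nvidia is claiming roughly a fivefold gain per system for this kind of mixed workload.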

Nvidia announces NeMo language model service

According to Nvidia, large-scale language models are taking on an increasingly important role in AI research and the AI market. The number of Transformer and language model papers has grown rapidly since 2017. Hopper was therefore explicitly designed for such models, Nvidia said.

In practice, Hopper is said to speed up the training of such models fivefold and inference 30-fold. According to the company, ten DGX A100 systems can currently serve ten users simultaneously with large language models, while ten DGX H100 systems can serve 300.

This performance enables the use of these models for specific applications, areas, and experiences, according to the company. Nvidia offers both the corresponding hardware and now a cloud service for large language models.

With Nvidia NeMo, companies can adapt large language models such as Megatron or T5 to their needs through prompt training and then use them. This puts Nvidia in direct competition with other providers of large language models. The focus is on enabling enterprises to build concrete products, Nvidia said.

Nvidia brings AI hardware and models to medicine

An important application area for such large language models could be medicine. Specialized variants of the models predict molecules, proteins, or DNA sequences, for example. Chemistry and biology have their own language, according to Nvidia.

With the BioNeMo cloud service, the company offers pre-trained models for chemistry and biology applications, such as ESM-1b, a language model trained on protein databases, or OpenFold, a model that predicts protein folding.

BioNeMo launches in early access in October and is part of Nvidia's Clara framework for AI applications for healthcare. Nvidia is also bringing other models for specific applications in medicine, such as image recognition via Clara Holoscan.

The company also announced a collaboration with the Broad Institute to bring Clara to the Terra Cloud Platform, an open-source platform for biomedical researchers.

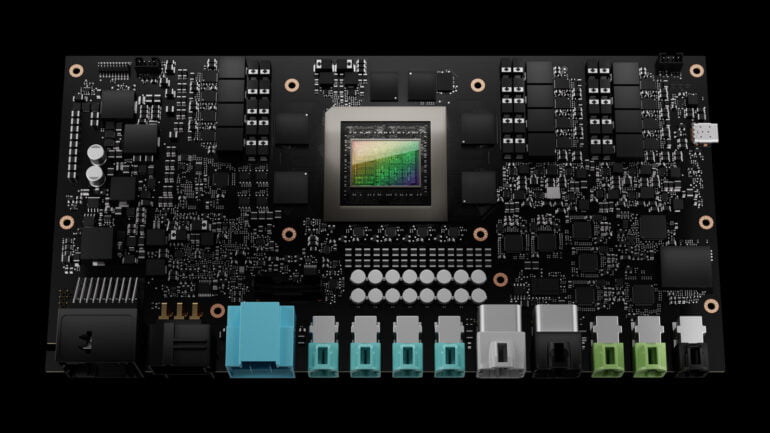

IGX systems for medicine and autonomous factories

For the medical market, Nvidia showed the IGX system, an all-in-one platform for developing and deploying AI applications in smart machines. IGX is said to enable real-time image analysis via Holoscan, for example. Companies such as Activ, Moon Surgical, and Proximie are expected to use Holoscan with IGX for their new medical platforms.

The IGX systems are also to be used in industrial automation, where they will enable smart spaces in which data from cameras, LIDAR, and radar sensors is processed simultaneously. Smart spaces in factories could reduce safety risks because companies no longer have to rely exclusively on the sensors built into robots, Nvidia said.

Jetson Orin Nano and Thor for the Edge

For edge computing, Nvidia pointed to the new Jetson Orin Nano, which can be installed in robots, for example. Orin Nano is supposed to offer 80 times higher performance compared to its predecessor. The AI models for Orin Nano can be trained in Nvidia's Isaac Sim robot environment.

In the autonomous vehicle market, Nvidia last year showed the Atlan chip for 2024 with 1,000 TOPS of computing power, four times the current Orin chip. However, autonomous vehicles need far more computing power to be even safer, Nvidia said. Therefore, the company decided to develop the Thor chip, which delivers a full 2,000 TOPS.
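The relationships between the three chips follow from the figures above. This short sketch derives them; note that the Orin value is only what the article's "four times" statement implies, not an official specification, and the variable names are illustrative.

```python
# TOPS figures as stated in the keynote coverage.
atlan_tops = 1000
thor_tops = 2000
atlan_vs_orin = 4  # Atlan was described as four times the current Orin chip

# Assumption: Orin's performance is derived from the ratio above,
# not taken from a spec sheet.
implied_orin_tops = atlan_tops / atlan_vs_orin

thor_vs_atlan = thor_tops / atlan_tops
thor_vs_orin = thor_tops / implied_orin_tops

print(f"Implied Orin performance: {implied_orin_tops:g} TOPS")
print(f"Thor vs. Atlan: {thor_vs_atlan:g}x, Thor vs. Orin: {thor_vs_orin:g}x")
```

On these numbers, Thor doubles the Atlan target and delivers about eight times the computing power implied for Orin.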

Thor is scheduled to hit the market in 2024 and will be installed in Nvidia's Drive Thor system. Drive Thor is a centralized computer for autonomous vehicles that includes the Thor chip, a next-gen GPU, and a Grace CPU. Nvidia wants it to make other in-vehicle computer systems redundant. Drive Thor is supposed to be able to run Linux, QNX, and Android, and comes with the Transformer engine of the Hopper GPUs.

According to Nvidia, the first company to opt for Thor was car manufacturer Zeekr. In total, 40 companies use the Drive platform. The platform is also designed for training autonomous vehicles and is receiving updates such as a neural engine that can replicate real-world driving in a realistic simulation. This should simplify training for edge cases, for example.