Ilya Sutskever, co-founder of OpenAI, explains why unsupervised learning works and how it relates to supervised learning. The core concept is compression - good compressors can become good predictors.

In a talk at the Simons Institute for the Theory of Computing at UC Berkeley, Ilya Sutskever, co-founder of OpenAI, presented research that tries to rigorously explain unsupervised learning through the lens of compression. While looking for topics he could talk about at the conference, he realized there was a lot of research he could not talk about, he said - referencing OpenAI's shift from being open to being more secretive about their projects.

Since Sutskever couldn't talk about OpenAI's current technical work, he revisited an old idea from 2016 about using compression to understand unsupervised learning, the underlying technique behind ChatGPT and GPT-4.

Stronger compressors find more shared structure

Supervised learning is well understood mathematically - if the training error is small, and the model capacity is right, learning results are guaranteed. However unsupervised learning lacks this formal understanding.

He suggested thinking about unsupervised learning through the lens of compression. A good compressor that compresses two data sets together will find and exploit patterns shared between them, just as unsupervised learning finds structure in unlabeled data that helps the main task, he suggested.

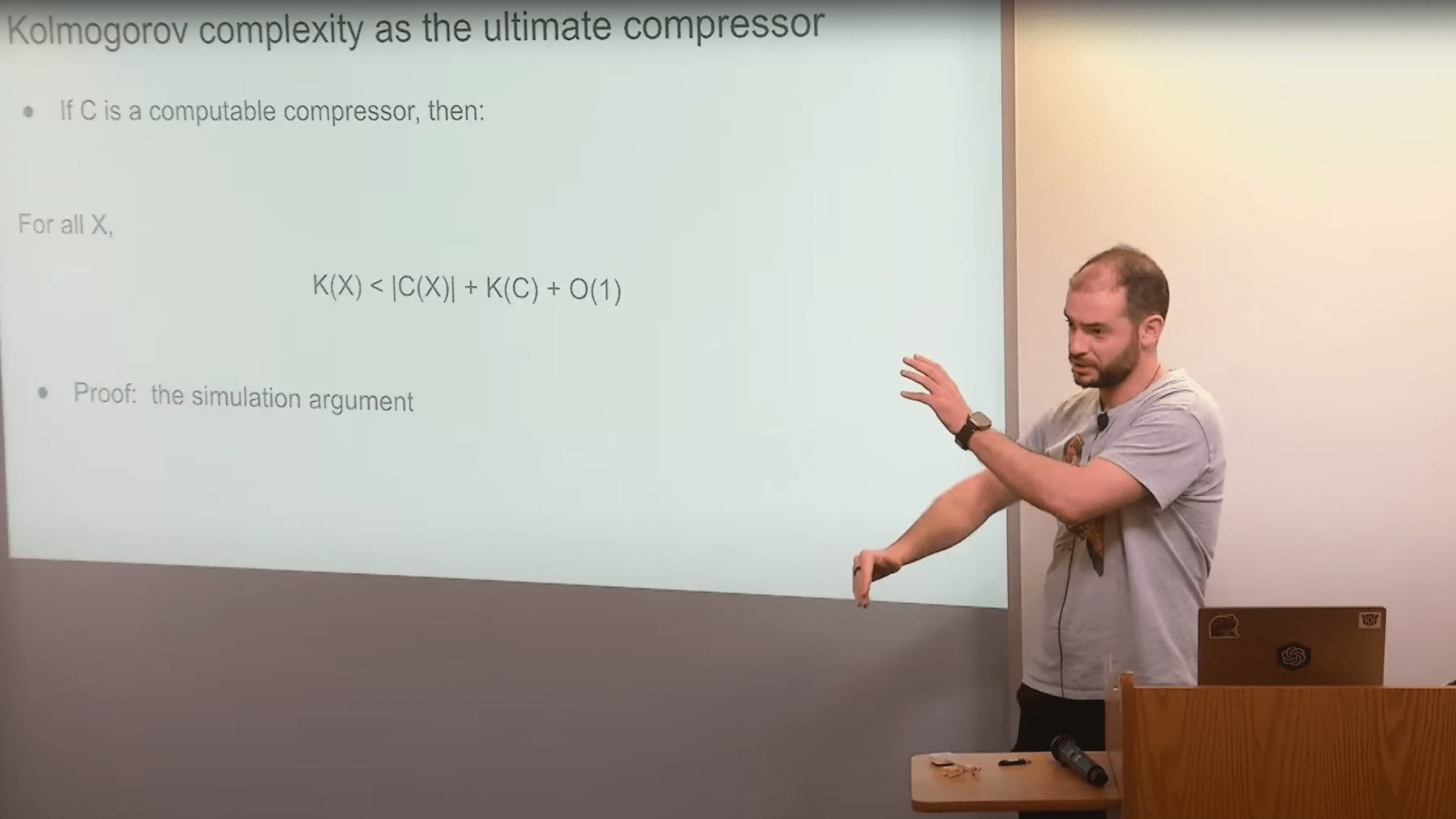

Stronger compressors find more shared structure, with Kolmogorov complexity, the length of the shortest program that outputs some data, providing a theoretical optimal compressor, Sutskever told the audience. He argued that large neural networks trained with gradient descent approximate this optimal compressor, so we can think of unsupervised learning as approximating optimal data compression.

iGPT's pixel prediction task as a compressor

As an example, he discussed iGPT, an OpenAI experiment from 2020, in which the team trained a transformer model to predict the next pixel in images.

This pixel prediction task, when performed on large image datasets, resulted in good representations that transferred well to supervised image classification tasks and generative capabilities.

According to Sutskever, this supports the view that compression goals like next-pixel prediction, which find regularities in the data, produce useful representations for downstream tasks and go beyond just modeling the data distribution because the pixel prediction task specifically encourages finding long-range dependencies in images to make accurate predictions.

Aside from providing a better framework for understanding unsupervised learning, these ideas can be seen as fundamental to OpenAI's way of building AI models, as they seem to believe that such techniques can push the limits of AI's capabilities, contrasting e.g. the stochastic parrot arguments.

Check out the full talk on YouTube.