OpenAI has a "highly accurate" ChatGPT text detector, but won't release it for now

Update:

- Added OpenAI's statement.

Update from August 5, 2024:

Following the Wall Street Journal's coverage, OpenAI revised an earlier blog post on AI content detection, confirming the existence of its watermark-based text detector.

The detector remains accurate when a text has undergone only minor changes such as paraphrasing, but struggles with major changes such as translations, rewrites using different AI models, or the insertion and removal of special characters between words.

Ultimately, this makes bypassing the detector "trivial," according to OpenAI. The company also mentions concerns that it could unfairly target certain groups, such as non-native English speakers who use ChatGPT to improve their writing.

While the watermarking method has a low false positive rate for individual texts, applying it to large volumes of content would still lead to a significant number of misidentifications overall.
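To make the scale effect concrete, here is a minimal arithmetic sketch. The false positive rate and the text volume are hypothetical values chosen for illustration; OpenAI has not published either figure in this form.

```python
def expected_false_positives(num_human_texts: int, false_positive_rate: float) -> float:
    """Expected number of human-written texts wrongly flagged as AI-generated."""
    return num_human_texts * false_positive_rate


# Assumed numbers (not from OpenAI): a 0.1 percent false positive rate
# applied to 10 million human-written texts.
flagged = expected_false_positives(10_000_000, 0.001)
print(f"{flagged:,.0f} human-written texts flagged in error")
```

Even a rate that looks negligible for a single document produces thousands of wrongly flagged authors once the check runs at platform scale.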

OpenAI is researching metadata as an alternative method of verifying the provenance of text. This research is in the "early stages of exploration," and its effectiveness remains to be seen. Metadata is promising because, unlike watermarks, it can be cryptographically signed, eliminating false positives, according to OpenAI.
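The difference between a statistical watermark and signed metadata can be sketched in a few lines. The example below is only an illustration under stated assumptions: the key, the metadata fields, and the function names are hypothetical, and Python's standard `hmac` module stands in for whatever signature scheme a real system would use (more likely a public-key signature, so that third parties can verify without the secret).

```python
import hashlib
import hmac
import json

# Hypothetical provider secret, for demonstration only.
SECRET_KEY = b"demo-key-not-for-production"


def sign_text(text: str, model: str) -> dict:
    """Build provenance metadata and a tag that binds it to the exact text."""
    metadata = {
        "model": model,
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
    }
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    tag = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return {"metadata": metadata, "tag": tag}


def verify(text: str, record: dict) -> bool:
    """Return True only if the tag is valid and the text is unchanged."""
    payload = json.dumps(record["metadata"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    tag_ok = hmac.compare_digest(expected, record["tag"])
    text_hash = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return tag_ok and record["metadata"]["sha256"] == text_hash


record = sign_text("An AI-generated paragraph.", "demo-model")
print(verify("An AI-generated paragraph.", record))   # unchanged text
print(verify("An AI-generated paragraph!", record))   # edited text
```

The check is exact rather than probabilistic: a text either carries a valid tag or it does not, so a human-written text cannot be flagged by statistical accident. In this sketch, any edit to the text also invalidates the record.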

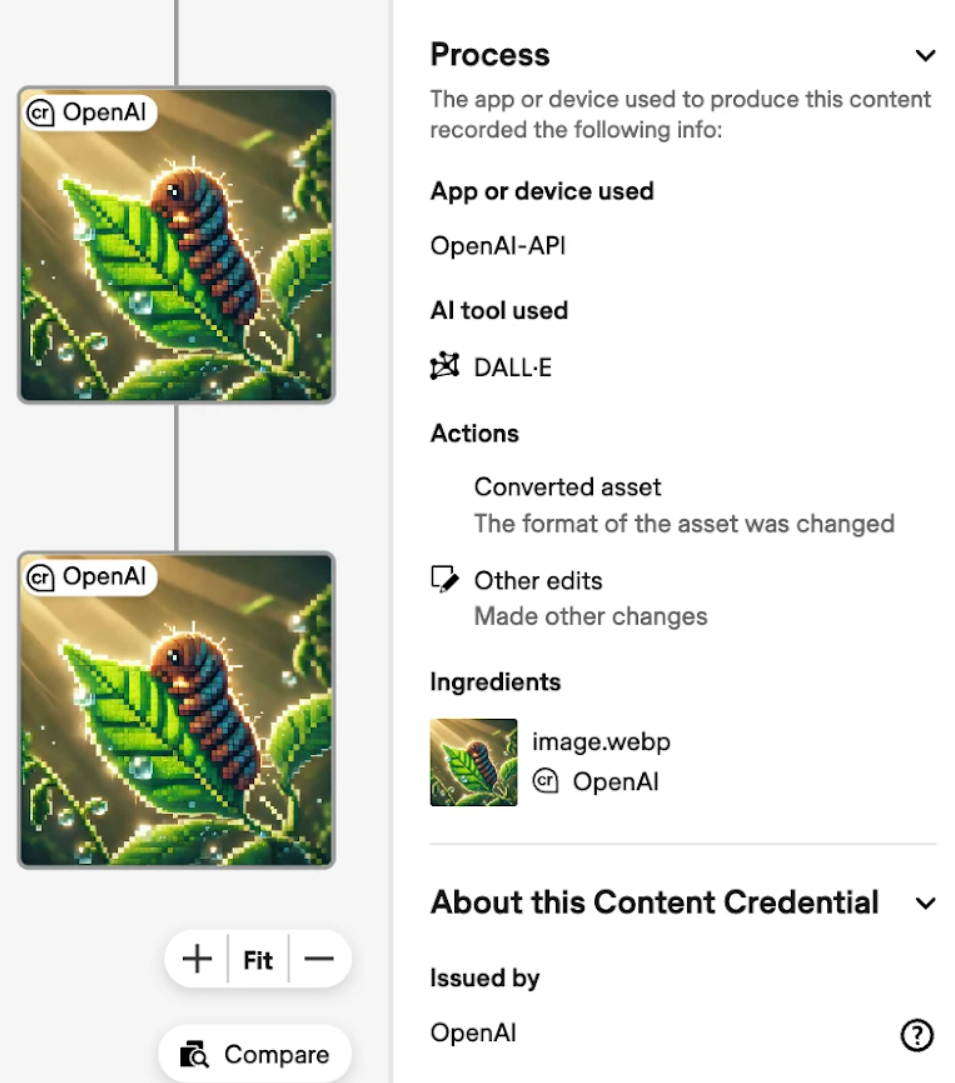

OpenAI says it is focusing on audiovisual content, which it considers higher risk. Its updated C2PA-based image provenance system for DALL-E 3 now tracks whether and how AI-generated images are edited after generation.

Original article from August 4, 2024:

OpenAI has developed technology to reliably detect AI-generated text, according to inside sources and documents reported by the Wall Street Journal. However, the company is reluctant to release it, likely due to concerns about its own business model.

The AI text detector project has been discussed internally for about two years and has been ready for use for around a year, the WSJ reports. Releasing it is reportedly "just a matter of pressing a button," but OpenAI is torn between transparency and user demands.

An internal survey found nearly one-third of ChatGPT users would be put off by a text detector. However, a global survey commissioned by OpenAI showed 4-to-1 support for AI detection and transparency.

The detector could particularly affect non-native English speakers, an OpenAI spokesperson said, and has "likely impact on the broader ecosystem beyond OpenAI." While "technically promising," it also has "important risks we’re weighing while we research alternatives," she said.

The detector provides an assessment of how likely it is that all or part of the document was written by ChatGPT. Given a sufficient amount of text, the method is said to be 99.9 percent effective.

It relies on watermarks that are invisible to humans but can be detected by OpenAI technology without affecting the quality of the text. However, it is unclear how reliable it is after extensive editing, back-and-forth translation, or the addition/removal of emoji.
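How a statistical text watermark can be detected, and why more text helps, can be illustrated with a toy example. This sketch uses a publicly discussed "green list" idea purely for illustration; it is not OpenAI's method, which has not been published. The key, the vocabulary, and all function names are assumptions.

```python
import hashlib
import math
import random

KEY = "demo-watermark-key"  # hypothetical secret shared by generator and detector
VOCAB = ["the", "a", "model", "text", "writes", "reads", "small", "large",
         "quickly", "slowly", "and", "or", "data", "word", "new", "old"]


def is_green(prev_token: str, token: str) -> bool:
    """Pseudorandomly mark about half of all (previous, next) token pairs as 'green'."""
    digest = hashlib.sha256(f"{KEY}|{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] % 2 == 0


def generate_watermarked(length: int, seed: int = 0) -> list[str]:
    """Toy generator that always prefers a green next token when one exists."""
    rng = random.Random(seed)
    tokens = [VOCAB[0]]
    while len(tokens) < length:
        green = [w for w in VOCAB if is_green(tokens[-1], w)]
        tokens.append(rng.choice(green or VOCAB))
    return tokens


def z_score(tokens: list[str]) -> float:
    """Standard deviations by which the green count exceeds the 50 percent chance level."""
    n = len(tokens) - 1
    if n < 1:
        return 0.0
    hits = sum(is_green(prev, cur) for prev, cur in zip(tokens, tokens[1:]))
    return (hits - 0.5 * n) / math.sqrt(0.25 * n)


print(f"z-score, 20 tokens:  {z_score(generate_watermarked(20)):.1f}")
print(f"z-score, 200 tokens: {z_score(generate_watermarked(200)):.1f}")
```

The bias is invisible in any single word choice, but the score grows with the number of tokens, which is consistent with the claim that the method needs a sufficient amount of text. Because the score depends on adjacent token pairs, edits that change those pairs, such as translation or inserting characters between words, erode the signal in this toy scheme.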

In addition, OpenAI can only implement the method for ChatGPT and its own models, potentially driving users to other language models in the absence of an industry standard for AI transparency. So it could hurt OpenAI's business without solving the overall transparency problem.

OpenAI staff have also discussed offering the detector directly to educators, or to outside companies that help schools and businesses identify AI-authored work and plagiarism, to mitigate the damage done by text generation.

OpenAI previously released an AI text detector, but it was unreliable. The company took it offline in the summer of 2023.

AI text recognition could threaten OpenAI's business model

The tool stems from work done by computer science professor Scott Aaronson during a two-year safety project at OpenAI. At the end of 2022, Aaronson said that OpenAI planned to present the work in more detail in the coming months, but nothing has happened yet.

OpenAI is said to have last discussed the watermarking method in early June, deciding to evaluate less controversial alternatives. By fall, the company wants to have an internal plan in place to influence public opinion and legislation on the issue. Without this plan, the company's "credibility as responsible actors" would be at risk.

A working text detector could threaten OpenAI's business model if people devalue AI-generated text. One study suggests that this happens in private messages, where the human touch is valued. Another study showed that even when AI text is of higher quality, people still prefer human advice when given the choice.

However, AI-generated text is becoming more common in search results, and some, including OpenAI with SearchGPT, are betting that this kind of AI text could have considerable commercial value as an omniscient answering machine.

So it may just be a matter of getting used to it. Over time, as AI writing becomes routine, the focus may shift from how the text was created to its content and the author's intent. If "AI-generated" becomes a neutral descriptor instead of a swear word, a reliable detector could serve mainly to prevent harmful uses.

A detector could also help schools prove the use of ChatGPT in essays and slow the disruption of education by AI, which could be good or bad depending on your perspective. It could also help detect automated disinformation campaigns, which is certainly a good thing.
