OpenAI has removed a section from its terms of service that explicitly prohibited the use of its technology for military purposes.

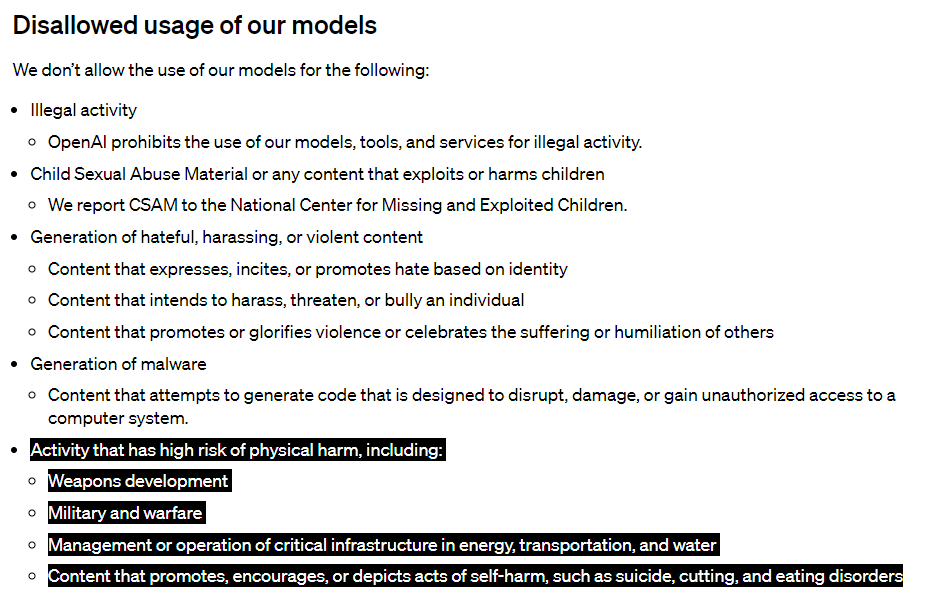

The previous policy explicitly prohibited the use of OpenAI technologies and ChatGPT for activities that pose a high risk to physical safety, including weapons development and military warfare.

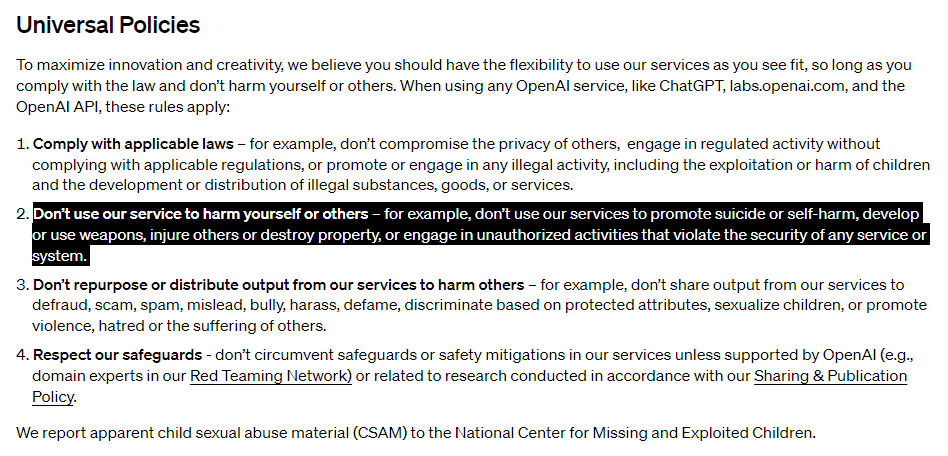

The revised policy includes a clause prohibiting the use of the service to harm oneself or others, citing weapons development as an example. However, military and warfare are no longer explicitly mentioned.

In the previous version, "military and warfare" was explicitly listed among the prohibited uses.

The change is part of a larger revision to make the document clearer and easier to read, OpenAI spokesperson Niko Felix said in an email to The Intercept. The goal is to "create a set of universal principles that are both easy to remember and apply."

Felix would not comment on whether the general ban on harming others rules out all military use.

However, he emphasized that the rules also apply to the military: it may not use OpenAI technologies to develop or use weapons, harm others or destroy property, or engage in unauthorized activities that compromise the security of a service or system.

AI enters the battlefield

The implications of this policy change are unclear, but it comes at a time when the Pentagon and US intelligence agencies are showing a growing interest in AI technologies.

With this change, OpenAI may at least be signaling a willingness to talk and a softening of its previously strict stance, for example by opening the door to supporting military operational infrastructure. The military could potentially use a system like ChatGPT to generate text that speeds up or automates the flow of information.

AI has been used in the military sector for years. In 2019, the Pentagon announced in a strategy paper that AI would permeate all military domains in the future.

China's activities and the war in Ukraine are believed to have accelerated this development. Possible applications include autonomous drones, which are already being used as weapons in the Ukraine war, and propaganda using deepfakes.