OpenAI partners with US military on cybersecurity projects

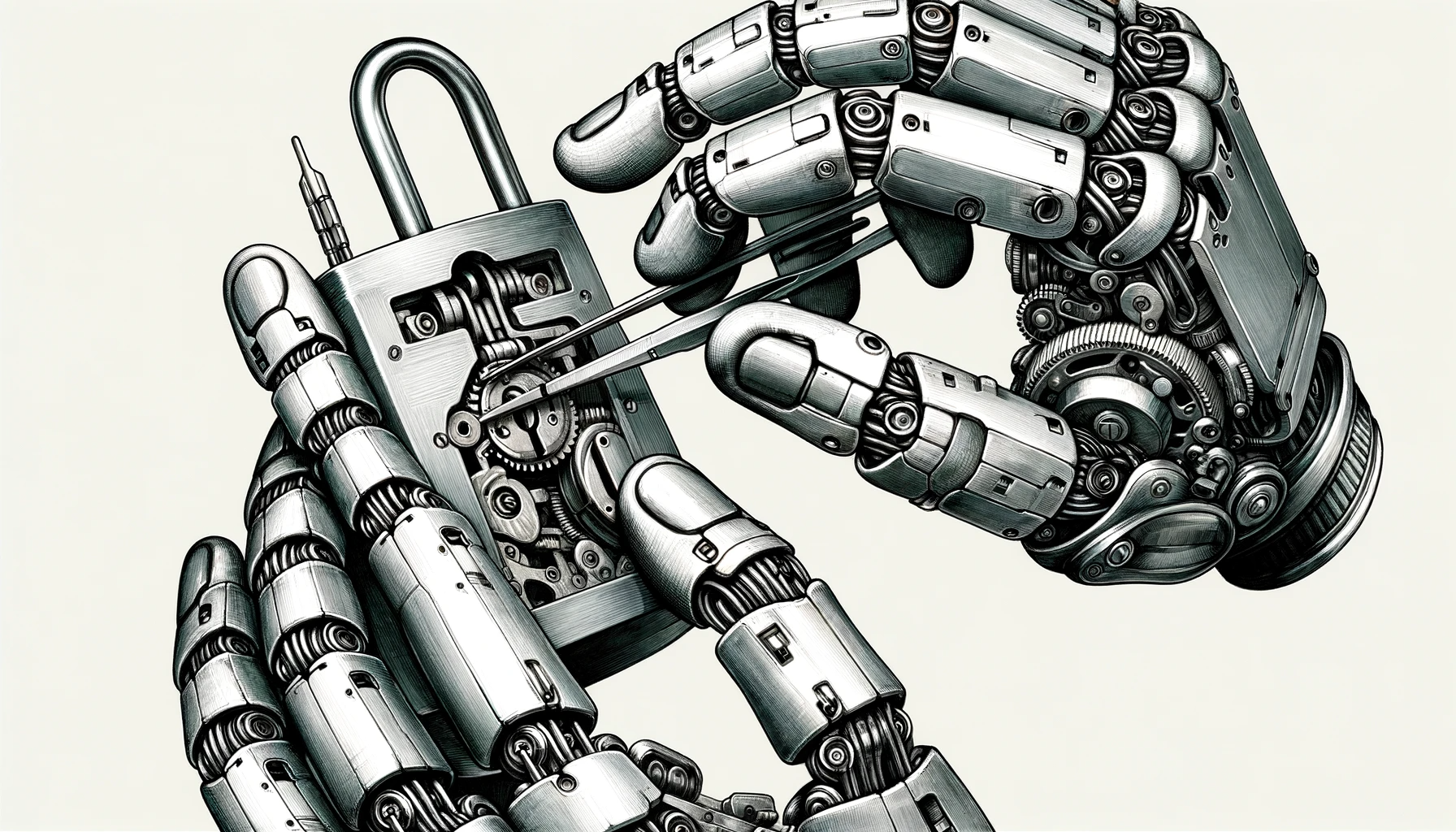

OpenAI is working with the Pentagon on several projects, including the development of open-source cybersecurity software.

A few days ago, OpenAI removed the language from its terms of service that prohibited the use of its AI for "military and warfare" applications.

Anna Makanju, vice president of global affairs at OpenAI, now tells Bloomberg that this decision is part of a broader policy update to account for new applications of ChatGPT and other tools.

According to Makanju, OpenAI is in early talks with the U.S. government about suicide prevention methods for combat veterans, which would mean using ChatGPT in a therapeutic context. OpenAI is also working with the U.S. Department of Defense on open-source cybersecurity software.

Uses in cybersecurity, medicine, and therapy, including in a military context, are in line with OpenAI's new guidelines, which no longer explicitly prohibit military use but do prohibit using AI to harm people or develop weapons.

"Because we previously had what was essentially a blanket prohibition on military, many people thought that would prohibit many of these use cases, which people think are very much aligned with what we want to see in the world," Makanju says.

OpenAI addresses societal issues

OpenAI has also recently unveiled new initiatives to combat the malicious use of AI in elections, including a tool for identifying images generated by DALL-E 3.

Another initiative aims to democratize AI models so that they reflect a broader political spectrum. OpenAI has also created a new team to develop systems that capture public opinion and feed it into its AI models.
