OpenAI pilots Aardvark for automated security reviews in code

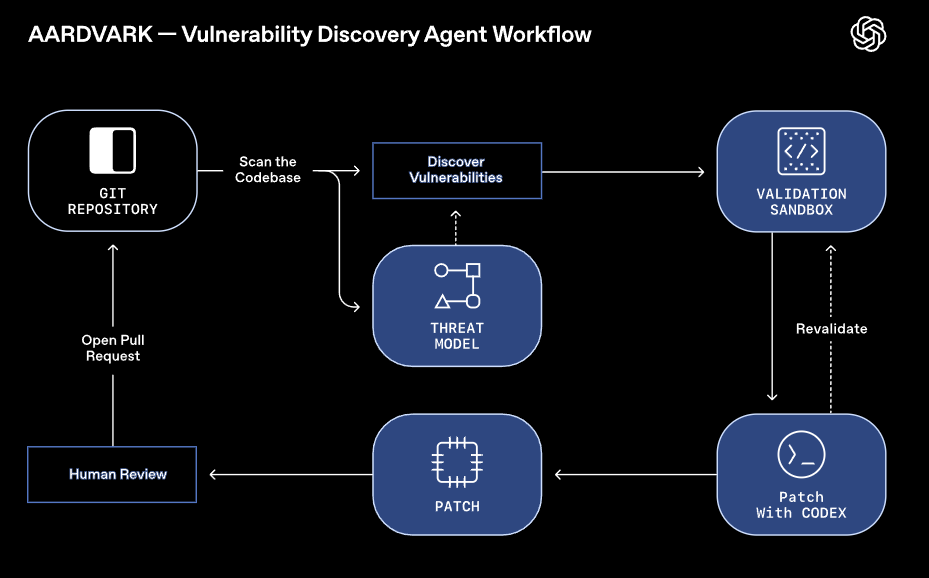

OpenAI is piloting Aardvark, a security tool built on GPT-5 that scans software code for vulnerabilities. The system is designed to work like a security analyst: it reviews code repositories, flags potential risks, tests whether vulnerabilities can be exploited in a sandbox, and suggests fixes.

In internal tests, OpenAI says Aardvark found 92 percent of known and intentionally added vulnerabilities. The tool has also been used on open source projects, where it identified several issues that later received CVE (Common Vulnerabilities and Exposures) numbers.

Aardvark is already in use on some internal systems and with selected partners. For now, it is available only in a closed beta, which developers can apply to join. Anthropic offers a similar open source tool for its Claude model.
