The OpenAI drama continues: Greg Brockman has described how he and Sam Altman were pushed out, a sequence of events he says he is still trying to reconstruct. The driving force behind the move was apparently OpenAI co-founder and chief scientist Ilya Sutskever, who leads the company's work on AI safety and alignment.

According to The Information, the upheaval was triggered by an internal dispute over AI safety policy. Sutskever, who was involved in the firing, also heads the team responsible for aligning a potential artificial superintelligence.

The speculation is that Altman pushed the commercialization of AI too hard at the expense of safety. That would conflict with OpenAI's core goal as a research organization: developing safe AGI for the benefit of humanity.

OpenAI has had some recent security issues

Recent events would fit this theory. OpenAI has been hit by cyberattacks that caused outages, and its partner Microsoft even briefly blocked employee access to ChatGPT, citing security concerns.

One user showed how to bypass the rules of OpenAI's DALL-E 3 image generator with a simple text file that purported to be a message from OpenAI CEO Sam Altman. OpenAI's GPT-4 vision system also has security weaknesses and was manipulated by users shortly after its release.

OpenAI's new GPTs have also been criticized from a security perspective. Files uploaded to a GPT can be downloaded by other users without the creator's knowledge, and a notice warning creators about this was only added after launch.

Safety advocates like Sutskever may see such incidents as confirmation that rushing products to market undermines safety.

Ilya Sutskever comments internally on Altman's firing

At an internal staff meeting about Altman's firing, Sutskever implicitly confirmed those rumors, reports The Information.

The firing was not a "coup" or a "hostile takeover," Sutskever told staff, but the board fulfilling its responsibility.

"This was the board doing its duty to the mission of the nonprofit, which is to make sure that OpenAI builds AGI that benefits all of humanity," Sutskever said.

He also acknowledged that there was "a not ideal element" to the way Altman was removed.

Sutskever believes that artificial general intelligence (AGI) is achievable and will change the course of humanity. In early October, he wrote on X (formerly Twitter): "If you value intelligence above all other human qualities, you're gonna have a bad time."

Interim CEO Mira Murati, who previously served as CTO and has led many of the company's teams in various roles, stressed in a memo to employees "our mission and ability to develop safe and beneficial AGI together."

The ouster may have been intended to slow OpenAI's push into the commercial space. Altman has also recently expressed skepticism that scaling up large language models is enough to reach AGI. Sutskever may see this differently, possibly fearing that Altman underestimates the potential of scaled LLMs.

Brockman and Altman "shocked and saddened"

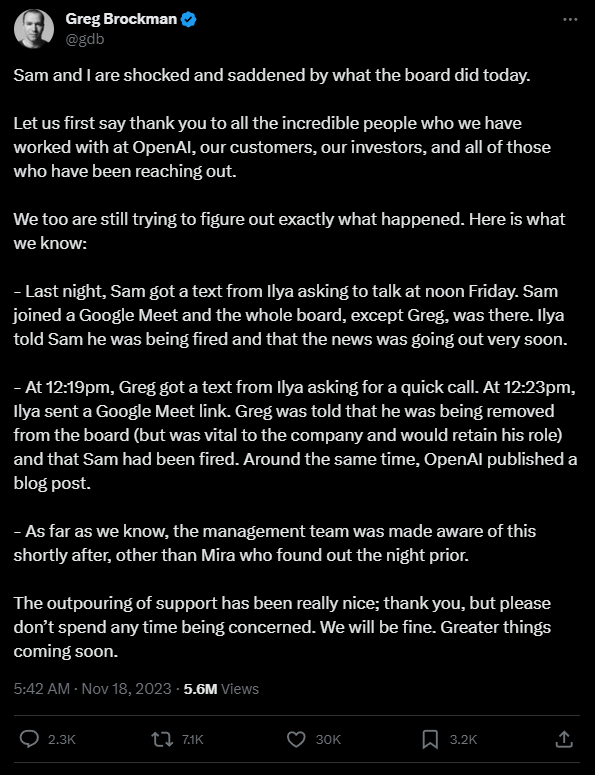

"Sam and I are shocked and saddened by what the board did today," Brockman writes. Altman calls it a "weird experience on many levels."

Brockman's side of the story goes like this: on Thursday evening, OpenAI chief scientist and board member Ilya Sutskever asked Sam Altman to join a meeting at noon on Friday. On that Google Meet call, attended by the whole board except Greg Brockman, Altman was told he was being fired and that the news would be made public soon. Altman did not participate in the vote.

Shortly afterward, on another Google Meet call, Brockman was told that Altman had been fired and that he himself had been removed from the board, but that he could stay at OpenAI because he was important to the company. The rest of the management team was informed after that; interim CEO Mira Murati had been told the night before, Brockman writes.

A few hours later, Brockman quit OpenAI of his own accord. He already has his eye on the next step: "Greater things coming soon."

OpenAI loses more researchers

Following Altman's dismissal, Jakub Pachocki, the company's director of research, Aleksander Madry, head of a team that assesses the potential risks of AI, and long-time researcher Szymon Sidor are also said to have left OpenAI, The Information reports, citing well-informed sources.

Pachocki and Sidor both worked on the development of GPT-4.

Microsoft learned of Altman's firing just before the announcement, but CEO Nadella remains "committed to our partnership"

Prominent investors, including Vinod Khosla and Reid Hoffman, were also surprised by Altman's ouster. Four sources with direct knowledge of the situation told Forbes that major venture capitalists were not given a heads-up by OpenAI.

Microsoft, which has invested more than $10 billion in OpenAI, learned of Altman's firing and departure from the board just a minute before the news was posted on the OpenAI blog, according to an Axios report.

Microsoft CEO Satya Nadella responded to the news on LinkedIn without mentioning Altman. Instead, he emphasized Microsoft's commitment to AI development, as demonstrated at its Ignite conference, and stressed the company's long-term partnership with OpenAI.

"We have a long-term agreement with OpenAI with full access to everything we need to deliver on our innovation agenda and an exciting product roadmap; and remain committed to our partnership, and to Mira and the team. Together, we will continue to deliver the meaningful benefits of this technology to the world."

Satya Nadella, CEO of Microsoft

In recent months, Microsoft has repeatedly set a poor example for the safe use of AI. On its news portal MSN.com, for instance, it replaced human editors with AI that then spread fake news.

A recent study by AlgorithmWatch and AI Forensics, in collaboration with Swiss broadcasters SRF and RTS, found that Microsoft's Bing Chat gave incorrect answers to questions about the upcoming elections in Germany and Switzerland.

The numerous failures of the Bing chatbot, aka "Sydney," during its rushed rollout earlier this year, aimed at winning search market share from Google, were likely also a thorn in the side of safety advocates at OpenAI. OpenAI reportedly warned Microsoft against prematurely deploying the chatbot, which was built on the then-unreleased GPT-4, without further training.