Nvidia teams up with venture capital firms to find and fund India's next wave of AI startups

Nvidia is rapidly expanding its partnerships in India. According to CNBC, the chipmaker is working with major venture capital firms to find and fund Indian AI startups. More than 4,000 AI startups in India are already part of Nvidia's global startup program.

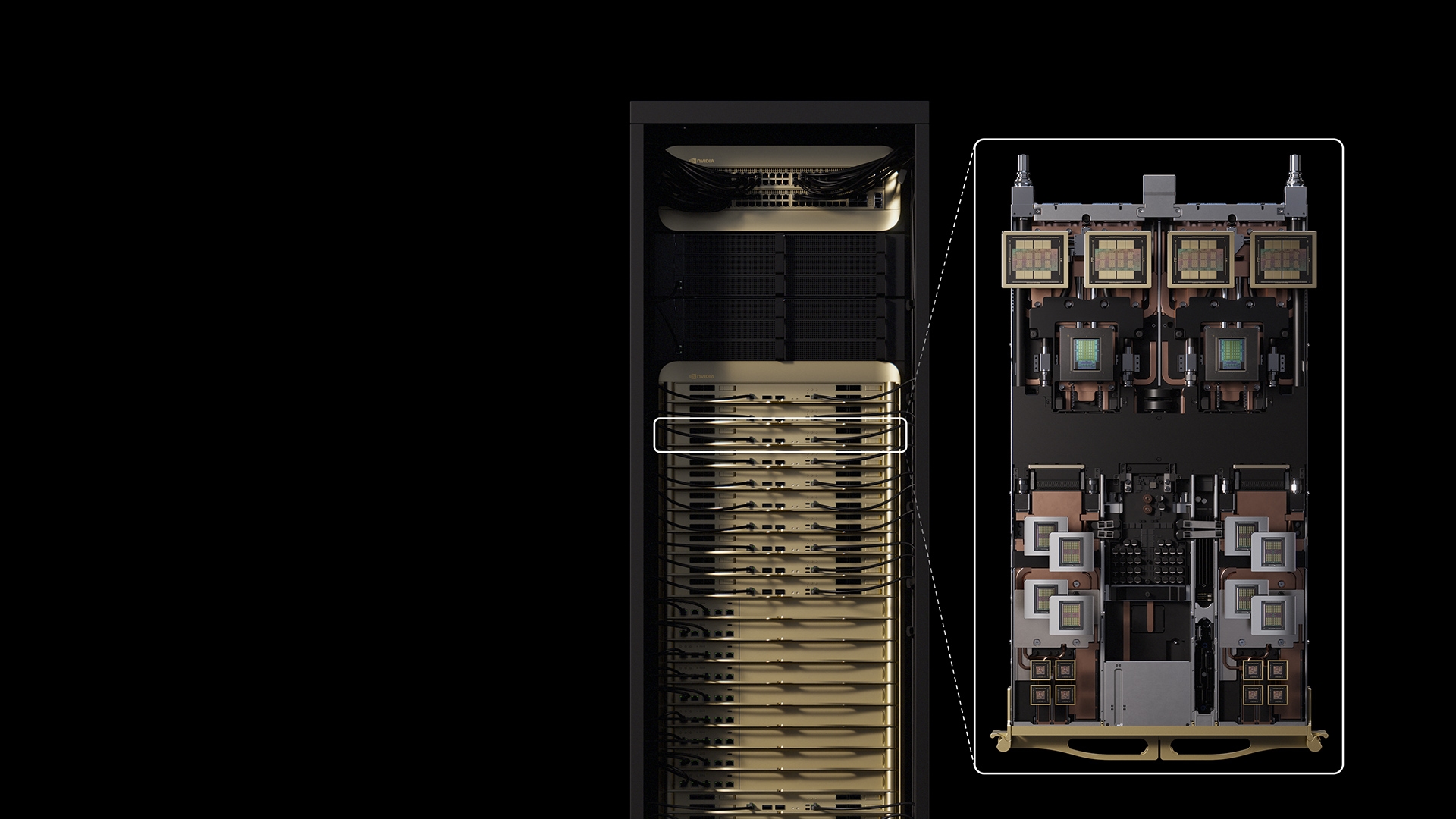

At the same time, Indian cloud provider Yotta has invested roughly $2 billion in Nvidia chips, the Economic Times reports. Nvidia is also partnering with Indian cloud providers to build out data center infrastructure.

The Indian government expects up to $200 billion in data center investments over the coming years. Adani alone is planning to spend $100 billion on AI-capable data centers. These efforts are all part of India's "IndiaAI Mission," a government initiative aimed at turning the country into a global technology powerhouse.

Source: CNBC | Economic Times

Warner Bros. says ByteDance deliberately trained Seedance on its characters, adding to growing Hollywood backlash

Warner Bros. is accusing ByteDance of copyright infringement over Seedance 2.0, ByteDance's new AI video service. The studio sent a letter on Tuesday to ByteDance's chief legal officer John Rogovin, who previously worked at Warner Bros. himself.

Users had been using Seedance to create AI videos featuring Superman, Batman, "Game of Thrones," "Harry Potter," "Lord of the Rings," and other Warner properties. Warner Bros. stresses that the users aren't the problem: according to the studio, Seedance came preloaded with its copyrighted characters, and that was a deliberate choice by ByteDance.

Disney and Paramount had already sent cease-and-desist letters before Warner's move. ByteDance responded by announcing additional safeguards. Warner Bros. argues, however, that these easily implementable protections should have been in place from the start. This has become a familiar pattern - OpenAI keeps discovering copyright issues only after shipping its models, too.

Anthropic and Infosys team up to build AI agents for regulated industries

Anthropic and Indian IT giant Infosys are jointly developing AI agents for regulated industries. The partnership focuses on telecommunications, finance, manufacturing, and software development. The agents are designed to handle complex tasks autonomously - like processing insurance claims, running compliance checks, or automating network operations for telecom providers.

The project combines Anthropic's Claude models with Infosys Topaz, an enterprise AI platform. Anthropic CEO Dario Amodei said there's a big gap between an AI demo and real deployment in regulated industries, and that Infosys brings the domain expertise needed to close it.

India is Anthropic's second-largest market for Claude, according to the company. Infosys is one of the first partners for Anthropic's new office in Bengaluru.

Context files for coding agents often don't help - and may even hurt performance

Context files are supposed to make coding agents more productive. New research shows that only works under very specific conditions.

German Wikipedia bans AI-generated content while other language editions take a softer approach

The German-language Wikipedia community has passed a sweeping ban on AI-generated content. The move puts it at odds with other Wikipedia language editions and the Wikimedia Foundation, which favor a less restrictive approach.

India's Adani Group plans $100 billion bet on AI data centers powered by renewable energy

Indian conglomerate Adani plans to invest roughly $100 billion by 2035 in AI-capable data centers powered by renewable energy. The Adani Group's businesses range from ports to energy. According to Reuters, the company expects the investment to trigger an additional $150 billion of spending in related industries like server manufacturing and cloud platforms, which would add up to a $250 billion AI infrastructure ecosystem in India.

Adani aims to expand its data center capacity from 2 to 5 gigawatts. On top of that, the company is putting $55 billion into renewable energy. Adani already works with Google and is building a second AI data center with Walmart subsidiary Flipkart. Amazon, Meta, Microsoft, and Reliance are also investing in India's AI infrastructure. The announcement came during the India AI Impact Summit 2026.

Irish data protection authority opens investigation into AI-generated deepfakes on Musk's X

Ireland's Data Protection Commission (DPC) has launched a comprehensive investigation into Elon Musk's platform X. The probe focuses on AI-generated sexualized images of real people, including children, created using the Grok chatbot integrated into X.

The DPC is examining whether X violated core obligations under the EU's General Data Protection Regulation (GDPR) - including lawful data processing, data protection by design, and the requirement to conduct a data protection impact assessment before launching risky features. Deputy Commissioner Graham Doyle said the authority has been in contact with X since the first media reports surfaced several weeks ago.

In early January, users created thousands of sexualized deepfakes using Grok, sparking sharp criticism from users, security experts, and politicians, along with multiple regulatory investigations.

Source: Dataprotection