Dozens of protesters from the groups Pause AI and No AGI gathered at OpenAI's headquarters in San Francisco to rally against the creation of more advanced AI.

The demonstration was in part a response to OpenAI's recent decision to remove from its terms of service the clause prohibiting the use of its AI for military purposes. The company is reportedly working with the Pentagon on cybersecurity projects.

Both groups are calling for an end to OpenAI's relationship with the military and for external regulation of AI development.

The protesters' main concern, however, is the development of Artificial General Intelligence (AGI), which could lead to a future in which machines surpass human intelligence. Both groups are calling on OpenAI to stop working on advanced AI systems and to slow or even stop the development of AGI.

They argue that AI could fundamentally change power dynamics and decision-making in society, costing people their jobs and eroding the sense of meaning in human life, VentureBeat reports.

Reporting from the protest at OpenAI pic.twitter.com/SYbXKYq43b

— Garrison Lovely is in SF til Feb 12 (@GarrisonLovely) February 13, 2024

According to VentureBeat, the groups are planning further action, although they are not entirely united on the issue of AGI: Pause AI is open to the idea of AGI if it can be developed safely, while No AGI is strongly opposed to its development.

If you want to dive deeper into their arguments, you can read Pause AI's "Protester Guide" and its messaging guide.

The idea of pausing AI development was first raised publicly when a group of researchers and industry leaders published the AI Pause Letter. Currently, however, the pro-AI camp seems to be gaining the upper hand.

Governments are attempting to limit the potential negative impacts of widespread AI deployment through regulation. The US is leading the way with its AI executive order, and the EU with its recently passed AI Act.

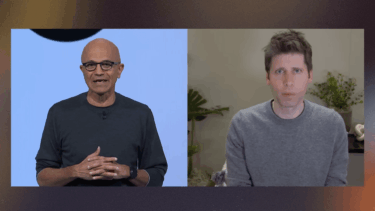

OpenAI CEO Sam Altman has pointed out in the past that the line between AI and AGI is fluid: a decade ago, many people would have considered systems like GPT-4 to be AGI, whereas today they are seen as just "nice little chatbots." Humanity needs to agree on a definition of AGI within the next decade, Altman said.

Altman also believes that scaling large language models is unlikely to lead to AGI, and that another breakthrough is needed. A true superintelligence must be able to discover new phenomena in physics, he said recently. According to Altman, this cannot be achieved simply by training on known data and imitating human behavior and text.

Among other things, OpenAI has established a "Preparedness Team" to improve the safety of AI and prevent potentially catastrophic risks from foundation models. The team is addressing critical issues such as the risk of misuse of leading AI systems, the development of a robust framework for monitoring and evaluating these systems, and the potential impact of model weights falling into the hands of malicious actors.