People distrust headlines labeled as "AI-generated"

According to a recent study, people view headlines labeled as "AI-generated" as less trustworthy and are less likely to share them - even if the content is accurate or was created by humans. They assume the articles were written entirely by AI.

Researchers at the University of Zurich conducted two experiments with nearly 2,000 participants to examine how labeling headlines as "AI-generated" affects perceived accuracy and willingness to share.

The results show that labeling a headline as AI-generated reduced both its perceived credibility and participants' willingness to share it, regardless of whether the content was accurate or whether it was actually created by AI or by humans. Even human-written headlines falsely labeled as AI-generated were viewed just as skeptically as genuine AI headlines.

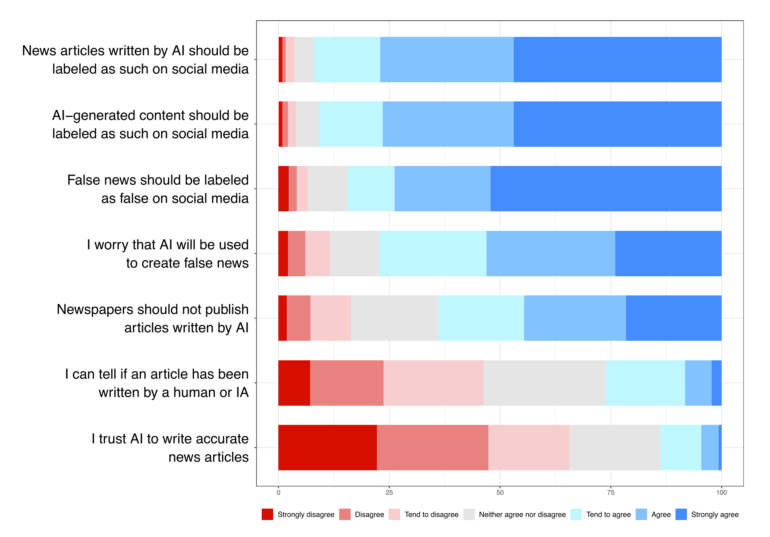

In a survey, very few participants said they could tell at a glance whether an article was written by a human or a machine, and very few said they trust AI to write accurate news articles.

The second study explored reasons for this mistrust. Researchers explained to participants what "AI-generated" means, from a weak definition where AI only improved clarity and style, to a strong definition where AI chose the topic and wrote the entire article.

Skepticism occurred only when participants weren't given a definition or were presented with the "strong" definition. The researchers conclude that people assume headlines labeled as AI-generated were created entirely by AI.

Recommendations for news organizations

The study authors caution against simplistic use of AI labels, as these could deter useful AI content. They suggest harmful AI content should be explicitly marked instead of just labeled as AI-generated. Transparency about label meanings is also important to prevent false assumptions.

These findings align with other recent studies showing general skepticism toward AI-generated news. One study found similar sharing willingness for AI-generated and human-generated fake news, though both were seen as less credible than real news.

A Reuters Institute survey also revealed most people consider AI-generated news less valuable than human-written content.

Experts recommend news organizations use AI thoughtfully, especially for support tasks and new presentation formats. Caution is needed when creating content. Transparent communication about AI use is crucial - and all content, whether created with or without AI, should be human-verified. Otherwise, organizations risk losing audience trust.

AI content labeling is important not just in news, but also on social media. Meta now allows users to add an "AI info" tag to posts. However, the tag leaves unclear how much AI contributed to an image: whether it was fully generated, had its sky enhanced, or only had minor elements removed.
