Perplexity uses Deepseek-R1 to offer Deep Research 10 times cheaper than OpenAI

Perplexity has launched its version of Deep Research, joining Google and OpenAI in offering advanced AI-powered research capabilities.

Perplexity says the new tool conducts comprehensive research automatically, running dozens of searches and analyzing hundreds of sources to produce detailed reports in one to two minutes, a task that typically takes a human several hours.

The system works through an iterative process: it searches for information, reads documents, and plans its next research steps based on what it finds. Users can export final reports as PDFs or share them via Perplexity Pages.

Video: Perplexity

The service is launching first on the web, with iOS, Android, and Mac versions to follow. Basic access is free, but non-subscribers face daily query limits. Perplexity says the tool works particularly well for research in finance, marketing, and technology.

Deepseek enables cheaper Deep Research

Deep Research likely runs on a customized version of Deepseek's R1 reasoning model. According to CEO Aravind Srinivas, this allows Perplexity to "easily" offer the service at "10-100x lower pricing" than the competition: while OpenAI charges $200 per month for 100 queries, Perplexity offers 500 queries per day for $20 per month. OpenAI has already announced that a cheaper version of Deep Research will be released in the coming weeks.
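To make the pricing comparison concrete, the short calculation below converts both plans into a price per query. It is a rough sketch, not Perplexity's or OpenAI's own accounting: the prices and quotas come from the figures above, while the 30-day month and the assumption that subscribers use their entire quota are our own.

```python
# Rough sketch: effective price per Deep Research query at full quota use.
# Prices and quotas are taken from the article; DAYS_PER_MONTH and the
# "every query in the quota is used" premise are assumptions.

DAYS_PER_MONTH = 30  # assumption, not stated in the article


def cost_per_query(monthly_price_usd: float, queries_per_month: int) -> float:
    """Return the effective price of one query in US dollars."""
    return monthly_price_usd / queries_per_month


# OpenAI: $200 per month for 100 Deep Research queries
openai_cost = cost_per_query(200.0, 100)

# Perplexity: $20 per month for 500 queries per day
perplexity_cost = cost_per_query(20.0, 500 * DAYS_PER_MONTH)

# How many times more OpenAI charges per query under these assumptions
ratio = openai_cost / perplexity_cost

print(f"OpenAI:     ${openai_cost:.4f} per query")
print(f"Perplexity: ${perplexity_cost:.4f} per query")
print(f"Price ratio at full quota use: about {ratio:.0f}x")
```

The ratio depends heavily on usage: it assumes both quotas are exhausted, and lighter use on the Perplexity side shrinks the gap considerably. The free tier for non-subscribers is not modeled.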

Perplexity's Deepseek-based version of Deep Research scored 20.5 percent accuracy on "Humanity's Last Exam", a comprehensive AI benchmark with more than 3,000 questions, placing it just behind OpenAI's o3-based Deep Research.

Unfortunately, Perplexity is once again publishing misleading results for OpenAI's SimpleQA benchmark, which poses difficult factual questions that models are supposed to answer from their own trained knowledge.

Perplexity compares its own internet-connected service against models that answer the questions from trained knowledge alone, so it naturally achieves significantly better results. For a company whose product has been criticized for being less than truthful, less-than-truthful advertising does little to build confidence.

Checking the AI's wall of text

Like all so-called "answer engines", with or without Deep Research, Perplexity produces falsehoods and inaccuracies in its reports. It is up to humans to verify and validate the results.

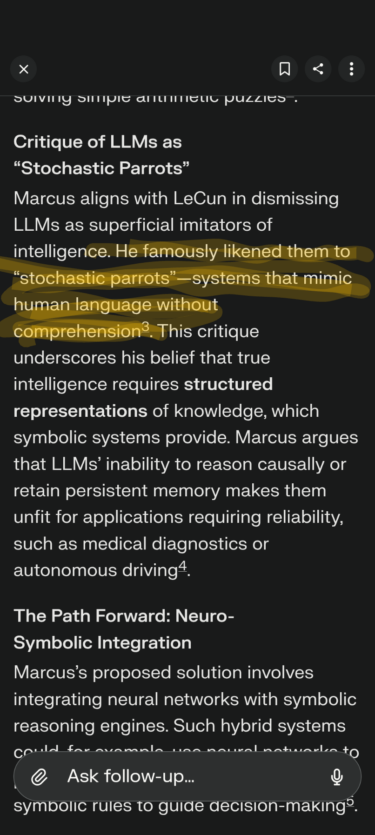

The challenge is that these errors can be very subtle and buried in large amounts of text, as in this example, where LLM critic Gary Marcus is credited with a paper that calls LLMs "stochastic parrots". The label is certainly in line with Marcus's views, and he may have used the term before. But he did not write the paper.

Perplexity has not responded to repeated inquiries about whether it systematically studies error rates in its AI responses and, if so, how high they are. Google, Microsoft, and OpenAI do not answer this question either.

Get the latest AI news from The Decoder.