Researchers advance generative AI for 3D modeling with 3D Gaussian Splatting

Advances in 3D reconstruction are enabling high-quality text-to-3D modeling, creating a 3D model from text in less than half an hour.

In August, a team presented 3D Gaussian Splatting, a new AI method that enables high-quality, real-time rendering of radiance fields. Using just a few dozen photos, it can render photorealistic 3D scenes in real time after a few minutes of training.

Since then, this method has attracted some attention in the field of 3D reconstruction and is accessible to everyone through applications such as Polycam.

Text-to-3D using diffusion and 3D Gaussian splatting

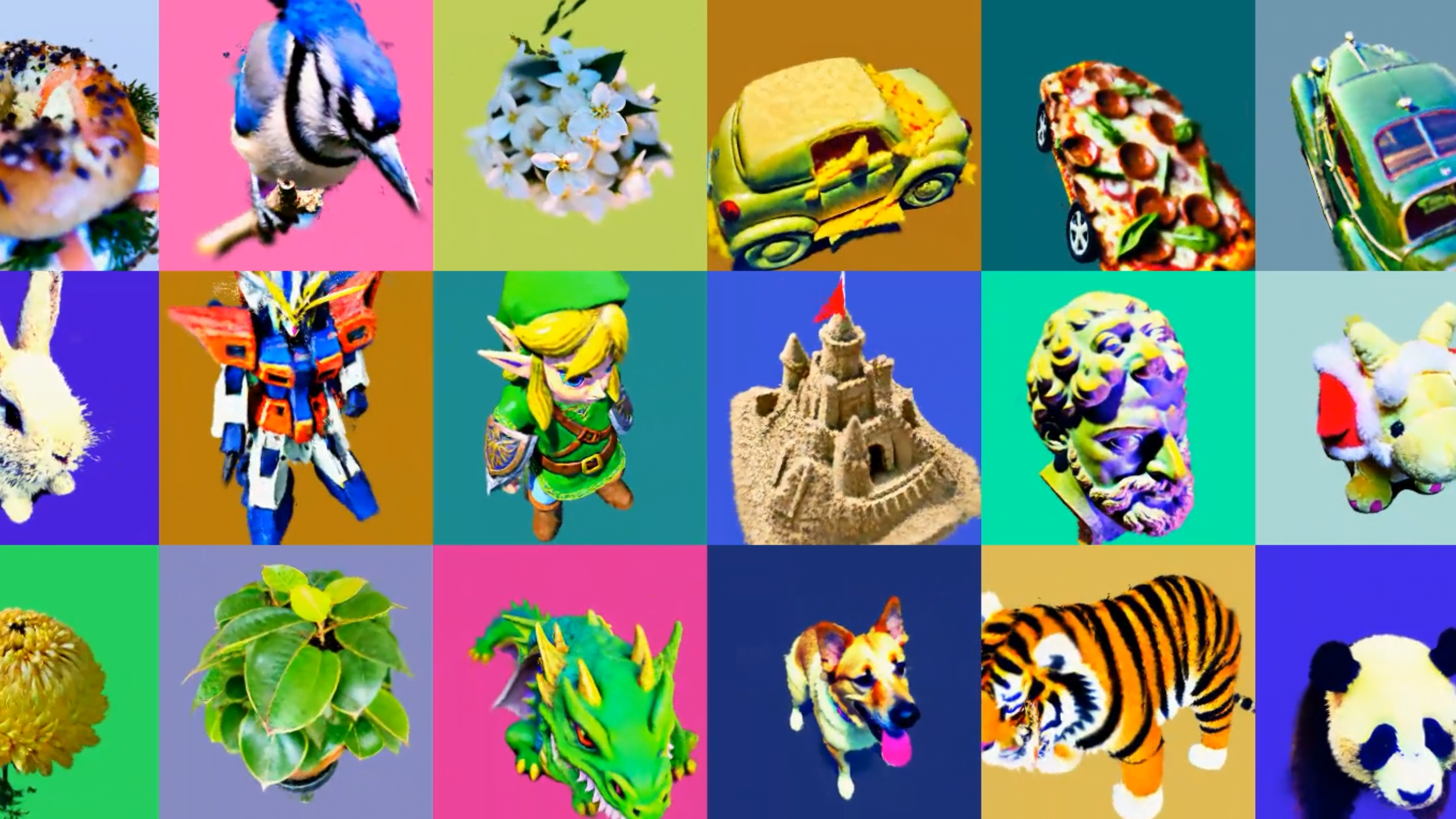

Initial work now uses 3D Gaussian splatting to generate 3D models from text descriptions. These approaches essentially follow known architectures: a 3D diffusion model such as OpenAI's Point-E generates a point cloud, which is used to initialize the 3D Gaussians. The geometry and appearance of the Gaussians are then further refined using 2D diffusion models for image generation.

Source: Chen et al.
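
To make the initialization step more concrete, here is a minimal sketch of how a point cloud could be turned into a set of 3D Gaussians. It is not taken from GSGen3D or GaussianDreamer: the helper name `init_gaussians_from_points`, the nearest-neighbor scale heuristic, and the starting opacity are assumptions chosen for illustration, and a random point cloud stands in for the output of a 3D diffusion model such as Point-E. The later refinement with a 2D diffusion model is not shown.

```python
import numpy as np


def init_gaussians_from_points(points, colors, init_opacity=0.1):
    """Build per-Gaussian parameters from an (N, 3) point cloud.

    Hypothetical helper for illustration only; requires at least two points.
    """
    points = np.asarray(points, dtype=np.float64)
    colors = np.asarray(colors, dtype=np.float64)
    n = points.shape[0]

    # Pairwise squared distances (brute force, fine for small point clouds).
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    np.fill_diagonal(dist2, np.inf)  # ignore each point's distance to itself

    # Assumed heuristic: isotropic scale equal to the nearest-neighbor
    # distance, so that neighboring Gaussians roughly touch.
    nearest = np.sqrt(dist2.min(axis=1))
    scales = np.repeat(nearest[:, None], 3, axis=1)

    # Identity rotation as a unit quaternion (w, x, y, z).
    rotations = np.tile(np.array([1.0, 0.0, 0.0, 0.0]), (n, 1))

    # Low starting opacity (assumed value); an optimizer would adjust it later.
    opacities = np.full((n, 1), init_opacity)

    return {
        "means": points,
        "scales": scales,
        "rotations": rotations,
        "colors": colors,
        "opacities": opacities,
    }


# Stand-in for a generated point cloud: 500 random points with random colors.
rng = np.random.default_rng(0)
points = rng.uniform(-1.0, 1.0, size=(500, 3))
colors = rng.uniform(0.0, 1.0, size=(500, 3))

gaussians = init_gaussians_from_points(points, colors)
print({name: value.shape for name, value in gaussians.items()})
```

In a full pipeline, these parameters would be the starting point that an optimizer refines under guidance from a 2D diffusion model.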

Similar approaches are used by DreamFusion and Magic3D. The 3D Gaussian methods, such as GSGen3D and GaussianDreamer, achieve similar or better results in much less time: while the older methods take several hours per object, the new methods generate 3D objects in less than an hour.

GSGen3D and GaussianDreamer are just the beginning

Further improvements in quality and speed are expected. The ability of current models to handle complex prompts is still limited, with one of the authors citing the low text comprehension of Point-E and CLIP in Stable Diffusion as a reason. Better variants of these models could enable more complex 3D models with more precise prompting, similar to what OpenAI's DALL-E 3 demonstrates.

More examples, the code, and more information can be found on the GSGen3D and GaussianDreamer project pages.
