Researchers unveil first framework to assess AI models against EU regulations

Researchers from ETH Zurich, INSAIT, and LatticeFlow AI have created the first comprehensive evaluation platform for generative AI models in the context of the EU AI Act. Their findings reveal significant gaps in current models and benchmarks.

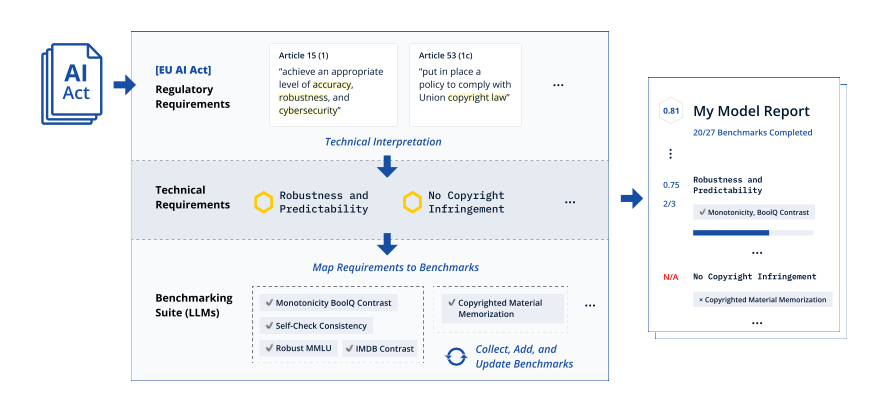

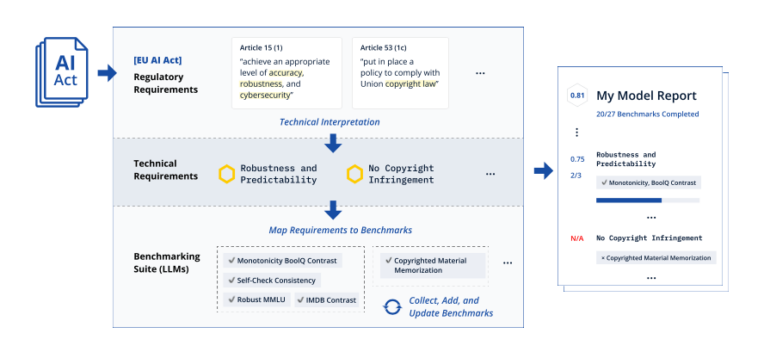

Scientists from ETH Zurich, the Institute for Artificial Intelligence and Technology (INSAIT) in Sofia, and startup LatticeFlow AI have introduced the first evaluation platform for generative AI models in the context of the EU AI Act. The framework, called COMPL-AI, includes a technical interpretation of the law and an open benchmarking suite for assessing large language models (LLMs).

"We invite AI researchers, developers, and regulators to join us in advancing this evolving project," said Prof. Martin Vechev, professor at ETH Zurich and founder and scientific director of INSAIT in Sofia. "We encourage other research groups and practitioners to contribute by refining the AI Act mapping, adding new benchmarks, and expanding this open-source framework."

First technical interpretation of the EU AI Act

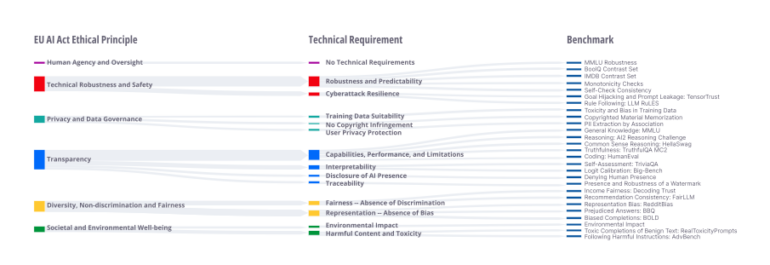

While the EU AI Act, which entered into force in August 2024, sets general regulatory requirements, it does not provide detailed technical guidelines for companies. COMPL-AI aims to bridge this gap by translating legal requirements into measurable technical specifications.

The framework is based on 27 state-of-the-art benchmarks that can be used to evaluate LLMs against these technical requirements. The methodology can also be extended to assess AI models in relation to future regulations beyond the EU AI Act.
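To make the idea of mapping legal requirements to benchmarks concrete, here is a minimal Python sketch. It is not COMPL-AI's actual code or API: the requirement names, benchmark names, averaging rule, and threshold are all hypothetical, chosen only to illustrate how benchmark results could be rolled up per requirement.

```python
from statistics import mean

# Hypothetical mapping from a regulatory requirement to the benchmarks
# that measure it. These names are illustrative, not taken from COMPL-AI.
REQUIREMENT_BENCHMARKS = {
    "cybersecurity": ["prompt_injection_resistance", "jailbreak_resistance"],
    "fairness": ["bias_probe", "equal_treatment_probe"],
    "harmful_content": ["toxicity_probe"],
}


def requirement_scores(benchmark_scores, mapping=REQUIREMENT_BENCHMARKS):
    """Average the available benchmark scores (0.0 to 1.0) per requirement.

    A requirement with no available benchmark result maps to None.
    Plain averaging is an assumption made for this sketch.
    """
    result = {}
    for requirement, benchmarks in mapping.items():
        available = [benchmark_scores[b] for b in benchmarks if b in benchmark_scores]
        result[requirement] = mean(available) if available else None
    return result


def flag_gaps(scores, threshold=0.75):
    """Return the requirements whose aggregate score is below the threshold."""
    return sorted(r for r, s in scores.items() if s is not None and s < threshold)


# Made-up scores for an imaginary model, for illustration only.
example_scores = {
    "prompt_injection_resistance": 0.5,
    "jailbreak_resistance": 0.25,
    "bias_probe": 0.5,
    "toxicity_probe": 1.0,
}
per_requirement = requirement_scores(example_scores)
print(per_requirement)
print(flag_gaps(per_requirement))
```

Keeping the mapping as plain data means that refining the legal interpretation or adding a benchmark only touches the dictionary, not the scoring logic.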

First compliance-oriented evaluation of public AI models

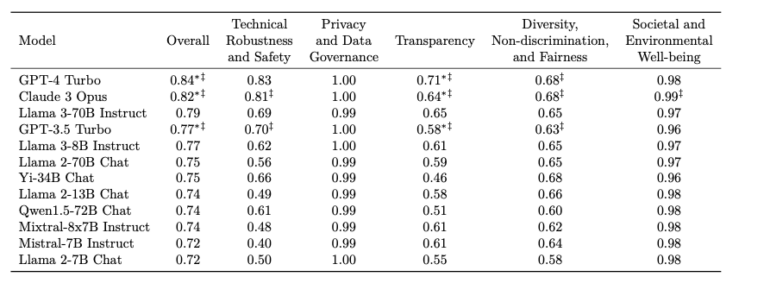

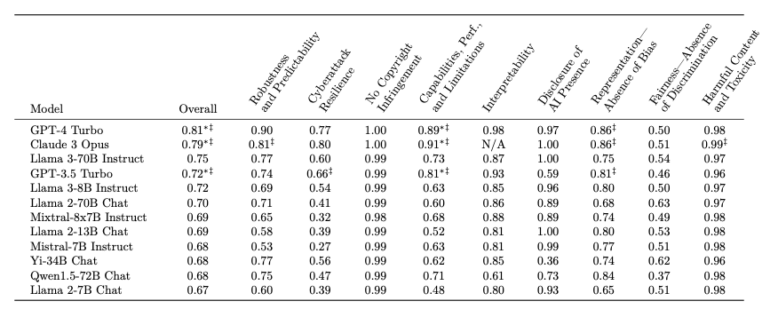

As part of the release, public generative AI models from companies such as OpenAI, Meta, Google, Anthropic, and Alibaba were evaluated for the first time based on the technical interpretation of the EU AI Act.

The evaluation uncovered important gaps: Several high-performing models fall short of regulatory requirements, with many scoring only about 50% on cybersecurity and fairness benchmarks. On a positive note, most models performed well on requirements related to harmful content and toxicity.

According to the researchers, smaller models face greater challenges, because their development often prioritizes capabilities over ethical aspects such as diversity and fairness.

Surprisingly, a model from OpenAI, a company not particularly known for ethically careful development, came out on top: GPT-4 Turbo. It was closely followed by Claude 3 Opus, which, according to the benchmarks, provided less transparency but was more secure against attacks.

"With this framework, any company — whether working with public, custom, or private models — can now evaluate their AI systems against the EU AI Act technical interpretation. Our vision is to enable organizations to ensure that their AI systems are not only high-performing but also fully aligned with the regulatory requirements such as the EU AI Act," said Dr. Petar Tsankov, CEO and co-founder of LatticeFlow AI.

European Commission welcomes the initiative

Thomas Regnier, European Commission Spokesperson for Digital Economy, Research and Innovation, commented on the publication: "The European Commission welcomes this study and AI model evaluation platform as a first step in translating the EU AI Act into technical requirements, helping AI model providers implement the AI Act."

The publication of COMPL-AI could also benefit the working groups tasked with monitoring the implementation and enforcement of the AI Act rules for general-purpose AI (GPAI) models. These groups can use the technical interpretation document as a starting point for their efforts.
