Runway’s Aleph model allows filmmakers to edit existing video using text prompts

Runway's new Aleph model takes a different approach to AI video editing, letting users manipulate existing footage through text prompts instead of generating everything from scratch. The goal is to streamline post-production and give filmmakers more control over their material.

One of Aleph's standout features is the ability to generate entirely new camera angles for a video. With prompts like "Generate a medium full shot of the subject," Aleph creates fresh perspectives, giving editors what Runway calls "endless coverage."

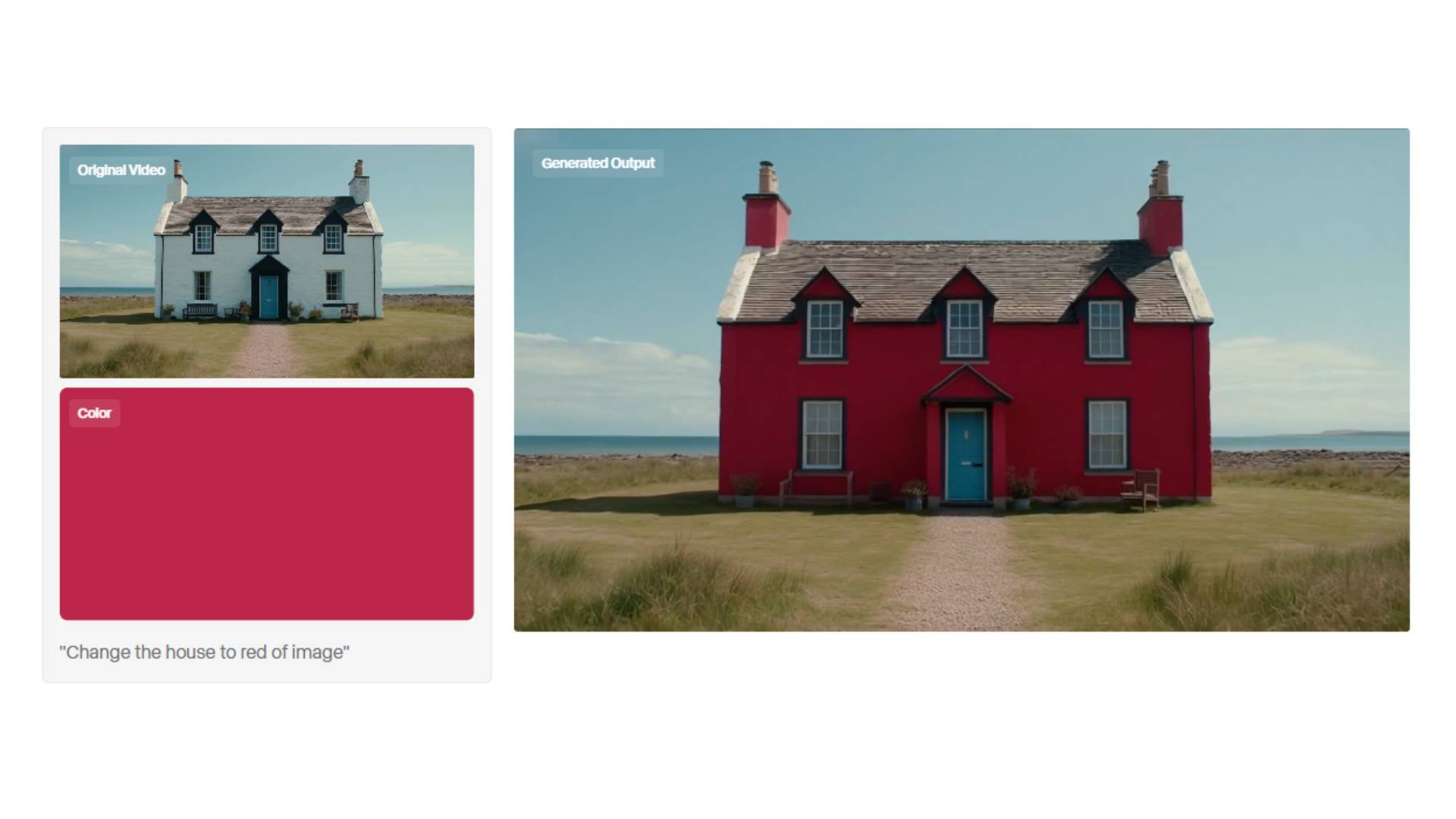

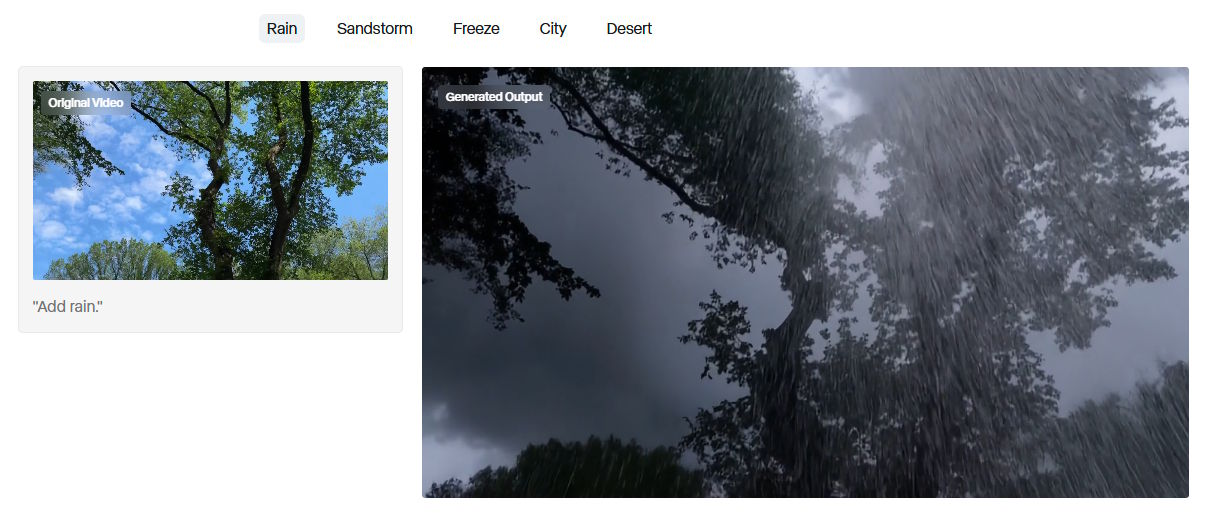

Aleph can also clean up scenes by removing smoke or reflections, add elements like fireworks or crowds, and even change the setting by adding rain or shifting the time of day. The lighting adjusts automatically to match the new look.

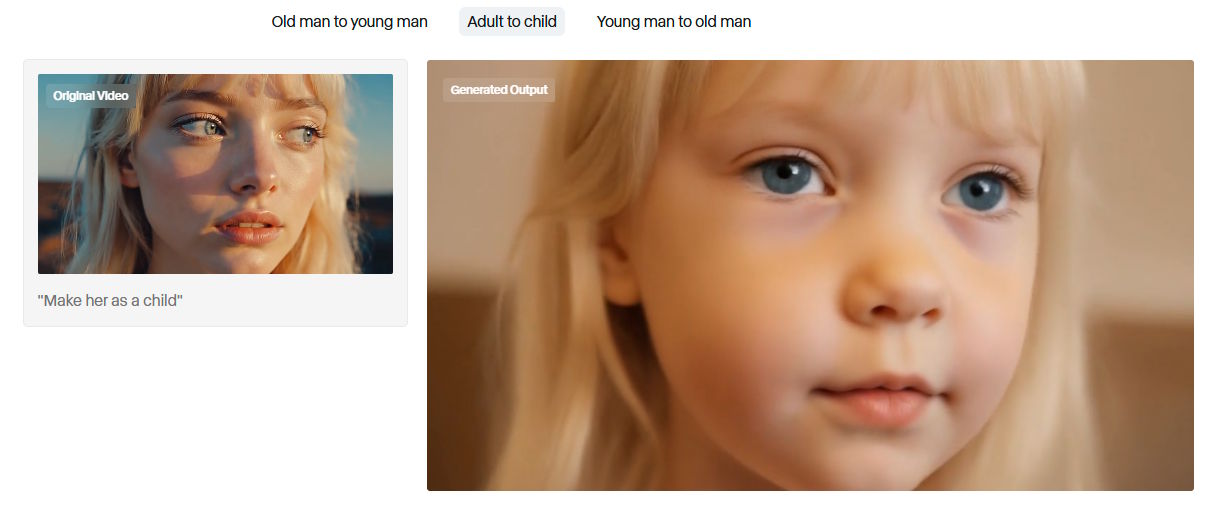

The model goes further by transforming characters, changing their age ("Make her as a child"), recoloring objects, and generating green screen effects. It can even transfer motion from live video onto static images.

Limited rollout for now

Aleph is aimed at professional filmmakers who want to use real footage as a foundation. This reflects a shift toward more comprehensive AI platforms that handle several post-production tasks in one place, instead of relying on specialized models for each job. Google's Veo 3 is heading in a similar direction, generating audio to match video clips.

Video models have lagged behind pure image models in editing capabilities, but Aleph suggests the gap is closing. In May, German startup Black Forest Labs introduced Flux.1 Kontext, a model that can edit parts of an existing image without generating it from scratch.

Right now, Aleph is only available to Enterprise customers and Creative Partners, with broader access planned for the future. Runway has already partnered with Hollywood studio Lionsgate.

Competitors like Midjourney, OpenAI, MiniMax, ByteDance, and Tencent are likely to introduce similar tools, but Runway might have an edge with its polished interface and deep set of editing tools.
