Stable Virtual Camera generates 3D videos from single images

Stability AI has unveiled "Stable Virtual Camera," a new AI system that transforms regular photos into 3D videos without requiring complex 3D reconstructions or scene optimizations.

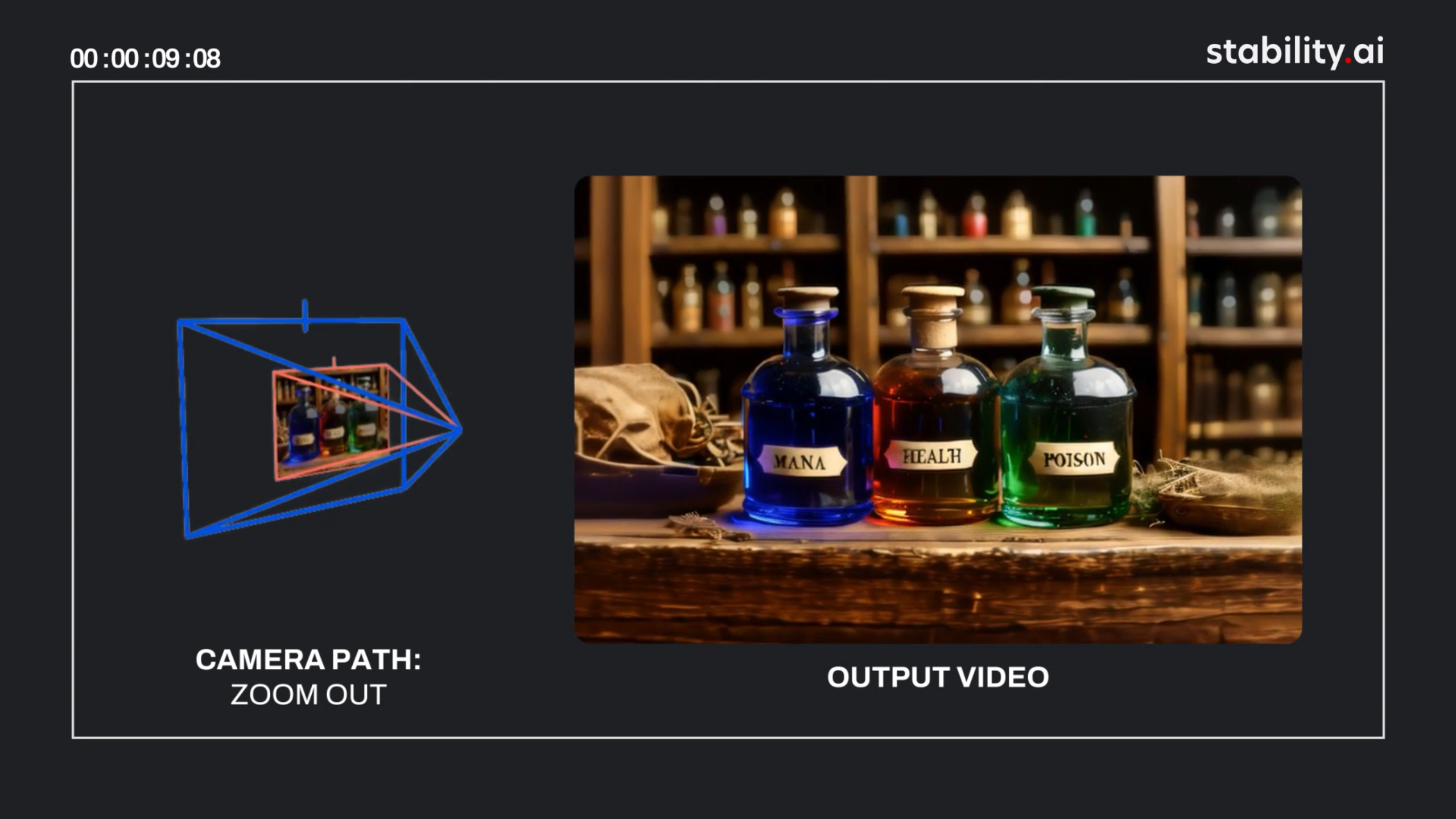

The system can create 360-degree videos lasting up to 30 seconds from a single photo or from up to 32 input images. It supports 14 different camera movements, including 360-degree rotations, spirals, zooms, and more complex patterns such as lemniscates (figure-eight loops). When the cameras follow a continuous trajectory, Stability AI says the generated views are three-dimensionally consistent, temporally consistent and, as the name suggests, "stable".
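To make the trajectory names more concrete, here is a minimal Python sketch that computes camera centers for two of the paths mentioned above: a full 360-degree orbit and a lemniscate (figure-eight). The function names, radius, scale and distance values are hypothetical illustrations and do not reflect Stability AI's API. The presets in the released code may be parameterized quite differently.

```python
import math


def orbit_positions(n_frames, radius=2.0, height=0.0):
    """Hypothetical helper: camera centers evenly spaced on a horizontal
    circle around the origin, covering one full 360-degree rotation."""
    return [
        (
            radius * math.cos(2 * math.pi * i / n_frames),
            height,
            radius * math.sin(2 * math.pi * i / n_frames),
        )
        for i in range(n_frames)
    ]


def lemniscate_positions(n_frames, scale=0.5, distance=2.0):
    """Hypothetical helper: camera centers tracing a lemniscate of Bernoulli
    (a figure-eight loop) in a plane at a fixed distance from the scene."""
    positions = []
    for i in range(n_frames):
        t = 2 * math.pi * i / n_frames
        denom = 1 + math.sin(t) ** 2
        x = scale * math.cos(t) / denom
        y = scale * math.sin(t) * math.cos(t) / denom
        positions.append((x, y, -distance))
    return positions


def look_at_forward(position, target=(0.0, 0.0, 0.0)):
    """Unit vector pointing from a camera center toward the target point.
    Assumes the camera does not sit exactly on the target."""
    d = [t - p for p, t in zip(position, target)]
    norm = math.sqrt(sum(c * c for c in d))
    return tuple(c / norm for c in d)


# Example: 8 frames of an orbit, each camera facing the scene center.
for pos in orbit_positions(8):
    print(pos, look_at_forward(pos))
```

In a full pipeline, each center would also be paired with a rotation (for example one built from the forward vector and an "up" direction) to form a complete camera pose.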

Handling multiple formats

The system works with various aspect ratios, including square (1:1), portrait (9:16), and landscape (16:9). This came as a surprise to the researchers, since the model was trained only on square images of 576×576 pixels. The team believes the model learned to generalize to other image sizes on its own, without being explicitly trained to do so.

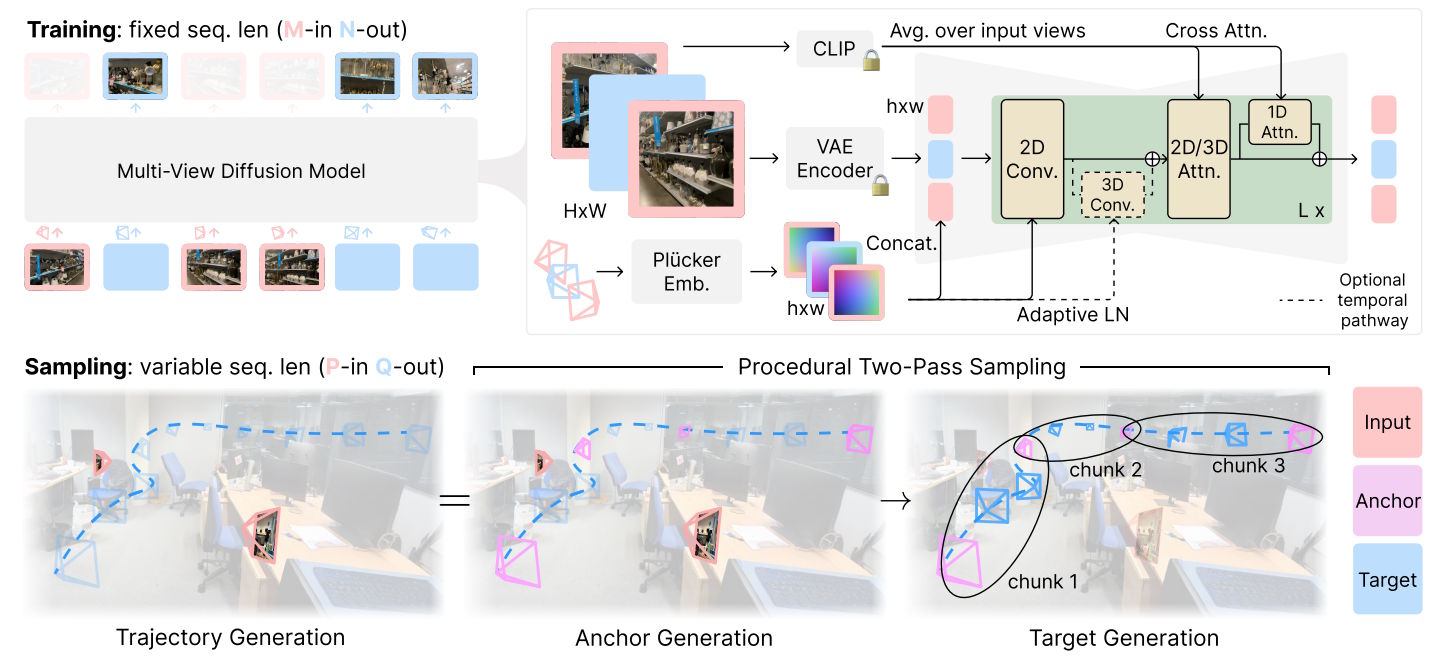

Stable Virtual Camera is based on a diffusion model with 1.3 billion parameters that builds on the Stable Diffusion 2.1 architecture. To improve spatial understanding, the researchers replaced the model's 2D self-attention with 3D self-attention, so that the model can relate image content across multiple views instead of within a single image only.
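In diffusion models, moving from 2D to 3D attention typically means that image tokens no longer attend only to tokens from their own view but to tokens from all views being generated together. The minimal Python sketch below contrasts the two under simplifying assumptions: a single attention head, identity query/key/value projections, and tiny hand-made token vectors. It illustrates the general idea only and is not Stability AI's implementation.

```python
import math


def attention(tokens):
    """Single-head scaled dot-product self-attention. For brevity, queries,
    keys and values are the tokens themselves (identity projections)."""
    dim = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in tokens]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) / total
                    for j in range(dim)])
    return out


def attention_2d(frames):
    """Per-view ("2D") attention: each frame's tokens only see that frame."""
    return [attention(frame) for frame in frames]


def attention_3d(frames):
    """Joint ("3D") attention: the tokens of all frames form one sequence,
    so every token can attend to tokens in every other view."""
    n = len(frames[0])
    flat = [tok for frame in frames for tok in frame]
    mixed = attention(flat)
    return [mixed[i * n:(i + 1) * n] for i in range(len(frames))]


# Two views with two 2-dimensional tokens each (toy values).
views = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0]]]
print(attention_2d(views)[0])
print(attention_3d(views)[0])
```

If the second view is changed, the first view's 2D output stays exactly the same, while its 3D output shifts. This cross-view information flow is what 3D attention is meant to provide for keeping generated views consistent with one another.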

The system generates its output in two passes. First, it creates what the developers call "anchor" views from the input images. Second, it generates the desired views between these anchors. According to the developers, this two-stage procedure helps keep the output consistent and stable.
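The two-pass idea can be illustrated with a small scheduler. This sketch assumes that anchors are evenly spaced along the target trajectory (every `anchor_stride`-th frame plus the last one), and it uses two hypothetical callables, `render_anchor` and `render_between`, as stand-ins for the diffusion model. How Stability AI actually selects anchors and conditions on them may differ.

```python
def plan_two_pass(num_frames, anchor_stride):
    """Hypothetical scheduler. Pass 1 picks anchor frames; pass 2 lists, for
    each pair of neighboring anchors, the frames that lie between them."""
    if num_frames < 1 or anchor_stride < 1:
        raise ValueError("num_frames and anchor_stride must be positive")
    anchors = list(range(0, num_frames, anchor_stride))
    if anchors[-1] != num_frames - 1:
        anchors.append(num_frames - 1)  # always anchor the final frame
    segments = [(left, right, list(range(left + 1, right)))
                for left, right in zip(anchors, anchors[1:])]
    return anchors, segments


def run_two_pass(num_frames, anchor_stride, render_anchor, render_between):
    """Run both passes with stand-in renderers (hypothetical callables)."""
    anchors, segments = plan_two_pass(num_frames, anchor_stride)
    frames = [None] * num_frames
    # Pass 1: generate the anchor views.
    for i in anchors:
        frames[i] = render_anchor(i)
    # Pass 2: fill in each gap, conditioned on the two surrounding anchors.
    for left, right, between in segments:
        for i in between:
            frames[i] = render_between(i, frames[left], frames[right])
    return frames


# Toy usage: anchors "render" their index, in-between frames average neighbors.
print(run_two_pass(10, 4, lambda i: float(i), lambda i, a, b: (a + b) / 2))
```

Organized this way, every in-between frame is generated with fixed reference views on both sides, which is one plausible reason such a scheme reduces drift along long camera paths.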

In Stability AI's benchmarks, Stable Virtual Camera outperforms existing methods such as ViewCrafter and CAT3D, particularly on large viewpoint changes and smooth transitions between views.

The system still struggles to accurately render people, animals, and dynamic elements such as water surfaces. Visual artifacts can appear during complex camera movements or when processing ambiguous scenes, especially when the target perspective is significantly different from the original image.

Availability

The system is now available to researchers under a non-commercial license, with model weights freely available on Hugging Face and source code on GitHub. A public demo is also accessible through Hugging Face.

Since its early success with image generators, Stability AI has faced growing competition from both open-source projects and commercial rivals; Flux in particular has become a prominent alternative for open-source image generation.

The company recently reorganized to focus on two key areas: advancing research in 3D processing and novel view synthesis, and developing optimized models for low-power devices such as smartphones.


- Get the latest AI news from The Decoder.