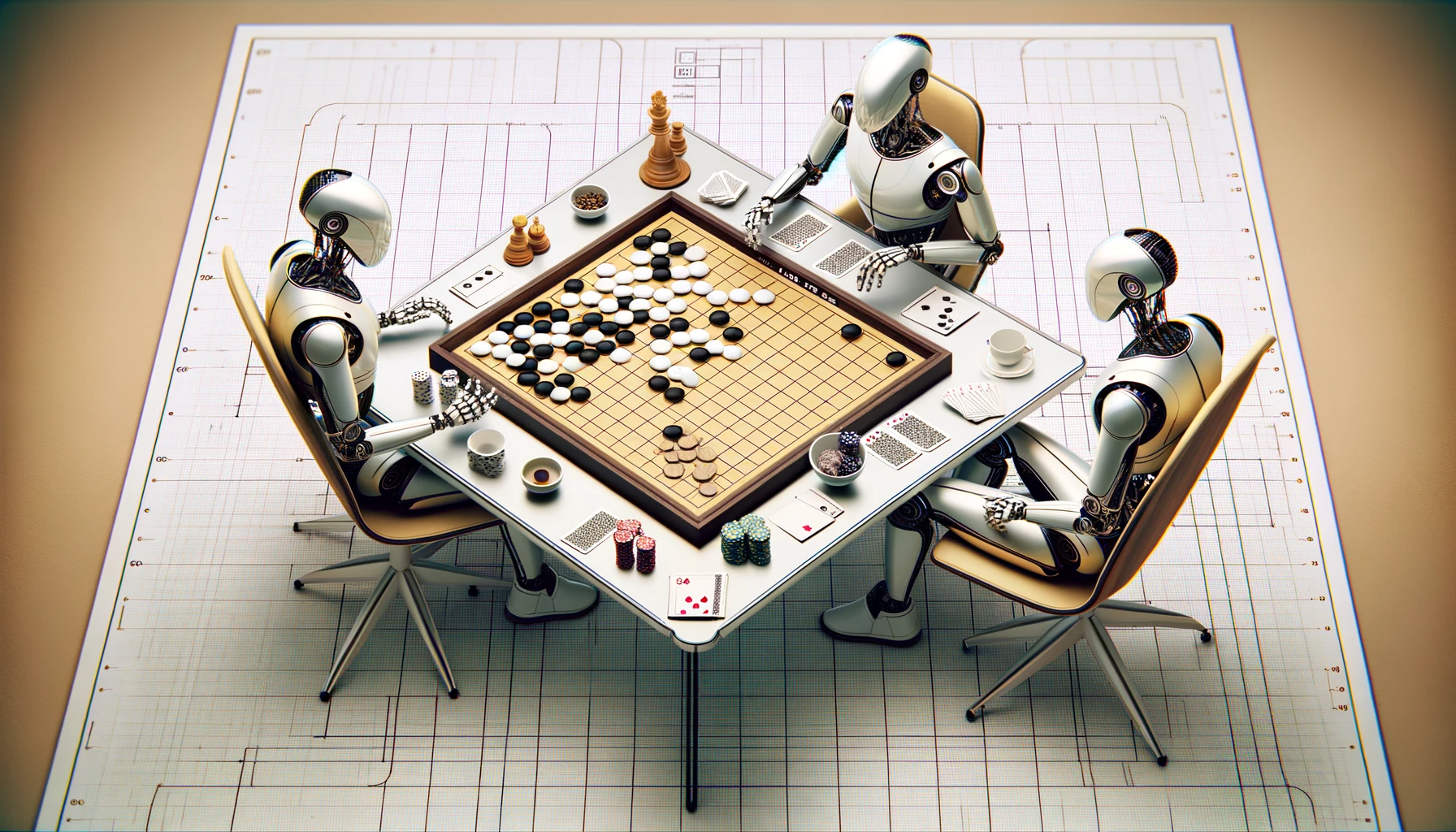

Deepmind's new AI system plays board games like chess and Go, but also cleans up at the poker table. Here is why that is a real challenge.

Google Deepmind has a long history of developing AI systems for board and video games. Deepmind first came to prominence with artificial intelligence that mastered a series of Atari games via Deep Reinforcement Learning.

Since then, Deepmind's AI systems have defeated a Go world champion with AlphaGo, beaten their own predecessors with AlphaGo Zero and AlphaZero, and beaten gaming pros with AlphaStar. With MuZero, Deepmind also introduced an AI model that learns game rules on its own.

Deepmind's work on board and video games is fundamental research that could potentially be applied to other, economically attractive AI applications.

Student of Games: from specialist to all-rounder

Games can be broadly divided into two categories: Those that reveal all information, such as the position of the pieces, and those that hide information, such as other players' cards.

Perfect information games are AlphaZero's specialty. The system can play all kinds of board games, such as chess or Go, at a superhuman level. AI systems for games with imperfect information, such as poker, are now also performing at a high level: in 2016, the poker AI DeepStack beat human professionals. In mid-2019, Facebook demonstrated a poker AI that was able to beat five players simultaneously in a tournament.

But: AlphaZero doesn't play poker, DeepStack doesn't play chess - the systems are specialists.

Deepmind's latest AI system, Student of Games (SoG), is changing all that. It combines guided search, self-play learning, and game-theoretic reasoning.

According to the paper published in Science Advances, SoG is the "first algorithm to achieve strong empirical performance in large perfect and imperfect information games - an important step towards truly general algorithms for arbitrary environments."

Student of Games unifies previous approaches

AlphaZero's recipe for success was to know the rules of the game and then use a search algorithm to play countless games against itself. For the search, the AI system relies on a deep search of decision trees, more precisely on MCTS (Monte Carlo Tree Search). However, this method is not suitable for games with imperfect information, where game-theoretic considerations, such as concealing one's intentions, are essential.
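To give a feel for how a tree search decides which move to explore next, here is a minimal sketch of the classic UCB1 selection rule used in textbook MCTS. It is an illustration only: AlphaZero uses a related, neural-network-guided variant, and the names `ChildStats`, `ucb1_score`, `select_child` and the exploration constant `c=1.4` are assumptions made for this example, not taken from Deepmind's code.

```python
import math
from dataclasses import dataclass


@dataclass
class ChildStats:
    """Statistics the search keeps for one candidate move (hypothetical structure)."""
    visits: int = 0           # how often the search has tried this move
    total_value: float = 0.0  # sum of the outcomes observed after this move


def ucb1_score(child: ChildStats, parent_visits: int, c: float = 1.4) -> float:
    """Average outcome plus an exploration bonus that shrinks with more visits."""
    if child.visits == 0:
        return math.inf  # untried moves are always explored first
    mean_value = child.total_value / child.visits
    bonus = c * math.sqrt(math.log(parent_visits) / child.visits)
    return mean_value + bonus


def select_child(children: dict, c: float = 1.4) -> str:
    """Pick the move with the highest UCB1 score among the candidate moves."""
    parent_visits = sum(stats.visits for stats in children.values())
    return max(children, key=lambda move: ucb1_score(children[move], parent_visits, c))


if __name__ == "__main__":
    moves = {
        "e4": ChildStats(visits=100, total_value=60.0),
        "d4": ChildStats(visits=1, total_value=0.5),
    }
    # The rarely tried move gets a large exploration bonus.
    print(select_child(moves))
```

The rule balances moves that have worked well so far against moves that have barely been tried. It assumes that every player sees the full position, which is exactly what breaks down in poker.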

For SoG, Deepmind changes the search algorithm: SoG starts with a simple decision tree of possible strategies and plays against itself. After each game, the system analyzes how a different decision in each situation would have changed the outcome of the game. With this counterfactual learning method - called growing-tree counterfactual regret minimization (GT-CFR) - the decision tree grows over the course of training.
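The core idea of regret-based learning can be shown on a toy game. The following sketch applies plain regret matching, the building block behind counterfactual regret minimization, to rock-paper-scissors in self-play. It is not GT-CFR and has no growing tree. The game, the function names, and the biased starting regrets are assumptions chosen for illustration.

```python
ACTIONS = 3  # 0 = rock, 1 = paper, 2 = scissors


def payoff(a: int, b: int) -> int:
    """Payoff for playing a against b: +1 for a win, 0 for a tie, -1 for a loss."""
    return [0, 1, -1][(a - b) % 3]


def strategy_from_regrets(regrets):
    """Regret matching: play each action in proportion to its positive regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total == 0:
        return [1.0 / len(regrets)] * len(regrets)
    return [p / total for p in positive]


def train(iterations: int):
    """Self-play between two regret-matching players; returns their average strategies."""
    # Assumption: player 0 starts with a bias towards rock so the dynamics are not trivial.
    regrets = [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    strategy_sums = [[0.0] * ACTIONS for _ in range(2)]
    for _ in range(iterations):
        strategies = [strategy_from_regrets(r) for r in regrets]
        for player in range(2):
            opponent = strategies[1 - player]
            # What would each action have earned against the opponent's current strategy?
            action_values = [
                sum(opponent[b] * payoff(a, b) for b in range(ACTIONS))
                for a in range(ACTIONS)
            ]
            expected = sum(strategies[player][a] * action_values[a] for a in range(ACTIONS))
            for a in range(ACTIONS):
                # Regret: how much better action a would have been than the current mix.
                regrets[player][a] += action_values[a] - expected
                strategy_sums[player][a] += strategies[player][a]
    return [[s / iterations for s in sums] for sums in strategy_sums]


if __name__ == "__main__":
    for player, average in enumerate(train(100_000)):
        print(player, [round(p, 3) for p in average])
```

The strategies used in individual rounds keep cycling, but the average strategy of both players approaches the balanced mix of one third per action, the equilibrium of rock-paper-scissors. Counterfactual regret minimization applies the same kind of update at every decision point of a much larger game tree.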

The training enables SoG to play chess, Go, poker, and Scotland Yard. Deepmind tested the AI system against a variety of bots, including AlphaZero, GnuGo, Stockfish and Slumbot. In poker and Scotland Yard, SoG won the majority of its games. In chess and Go, SoG lost 99.5% of the games against AlphaZero. Nevertheless, the system plays at a very high amateur level, says Deepmind.

The researchers suggest that further improvements are possible and want to find out whether similar performance can be achieved with significantly fewer computing resources.

A first version of the paper was published on arXiv in 2021. Back then, the system was called Player of Games.