Meta signs multi-billion dollar deal to rent Google's TPUs in a direct challenge to Nvidia's AI chip dominance

Meta has signed a multi-year, multi-billion-dollar contract with Google to rent Google's AI chips, known as Tensor Processing Units (TPUs), to develop new AI models, according to The Information. Meta is also considering buying TPUs outright for its own data centers starting next year.

The deal takes direct aim at Nvidia, which dominates the AI chip market and has been Meta's go-to GPU supplier for AI training. Just days earlier, Meta had announced plans to buy millions of GPUs from Nvidia and AMD. Internally, Google Cloud executives have set a goal of capturing TPU sales worth up to ten percent of Nvidia's annual revenue, which runs at roughly $200 billion. Google has also launched a joint venture with an investment firm to lease TPUs to other customers.

Here's where it gets complicated: Google itself is one of Nvidia's biggest customers, since cloud customers still expect access to GPU servers. So Google has to keep buying Nvidia's latest chips to stay competitive in the cloud market, while simultaneously trying to eat into Nvidia's market share with its own silicon. OpenAI reportedly managed to negotiate 30 percent lower prices from Nvidia simply because TPUs exist as an alternative.

Source: The Information

Nvidia's DreamDojo is an open-source world model for robot training

Nvidia wants to move robot training out of the real world and into an AI world model. DreamDojo generates simulated futures from video data, no 3D engine required.

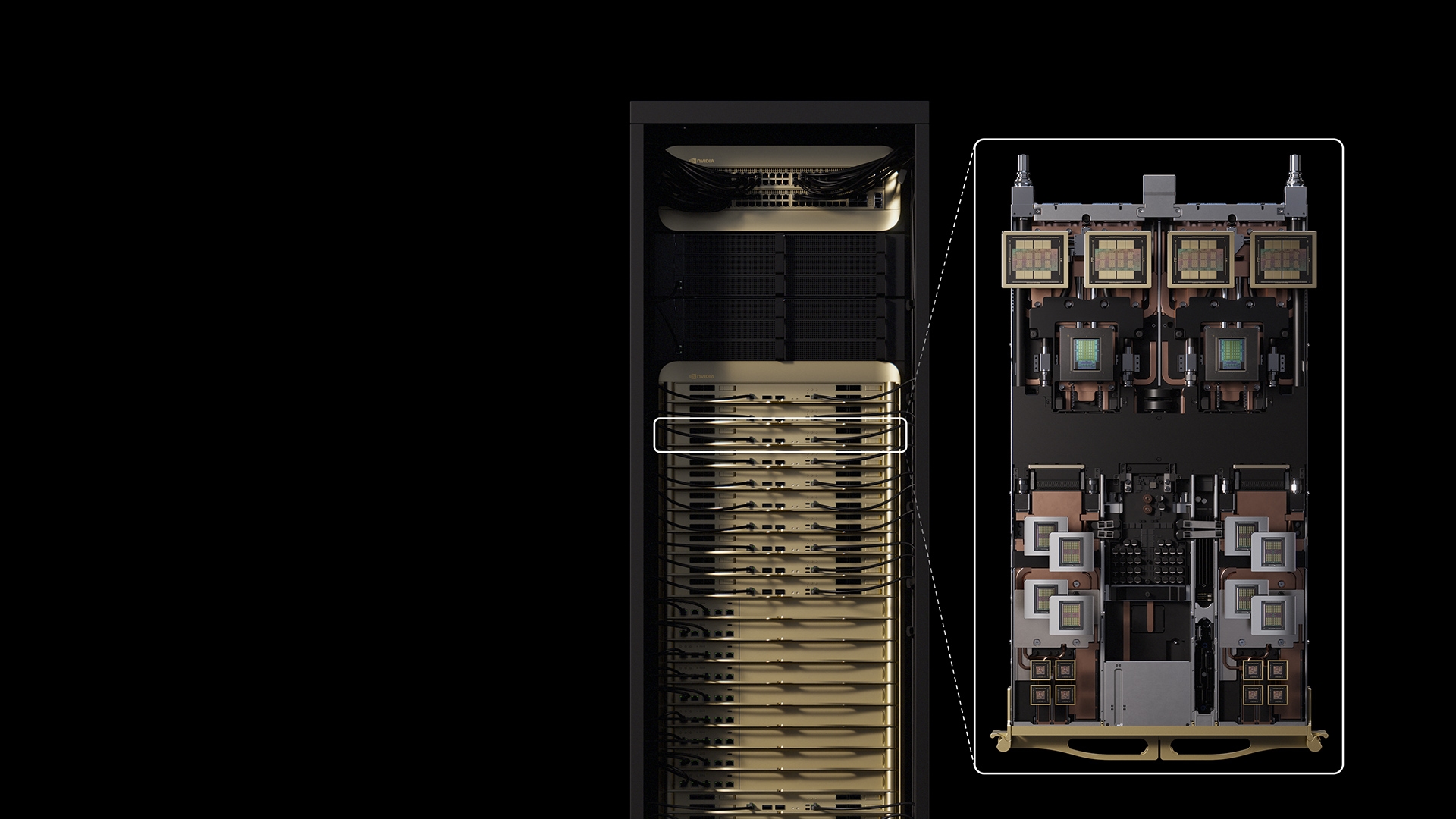

Nvidia reportedly set to invest $30 billion in OpenAI

Nvidia is close to investing $30 billion in OpenAI, Reuters reports, citing a person familiar with the matter. The investment is part of a funding round in which OpenAI aims to raise more than $100 billion in total. The deal would value the ChatGPT maker at roughly $830 billion and would be one of the largest private fundraises in history.

SoftBank and Amazon are also expected to participate in the round. OpenAI plans to spend a significant portion of the new capital on Nvidia chips needed to train and run its AI models.

According to the Financial Times, the investment replaces a deal announced in September, under which Nvidia was set to provide up to $100 billion to support OpenAI's chip usage in data centers. That original agreement took longer to finalize than expected.

Source: Reuters | Financial Times

Nvidia teams up with venture capital firms to find and fund India's next wave of AI startups

Nvidia is rapidly expanding its partnerships in India. According to CNBC, the chipmaker is working with major venture capital firms to find and fund Indian AI startups. More than 4,000 AI startups in India are already part of Nvidia's global startup program.

At the same time, Indian cloud provider Yotta has invested roughly $2 billion in Nvidia chips, the Economic Times reports. Nvidia is also partnering with Indian cloud providers to build out data center infrastructure.

The Indian government expects up to $200 billion in data center investments over the coming years. Adani alone is planning to spend $100 billion on AI-capable data centers. These efforts are all part of India's "IndiaAI Mission," a government initiative aimed at turning the country into a global technology powerhouse.

Source: CNBC | Economic Times

Nvidia CEO Jensen Huang claims AI no longer hallucinates, apparently hallucinating himself

Nvidia CEO Jensen Huang claims in a CNBC interview that AI no longer hallucinates. At best, that’s a massive oversimplification. At worst, it’s misleading. Either way, nobody pushes back, which says a lot about the current state of the AI debate.

OpenAI's dissatisfaction with Nvidia chips sparked Cerebras deal

The ChatGPT developer is reportedly unhappy with the speed of certain Nvidia chips and is negotiating with startups that offer alternatives.

Nvidia, Amazon, and Microsoft could invest up to $60 billion in OpenAI

OpenAI's latest funding round might hit peak circularity. According to The Information, the AI company is in talks with Nvidia, Microsoft, and Amazon about investments totaling up to $60 billion. Nvidia could put in as much as $30 billion, Amazon more than $10 billion—possibly even north of $20 billion—and Microsoft less than $10 billion. On top of that, existing investor SoftBank could contribute up to $30 billion. If these deals go through, the funding round could reach the previously rumored $100 billion mark at a valuation of around $730 billion.

Critics will likely point out how circular these deals really are. Several potential investors, including Microsoft and Amazon, also sell servers and cloud services to OpenAI, so a chunk of the investment money flows right back to the investors themselves. These arrangements keep the AI hype machine running without the financial benefits of generative AI actually showing up in what end users are willing to pay.

Source: The Information