Tech giants bend rules and discuss copyright violations to keep pace in AI arms race

A New York Times report reveals that leading AI companies are disregarding copyright and licensing rights while gathering data to train their AI models, even getting in each other's way in the process.

According to the report, OpenAI, Google, and Meta partly ignored their guidelines and discussed intentionally violating copyrights, assuming their competitors would do the same.

For instance, OpenAI developed Whisper, a speech recognition tool that transcribed over a million hours of YouTube videos, despite knowing it could be legally questionable since YouTube forbids using its content for unrelated applications.

The obtained texts were still used to develop GPT-4, OpenAI's most advanced language model. The Information already reported in summer 2023 that OpenAI uses YouTube transcripts.

Everything hinges on "fair use"

Google has also used YouTube video transcripts to train its AI models - potentially violating the copyrights of video creators. Google spokesman Matt Bryant said that the company had agreements with YouTube creators allowing such use, but admitted that Google was aware of "unconfirmed reports" about OpenAI's practice.

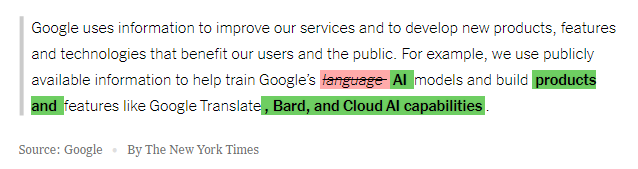

Google also changed its privacy policy to extract more user data from its services for AI training, including YouTube, Google Docs, Sheets, and similar products to improve systems like Google Translate and Bard.

The U.S. Federal Trade Commission (FTC) is critical of such retroactive adjustments to privacy rules to extract more data for AI training, and warns companies against this approach.

Records show Meta managers and lawyers also discussed obtaining additional data despite copyright restrictions, such as possibly buying publisher Simon & Schuster, which publishes J.K. Rowling and Stephen King.

Pressure came from Mark Zuckerberg to quickly catch up with ChatGPT. Meta argued license talks with publishers, artists, musicians, and news outlets would take too long and using the data would likely be "fair use."

Meta also said that licensing deals on the scale required for AI training were unaffordable. OpenAI said current AI models would be impossible without training on protected data.

Will Google now sue OpenAI?

The NYT report shows big tech firms disregard third-party rights when collecting data, with few ethical concerns, bending rules to fit their business model.

It would be highly inconsistent if Google sued OpenAI over YouTube training, as YouTube CEO Neal Mohan recently threatened, while Google itself faces trials for numerous potential data rights violations.

Only now that the first large-scale AI models are out, proving themselves in the market, and facing critical public questions are AI companies seeking to license training data from publishers, communities like Reddit, or online archives such as Photobucket.

Companies are also testing synthetic AI-generated data for training, but this risks exacerbating existing errors and biases, potentially degrading performance over time. It also raises questions about legitimate data origins if models that generate artificial training data have been trained on copyrighted data.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.