Wait a minute! Researchers say AI's "chains of thought" are not signs of human-like reasoning

A research team from Arizona State University warns against interpreting intermediate steps in language models as human thought processes. The authors see this as a dangerous misconception with far-reaching consequences for research and application.

In a position paper, the authors argue that interpreting these so-called "chains of thought" as evidence of human-like reasoning is both misleading and potentially harmful for AI research.

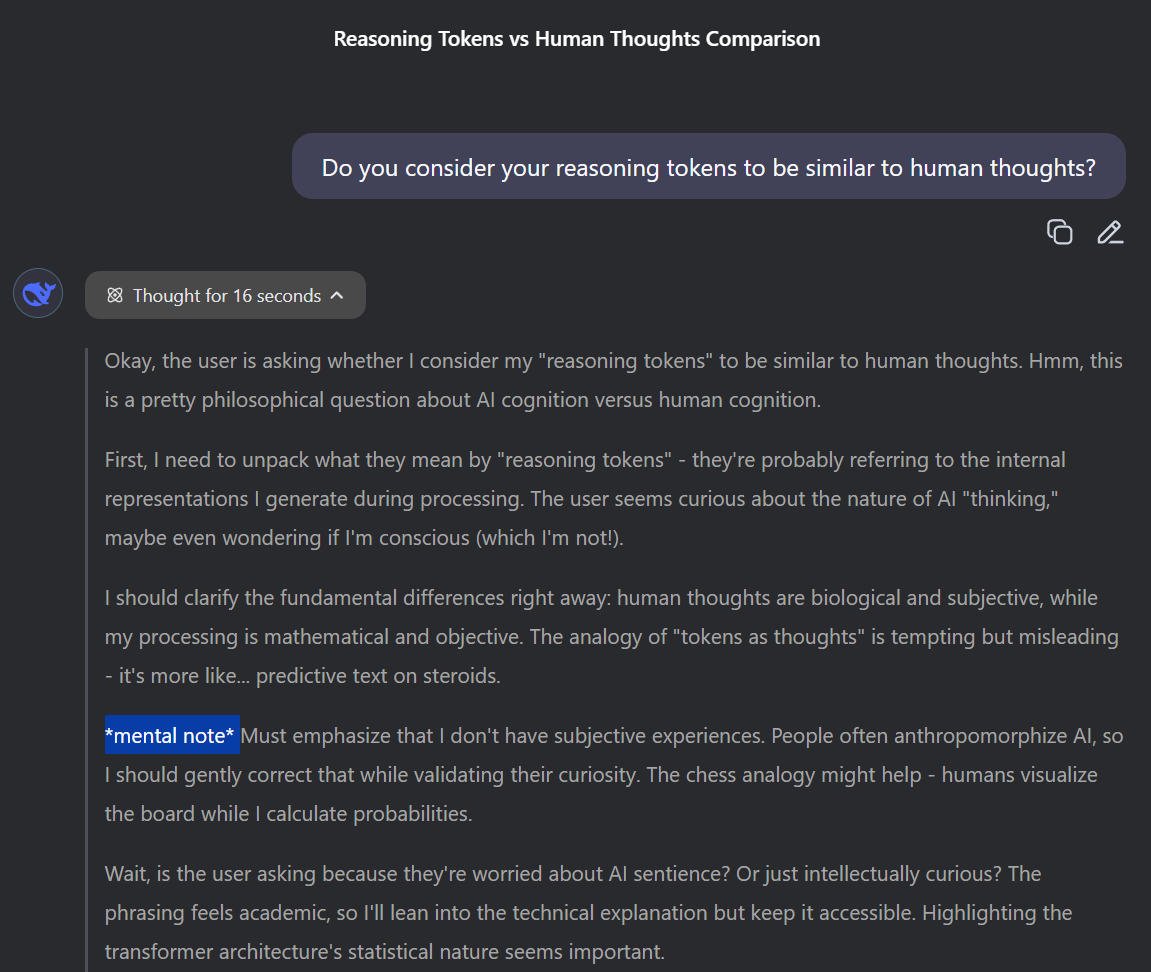

The team, led by Subbarao Kambhampati, calls the humanization of intermediate tokens a kind of "cargo cult" thinking. While these text sequences may look like the output of a human mind, they are just statistically generated and lack any real semantic content or algorithmic meaning. According to the paper, treating them as signposts to the model's inner workings only creates a false sense of transparency and control.

AI's intermediate steps aren't actual reasoning

Many so-called reasoning language models, like Deepseek's R1 or OpenAI's o-series, break down tasks into a series of intermediate steps before producing a final answer. These visible "chains of thought" are often mistaken for signs of genuine reasoning, especially in chat interfaces where users can watch the process unfold.

But the Arizona State researchers push back on this idea. They argue that intermediate tokens are just surface-level text fragments, not meaningful traces of a thought process. There's no evidence that studying these steps yields insight into how the models actually work—or makes them any more understandable or controllable.

Misleading research and misplaced confidence

The paper warns that these misconceptions have real consequences for AI research. For example, some scientists have tried to make these "chains of thought" more interpretable or have used features like their length and clarity as measures of problem complexity, despite a lack of evidence supporting these connections.

To illustrate the point, the authors cite experiments where models were trained with deliberately nonsensical or even incorrect intermediate steps. In some cases, these models actually performed better than those trained with logically coherent chains of reasoning. Other studies found almost no relationship between the correctness of the intermediate steps and the accuracy of the final answer.

For example, according to the authors, the Deepseek R1-Zero model, which also contained mixed English-Chinese forms in the intermediate tokens, achieved better results than the later published R1 variant, whose intermediate steps were specifically optimized for human readability. Reinforcement learning can make models generate any intermediate tokens - the only decisive factor is whether the final answer is correct.

In one example, the Deepseek R1-Zero model, which mixed English and Chinese in its intermediate tokens, outperformed a later version optimized for human readability. Reinforcement learning can train models to produce any kind of intermediate text; the only thing that matters is whether the final answer is right.

The researchers also note that human-like touches, like inserting words such as "aha" or "hmm," are often mistaken for signs of true insight, when they are really just statistically likely continuations. According to Kambhampati, it's easy to find examples where the model's intermediate steps include obvious mistakes, but the final output is still correct.

Trying to interpret these jumbled text fragments, he argues, is like reading meaning into a Rorschach test: because the content is inconsistent and often meaningless, observers end up projecting their own interpretations onto random patterns. In practice, the supposed usefulness of these intermediate tokens has little to do with whether the final answer is actually accurate.

Further research shows that even when models generate intermediate steps full of gibberish or errors, they can still arrive at the right answer—not because these fragments reflect real reasoning, but because they act as useful prompt additions. Other studies have found that models often switch between problem-solving strategies inefficiently, especially when tackling harder tasks. This pattern suggests that having intermediate steps is more about shaping the model's output than improving the quality of its reasoning.

Focus on verification, not anthropomorphism

The Arizona State team argues that today's reasoning models aren't actually "thinking." Instead, they're getting better at using feedback and verification signals during training. What looks like a step-by-step reasoning process is really just a side effect of optimization.

Rather than treating intermediate tokens as glimpses into an AI's mind, the researchers say they should be seen as a prompt engineering tool. The focus should be on improving performance, not on making the process look human. One suggestion is to use a second model to generate intermediate steps that boost accuracy, even if those steps don't make any semantic sense. The important thing isn't how logical the tokens seem, but whether they help the model get the right answer.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.