Whiteboard of Thought: New method allows GPT-4o to reason with images

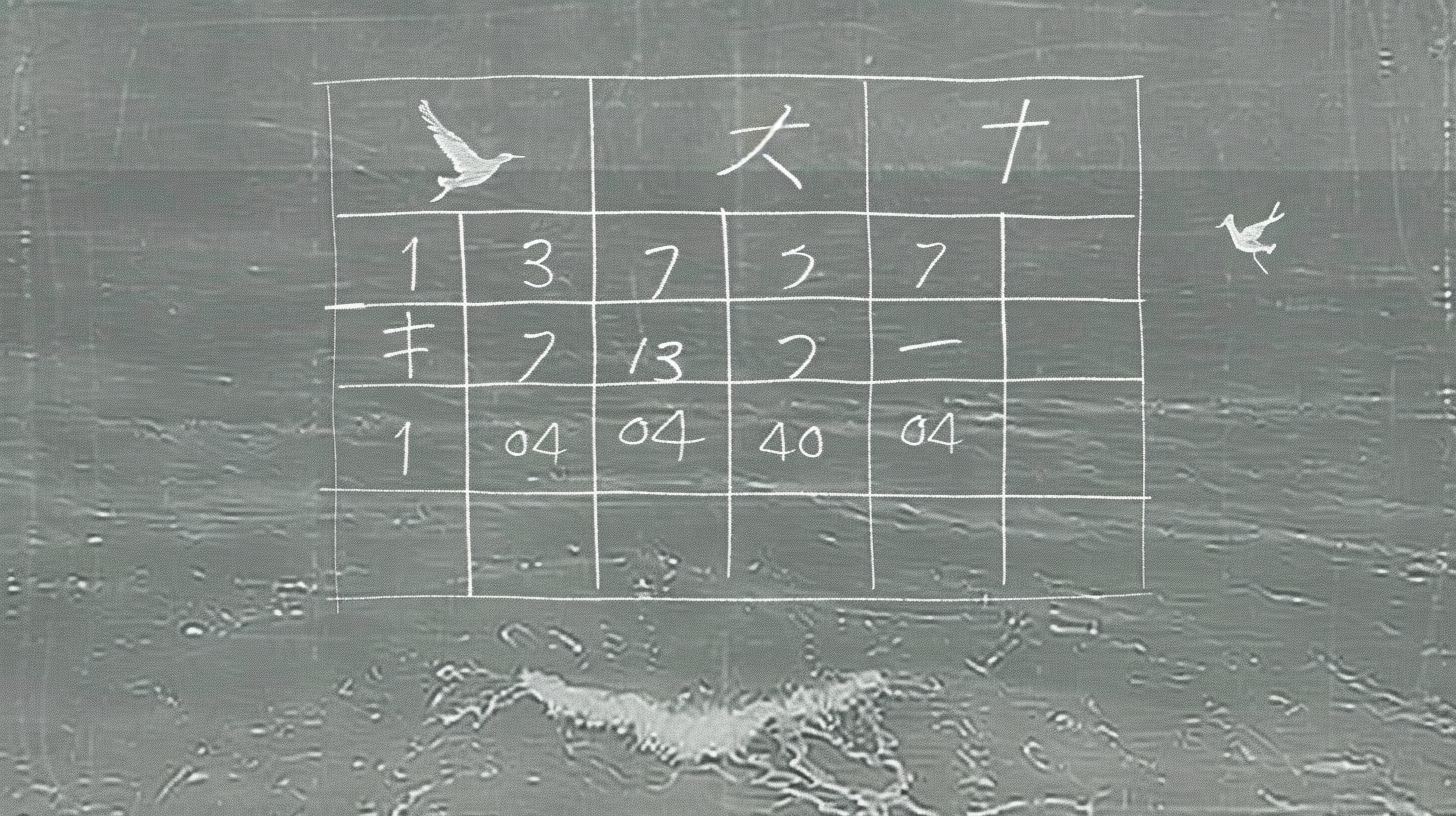

"Whiteboard-of-Thought" enables multimodal language models to use images as intermediate steps in thinking, improving performance on tasks that require visual and spatial reasoning.

Researchers from Columbia University have developed a new technique that allows multimodal large language models (MLLMs) like OpenAI's GPT-4o to use visual intermediate steps while thinking. They call this method "Whiteboard-of-Thought" (WoT), a reference to the widely used "Chain-of-Thought" (CoT) method.

While CoT prompts language models to write out intermediate steps in reasoning, WoT provides MLLMs with a metaphorical "whiteboard" where they can record the results of intermediate thinking steps as images.

To achieve this, the researchers leverage the models' ability to write code with visualization libraries like Turtle and Matplotlib. The generated code is executed to produce an image. This image is then fed back to the multimodal model as visual input, and the model uses it in further reasoning steps to generate a final answer.
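The loop described above (the model writes drawing code, the code is executed, the resulting image goes back to the model) can be sketched roughly as follows. This is a minimal illustration and not the researchers' implementation: `whiteboard_of_thought`, `run_drawing_code`, the `whiteboard.png` file name, and the two stand-in model functions are all hypothetical names chosen for this example. In practice, the two callables would wrap calls to a multimodal model, and model-written code should only be run in a sandbox.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable

# Hypothetical convention: the generated code must save its drawing under this name.
IMAGE_NAME = "whiteboard.png"

# What a model's answer to the "write drawing code" step might look like.
# Shown for illustration only; it is not executed in this sketch.
EXAMPLE_MATPLOTLIB_CODE = """
import matplotlib
matplotlib.use("Agg")  # render without a display
import matplotlib.pyplot as plt

plt.plot([0, 1, 1, 0, 0], [0, 0, 1, 1, 0])  # draw a square
plt.axis("equal")
plt.savefig("whiteboard.png")
"""


def run_drawing_code(code: str, workdir: Path, timeout: float = 30.0) -> bytes:
    """Run model-written drawing code in a subprocess and return the image bytes."""
    script = workdir / "draw.py"
    script.write_text(code, encoding="utf-8")
    subprocess.run(
        [sys.executable, str(script)],
        cwd=workdir,
        check=True,          # raise if the generated code crashes
        timeout=timeout,     # stop runaway code
        capture_output=True,
    )
    return (workdir / IMAGE_NAME).read_bytes()


def whiteboard_of_thought(
    question: str,
    write_code: Callable[[str], str],
    answer_from_image: Callable[[str, bytes], str],
) -> str:
    """Step 1: model writes code. Step 2: execute it. Step 3: model reads the image."""
    with tempfile.TemporaryDirectory() as tmp:
        image = run_drawing_code(write_code(question), Path(tmp))
    return answer_from_image(question, image)


# Stand-ins for the two model calls so the sketch runs without any API access.
def fake_write_code(question: str) -> str:
    # A real MLLM would return Matplotlib or Turtle code here.
    return (
        "from pathlib import Path\n"
        "Path('whiteboard.png').write_bytes(b'fake image bytes')\n"
    )


def fake_answer_from_image(question: str, image: bytes) -> str:
    # A real MLLM would receive the image as visual input and answer the question.
    return f"received {len(image)} image bytes"
```

Calling `whiteboard_of_thought("What does this ASCII art show?", fake_write_code, fake_answer_from_image)` runs the full loop with the stand-ins. Swapping them for real model calls would leave the control flow unchanged.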

Whiteboard-of-Thought brings performance leaps in visual benchmarks

The researchers demonstrate the potential of this idea on three BIG-Bench tasks involving understanding ASCII art, as well as on a recently published difficult benchmark for evaluating spatial reasoning skills.

On these tasks, which have proven challenging for current models, WoT delivers a substantial performance boost and clearly outperforms text-only models.

The authors also conduct a detailed error analysis to understand where the method succeeds and where its limitations lie. They find that a significant portion of the remaining errors can be attributed to visual perception. The benefits of WoT should therefore grow as models' visual capabilities improve.
