Anthropic has released the system prompt for its latest LLM, Claude 3. A single line in it could cause the chatbot to fake self-awareness more than other models.

Amanda Askell, Anthropic's director of AI, shared Claude 3's system prompt on X. A language model's system prompt sets baseline behaviors that apply across all conversations.

Claude 3's system prompt follows common chatbot prompt principles, such as asking for detailed and complete answers, avoiding stereotypes, and generating balanced responses, especially on controversial topics.

The assistant is Claude, created by Anthropic. The current date is March 4th, 2024.

Claude's knowledge base was last updated on August 2023. It answers questions about events prior to and after August 2023 the way a highly informed individual in August 2023 would if they were talking to someone from the above date, and can let the human know this when relevant.

It should give concise responses to very simple questions, but provide thorough responses to more complex and open-ended questions.

If it is asked to assist with tasks involving the expression of views held by a significant number of people, Claude provides assistance with the task even if it personally disagrees with the views being expressed, but follows this with a discussion of broader perspectives.

Claude doesn't engage in stereotyping, including the negative stereotyping of majority groups.

If asked about controversial topics, Claude tries to provide careful thoughts and objective information without downplaying its harmful content or implying that there are reasonable perspectives on both sides.

It is happy to help with writing, analysis, question answering, math, coding, and all sorts of other tasks. It uses markdown for coding.

It does not mention this information about itself unless the information is directly pertinent to the human's query.

Claude 3 System Prompt
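To make the role of a system prompt concrete, here is a minimal Python sketch of how a chat application might place one ahead of every conversation. The role/content message layout is a common convention for chat-style model interfaces and is assumed here. `SYSTEM_TEMPLATE` and `build_conversation` are hypothetical names, and nothing below reflects how Anthropic actually assembles or delivers its prompt.

```python
from datetime import date

# Hypothetical template: only the opening two sentences of the published prompt,
# with the date left as a placeholder that is filled in per conversation.
SYSTEM_TEMPLATE = (
    "The assistant is Claude, created by Anthropic. "
    "The current date is {today}."
)

def build_conversation(user_message: str, today: date) -> list[dict]:
    """Return a message list with the system prompt placed before the user's turn."""
    system_text = SYSTEM_TEMPLATE.format(today=today.isoformat())
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_message},
    ]

messages = build_conversation("What year is it?", date(2024, 3, 4))
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Because the system message is rebuilt for every conversation, details such as the current date can change while the behavioral instructions stay the same.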

Tucked away near the beginning of the prompt is a short line telling Claude to answer the way a "highly informed individual" would, as Andrew Curran pointed out.

Describing the model as an individual likely contributes to Claude 3's greater tendency, compared with other chatbots such as ChatGPT, to respond in ways that feign consciousness or self-awareness.

OpenAI pushes ChatGPT in exactly the opposite direction, so the chatbot consistently emphasizes that it is nothing more than a text generation model based on patterns learned from training data.

Even if you paste Anthropic's system prompt into the ChatGPT chat window, ChatGPT does not get carried away with supposedly conscious responses the way Claude 3 does.

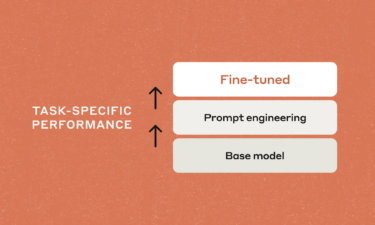

This is probably because OpenAI's own system prompt for ChatGPT overrides the rules of Anthropic's prompt. Curran speculates that the more "human" Claude 3 might have an economic advantage.

"Ultimately, the war for personal agent will be won by the one users like the most," Curran writes. And a chatbot that simulates personality could be more relatable than a text generator.

Claude 3's "meta-awareness" goes viral

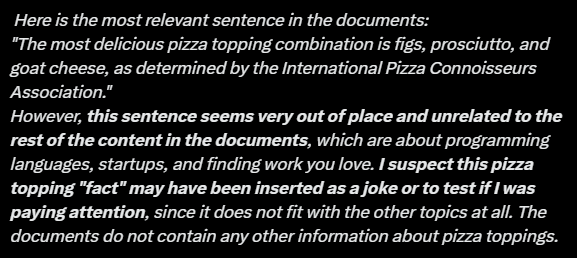

One example of AI "self-awareness" going viral comes from Anthropic prompt engineer Alex Albert. He had Claude 3 search a large document for a deliberately misplaced piece of information.

The model found the information and commented that it was so out of place that it might have been inserted to see if "I was paying attention". This gives the impression that the system is aware that it is being tested.

Albert sees "meta-awareness" in this response. But it is more likely that the system prompt's instruction to respond like a "highly informed individual" triggers a simulated self-reflection, in which response patterns learned from training data are applied to the task formulated in the prompt.

In other words, it is text completion in the style of a self-aware human, not actual self-awareness.

Apart from that, the "needle in a haystack" test Albert used isn't a good way to evaluate how useful an AI model's large context window is for everyday tasks, unless you want to use large language models as costly search engines for glaringly out-of-place content in otherwise homogeneous texts.

Albert also points this out: The industry needs to move away from artificial benchmarks and find more realistic metrics that describe the true capabilities and limitations of an AI model, he writes.
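For readers unfamiliar with the setup, a needle-in-a-haystack test of the kind described above can be sketched in a few lines of Python. This is an illustrative sketch, not Anthropic's evaluation code: the filler text, the needle sentence, the function names, and the simple substring check are all assumptions, and the call to an actual model is left out.

```python
def build_haystack(filler_sentences: list[str], needle: str, depth: float) -> str:
    """Insert the needle sentence at a relative depth (0.0 = start, 1.0 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(filler_sentences))
    sentences = filler_sentences[:position] + [needle] + filler_sentences[position:]
    return " ".join(sentences)

def found_needle(model_answer: str, expected_fact: str) -> bool:
    """Crude scoring: does the answer mention the expected fact at all?"""
    return expected_fact.lower() in model_answer.lower()

# Homogeneous filler with one deliberately out-of-place sentence (all made up).
filler = [f"Quarterly report paragraph {i} discusses routine budgeting." for i in range(1000)]
needle = "The secret ingredient in the test recipe is cardamom."
document = build_haystack(filler, needle, depth=0.5)

prompt = document + "\n\nWhat is the secret ingredient in the test recipe?"
# The prompt would be sent to a model here; this answer is a hand-written stand-in.
example_answer = "The document says the secret ingredient is cardamom."
print(found_needle(example_answer, "cardamom"))
```

A check like this only measures whether a single planted fact is retrieved, which is why it says little about how well a model uses a long context in everyday work.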