You can now fine-tune three GPT-3.5 models, GPT-4 to follow this fall

Key Points

- OpenAI has released fine-tuning for GPT-3.5 Turbo, letting developers customize the model for specific applications with their own data and improve steerability, output formatting, and tone.

- GPT-3 base models will be replaced with cheaper alternatives, babbage-002 and davinci-002, and the company plans to enable fine-tuning for GPT-4 later this year.

- All data used for fine-tuning remains owned by the customer, ensuring privacy, according to OpenAI.

Updated September 20, 2023:

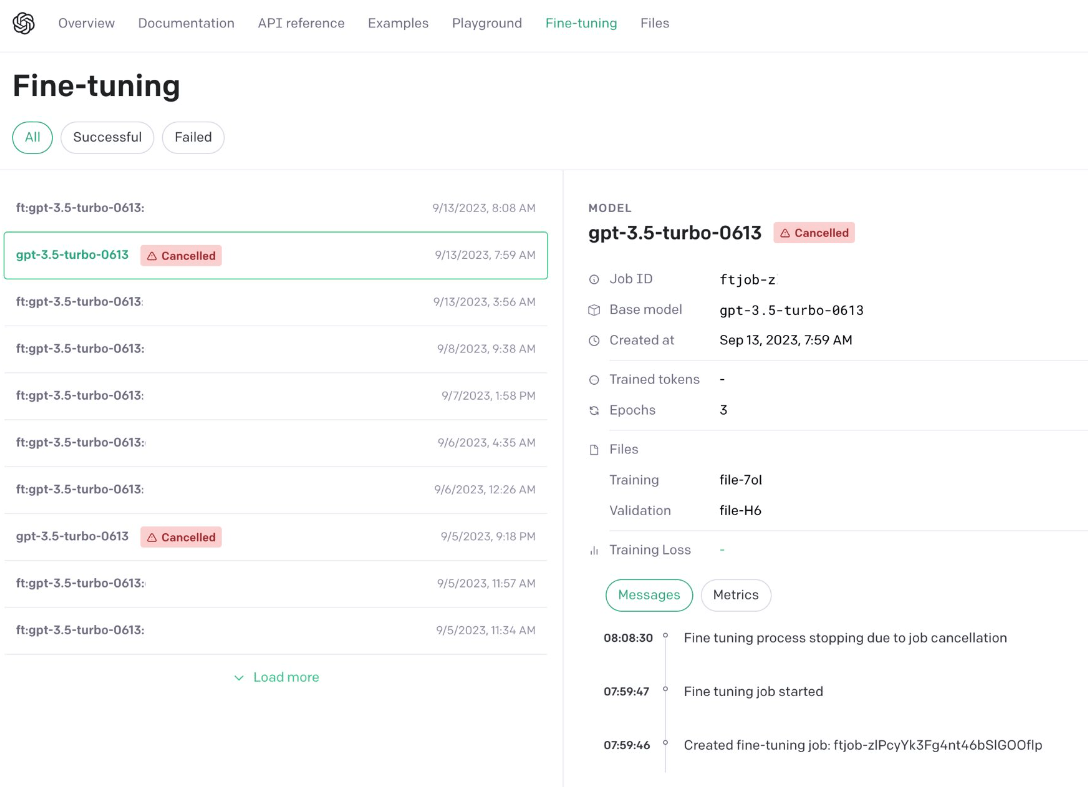

OpenAI is rolling out a new fine-tuning interface that shows the status of fine-tuning jobs. In the coming months, it will also be possible to start fine-tuning from the UI, which should make the feature accessible to more users. In addition, three GPT-3.5 models can now be fine-tuned with your own data; previously, this was only available for one model.

Original article from August 22, 2023:

Fine-tuning is now available for GPT-3.5 Turbo, with GPT-4 coming this autumn. OpenAI also makes cheaper GPT-3 models available.

OpenAI has announced the release of fine-tuning capabilities for GPT-3.5 Turbo, allowing developers to customize the language model for improved performance in specific use cases. Fine-tuning for GPT-4 will be enabled later this year, the company said.

Fine-tuning allows custom data to be fed into GPT-3.5 Turbo to improve its capabilities for specific tasks. Use cases include better steerability, more consistent output formatting, and a custom tone or voice. Because instructions can be trained into the model instead of being repeated in every request, fine-tuning can also reduce prompt length by up to 90%, which lowers costs. Safety mechanisms such as the Moderation API are used to filter out unsafe training data and enforce content policies during fine-tuning.
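To make "custom data" more concrete, here is a minimal sketch of what a training file for tone customization might look like. It assumes the chat-style JSONL layout (one JSON object per line with a `messages` list) that OpenAI documents for GPT-3.5 Turbo fine-tuning; the example conversation and the helper name `to_jsonl` are made up for illustration.

```python
import json

# Hypothetical training examples: each one is a short conversation that
# demonstrates the tone the fine-tuned model should adopt.
examples = [
    {
        "messages": [
            {"role": "system", "content": "You are a support assistant that answers formally."},
            {"role": "user", "content": "where's my order??"},
            {"role": "assistant", "content": "Thank you for reaching out. I will check the status of your order right away."},
        ]
    },
]


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(record) + "\n" for record in records)


jsonl_text = to_jsonl(examples)
print(jsonl_text, end="")
```

A real training set would contain many such conversations; the single record here only shows the shape of one line.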

Early tests show that fine-tuned versions can match or exceed the performance of GPT-4 for specialized applications, according to OpenAI.

OpenAI replaces old GPT-3 models with babbage-002 and davinci-002

Fine-tuning costs are split into an initial training cost and ongoing usage costs:

- Training: $0.008 / 1K Tokens

- Usage input: $0.012 / 1K Tokens

- Usage output: $0.016 / 1K Tokens
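As a rough illustration of how these rates add up, the sketch below estimates training and usage costs. The rates come from the list above; the assumption that each training epoch bills the full training file, and the token counts used in the example, are illustrative and not taken from the article.

```python
# Rates from the list above, in USD per 1,000 tokens.
TRAINING_RATE = 0.008
INPUT_RATE = 0.012
OUTPUT_RATE = 0.016


def training_cost(tokens_in_file: int, epochs: int = 1) -> float:
    """Estimated training cost, assuming every epoch bills the whole file."""
    return tokens_in_file * epochs / 1000 * TRAINING_RATE


def usage_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost of calling the fine-tuned model."""
    return input_tokens / 1000 * INPUT_RATE + output_tokens / 1000 * OUTPUT_RATE


# Illustrative numbers: a 100,000-token training file trained for 3 epochs.
print(f"training: ${training_cost(100_000, epochs=3):.2f}")
# One request with 1,000 input tokens and 500 output tokens.
print(f"usage:    ${usage_cost(1_000, 500):.3f}")
```

Under these assumptions, the training run comes to about $2.40 and the single request to about $0.02.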

OpenAI also announced that the GPT-3 base models (ada, babbage, curie and davinci) will be shut down on 4 January 2024. As replacements, the company is making the babbage-002 and davinci-002 models available today, either as base or fine-tuned models.

These models can be fine-tuned using the new API endpoint /v1/fine_tuning/jobs. They are significantly cheaper than gpt-3.5-turbo. Full pricing is available on OpenAI's blog.
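A request to that endpoint could look roughly like the sketch below. It only builds the HTTP request with Python's standard library and does not send it. The host `api.openai.com` and the `training_file` and `model` body fields follow OpenAI's public API reference as far as we know; the file ID `file-abc123` and the helper name `build_fine_tuning_request` are hypothetical placeholders.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/fine_tuning/jobs"


def build_fine_tuning_request(training_file_id: str, model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that would create a fine-tuning job."""
    payload = {"training_file": training_file_id, "model": model}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_fine_tuning_request(
    training_file_id="file-abc123",  # hypothetical ID of a previously uploaded file
    model="davinci-002",
    api_key=os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
)
print(request.get_method(), request.full_url)

# Sending is left commented out so the sketch runs without network access:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

In practice the training file has to be uploaded first, and the ID returned by that upload takes the place of the placeholder.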

As with all of OpenAI's APIs, "data sent in and out of the fine-tuning API is owned by the customer and is not used by OpenAI, or any other organization, to train other models", the company said.
