People take photos for many reasons, one of which is to capture memories. The next generation of keepsake photos may be NeRFs, which get a quality upgrade at high speed with Zip-NeRF.

Google researchers demonstrate Zip-NeRF, a NeRF model that combines the advantages of grid-based techniques with those of the mipmap-based mip-NeRF 360.

Grid-based NeRF methods, such as Instant-NGP, train 3D scenes up to eight times faster than alternative NeRF methods, but their image quality is poorer: the grid approach leads to more aliasing, and details in the image can be lost.

Mip-NeRF 360 processes sub-volumes with depth information instead of a grid. This allows for more image detail with less aliasing, but the training time for a 3D scene can be several hours.
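To make the aliasing trade-off concrete, here is a minimal one-dimensional sketch. It is a toy illustration only, not the algorithm used by Instant-NGP, mip-NeRF 360, or Zip-NeRF, and all function names and parameters are made up for this example. It compares reading a fine stripe pattern at a single point per pixel with averaging the pattern over the whole pixel footprint.

```python
import math

def signal(x, freq=9.0):
    """A fine stripe pattern with `freq` full cycles per unit length (toy assumption)."""
    return math.sin(2.0 * math.pi * freq * x)

def point_sample(center, width):
    """Single-point lookup: reads the signal at the pixel center and ignores `width`."""
    return signal(center)

def area_sample(center, width, taps=64):
    """Footprint-aware lookup: averages the signal over the full pixel width."""
    start = center - width / 2.0
    step = width / taps
    return sum(signal(start + (i + 0.5) * step) for i in range(taps)) / taps

def render(sampler, num_pixels=8):
    """Render a row of `num_pixels` pixels covering the interval [0, 1]."""
    width = 1.0 / num_pixels
    return [sampler((i + 0.5) * width, width) for i in range(num_pixels)]

point_values = render(point_sample)
area_values = render(area_sample)

# The pattern is too fine for 8 pixels to resolve. Point sampling turns it
# into a strong, false low-frequency wave, while area averaging fades it out.
point_peak = max(abs(v) for v in point_values)
area_peak = max(abs(v) for v in area_values)
print(f"point sampling peak: {point_peak:.2f}")
print(f"area averaging peak: {area_peak:.2f}")
```

In this sketch, the point-sampled row shows a high-contrast pattern that is not actually in the scene at that scale, while the area-averaged row stays close to a flat gray. Accounting for the size of the region a pixel covers is the general idea behind anti-aliased rendering, although the actual NeRF models do this in 3D and in very different ways.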

The best of two NeRF techniques

Google researchers have now developed a method that combines the high image quality of mip-NeRF 360 with the fast training time of grid-based models. The result is high-quality 3D scenes with less aliasing, 8 to 76 percent fewer image errors depending on the scene, and training that is 22 times faster than with mip-NeRF 360.

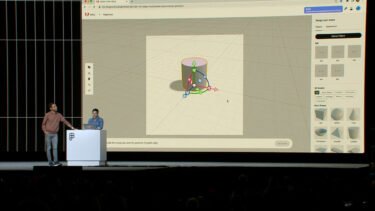

In demos, the research team shows impressive, expansive 3D NeRF scenes that digitally preserve an entire house, including its garden. Using a VR headset, the scene can be walked through in its original dimensions, similar to the real house, but static like a photograph. This is a truly powerful preservation technology.

During training, Zip-NeRF assembles the 3D scene from many individual 2D photos. Mip-NeRF 360 trains this scene in about 22 hours, while Zip-NeRF takes about one hour and delivers better image quality. An alternative combination of mip-NeRF 360 and Instant-NGP trains the scene about three times as fast as Zip-NeRF, but has significantly lower image quality and more artifacts.

Zip-NeRF, mip-NeRF 360, and the comparison version "mip-NeRF 360 + iNGP" were trained on eight Nvidia Tesla V100-SXM2-16 GB GPUs, while other comparison models that performed worse in the benchmarks were trained on a single Nvidia RTX 3090. Even so, the results show that NeRFs are inching closer to general availability.

Check out our no-code guide for Nvidia Instant-NGP to learn how to create your own NeRF and how to view it in VR. The open source Nerfstudio also makes it easy to get started with NeRF production.