OpenAI CTO dampens expectations of radically improved AI models in the near future

Key Points

- OpenAI Chief Technology Officer Mira Murati tamps down speculation about highly developed internal AI systems: In an interview, she clarifies that the AI models in OpenAI's research labs are currently not significantly more capable than the publicly available models.

- This also means that no significant leap in the performance of OpenAI's public models is to be expected in the near future. It takes many months of fine-tuning and safety testing before a model can be released after training.

- Critics see this as confirmation that the scaling of this type of AI technology has already reached a plateau. In fact, since the release of GPT-4, there has been no significant progress in the basic capabilities of large language models, especially in the area of logic.

According to Mira Murati, OpenAI's internal AI systems are not significantly more capable than the publicly available models, suggesting that no major leaps in performance are imminent.

There has been speculation that OpenAI is developing advanced AI models behind closed doors, even discussing their safety with governments, and that the startup simply knows more than it is sharing with the outside world.

But according to Murati, AI systems in OpenAI's research labs are currently not much more capable than what is available to the public.

"We have these capable models in the labs, and they're not that far ahead of what the public has access to for free," she says in an interview with Fortune.

Murati made the statement in the context of OpenAI's AI safety strategy, which aims to involve the public in the development of AI systems. According to Murati, this gives people an intuitive understanding of the technology's opportunities and risks and lets them prepare for the introduction of advanced AI. She adds that this situation is unique compared to previous technological developments.

Her statement also implies that OpenAI's publicly available AI models are unlikely to improve significantly in the near future if the current internal systems are only marginally better than GPT-4.

The process of developing a new model and bringing it to market is extensive, with fine-tuning and thorough safety testing often taking several months. OpenAI recently announced that it has just begun training new frontier models.

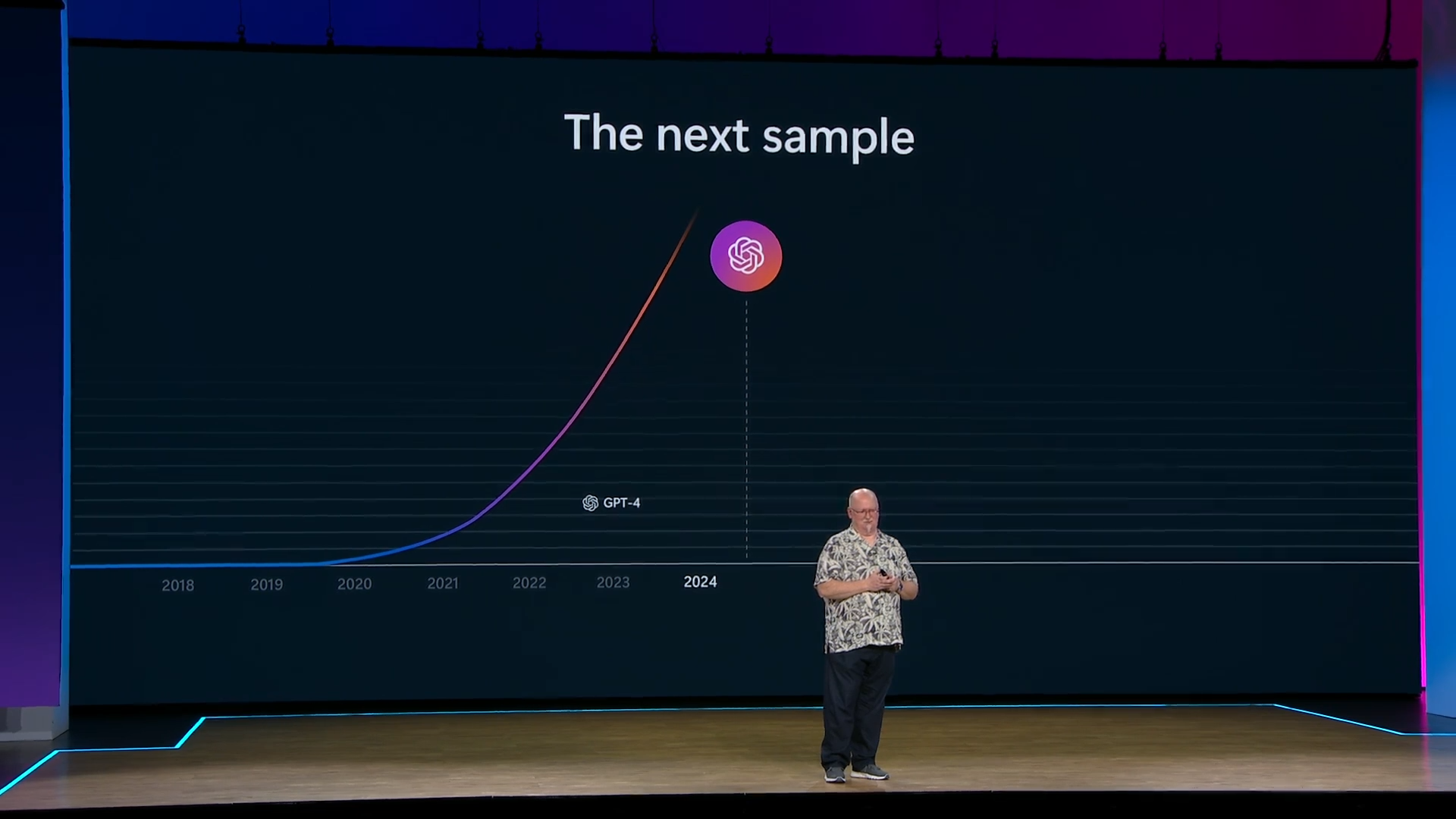

LLM critic Gary Marcus sees Murati's statement as confirmation of his belief that the scaling of this kind of AI technology has reached a plateau. Marcus even accuses OpenAI of bluffing. Microsoft co-founder Bill Gates also expects a smaller leap from GPT-4 to GPT-5 than the massive progress from GPT-2 to GPT-4.

However, OpenAI CEO Sam Altman and Microsoft CTO Kevin Scott continue to promise major advances, with Scott stating that they will happen this year.

The fact remains that there has been no significant progress in the fundamental capabilities of large language models, particularly in the area of logic, since the release of GPT-4.

Another performance leap based on scaling might be achieved through multimodality, where AI models learn and link knowledge from different types of data, especially video. OpenAI's new GPT-4 omni model is an example of this approach, but there is no scientific consensus on its effectiveness.
