OpenAI has announced the start of training for a new flagship model and introduced new safety measures.

The OpenAI Board of Directors has formed a new Safety and Security Committee, led by directors Bret Taylor, Adam D'Angelo, and Nicole Seligman, together with CEO Sam Altman. Over the next 90 days, the committee will develop recommendations on critical safety and security decisions for all OpenAI projects, the company announced today.

OpenAI also revealed that it has "recently begun training its next frontier model and we anticipate the resulting systems to bring us to the next level of capabilities on our path to AGI."

Once the review is complete, OpenAI plans to make the committee's recommendations public, to the extent permitted by security requirements. The committee includes OpenAI's own technical and policy experts and will also draw on outside security experts, such as Rob Joyce, the former cybersecurity director of the NSA.

The announcement of a new safety and security unit is likely a response to criticism over the past two weeks, during which several AGI safety researchers left OpenAI and accused the company of careless safety practices. OpenAI has disbanded the team responsible for developing Super AI alignment and folded its work into existing safety teams.

GPT-5 and beyond

Rumor has it that OpenAI plans to release an intermediate step such as GPT-4.5 this summer. GPT-5 would then more likely follow at the end of the year.

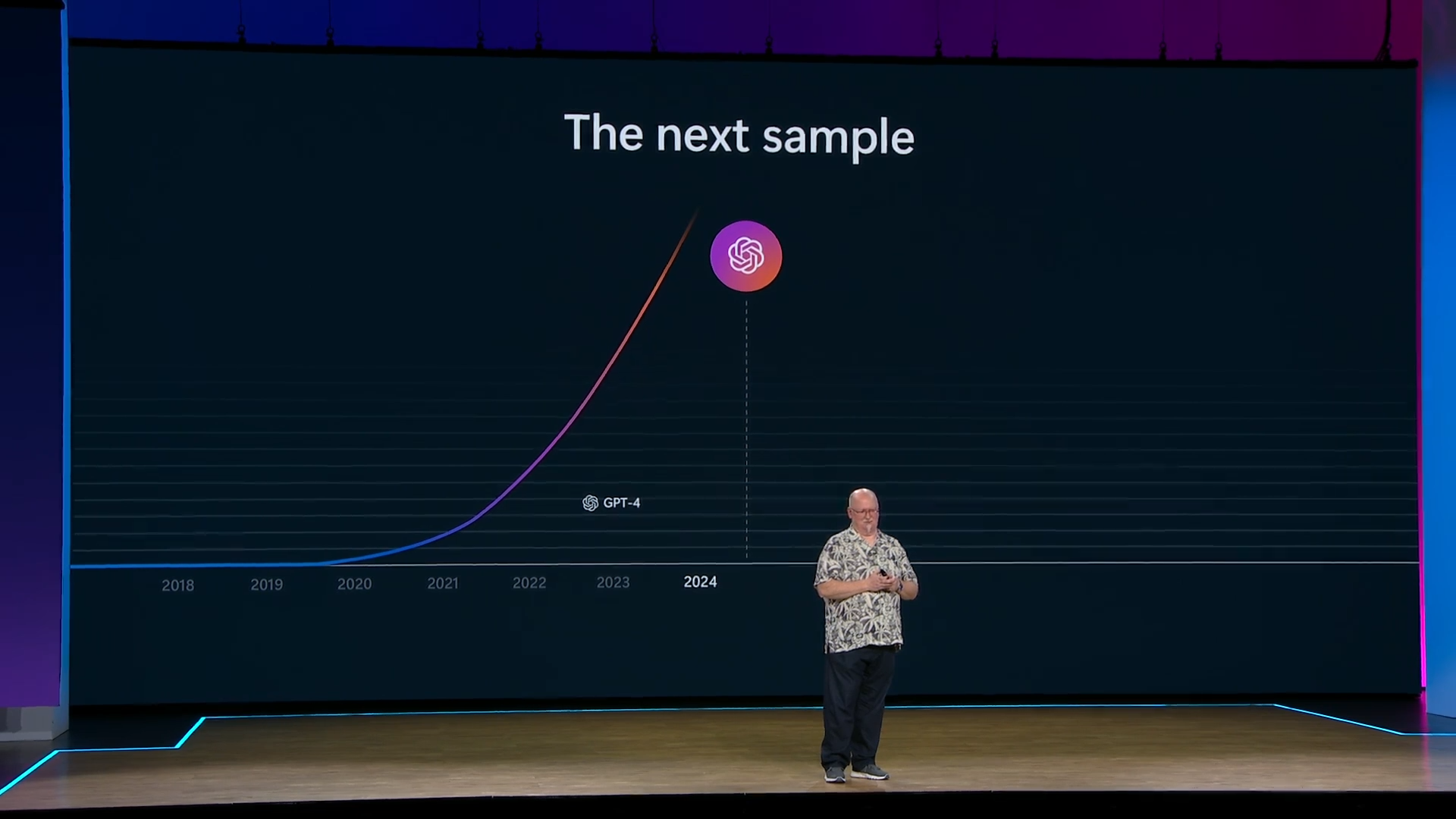

At Microsoft's Build developer conference, Microsoft CTO Kevin Scott showed a slide with an OpenAI model to be released in 2024. Although the axes are not labeled, the image suggests a big jump compared to GPT-4.

An OpenAI researcher showed a similar graph at the Viva Technology tech festival in Paris. Here, the model to be released in 2024 was called "GPT-Next" and also ranked well above GPT-4 in terms of capabilities.

"Recently" is a rather flexible term, but the new flagship could even be the successor to GPT-5. The training phase is likely to take several months, followed by functional and safety testing, which could also take months. If GPT-5 is indeed planned for the end of the year, a model whose training has only just begun would more plausibly be its successor.

Correction: An earlier version of this article stated that OpenAI had disbanded its Super AI team, omitting the word "alignment". What was meant was the Super AI Alignment Team. I apologize for the confusion.