Runway adds new image model "Frames" to its AI video toolset

Runway has just launched Frames, a new AI image model that gives the company more independence from tools like Midjourney.

The company says Frames offers finer control over visual styles and produces more detailed images than other image generators. The model lets users create consistent visual styles across multiple images, which helps maintain a unified look in larger projects.

While Runway has shared several examples of what Frames can do, it hasn't yet released technical details about how the model works or what data it was trained on.

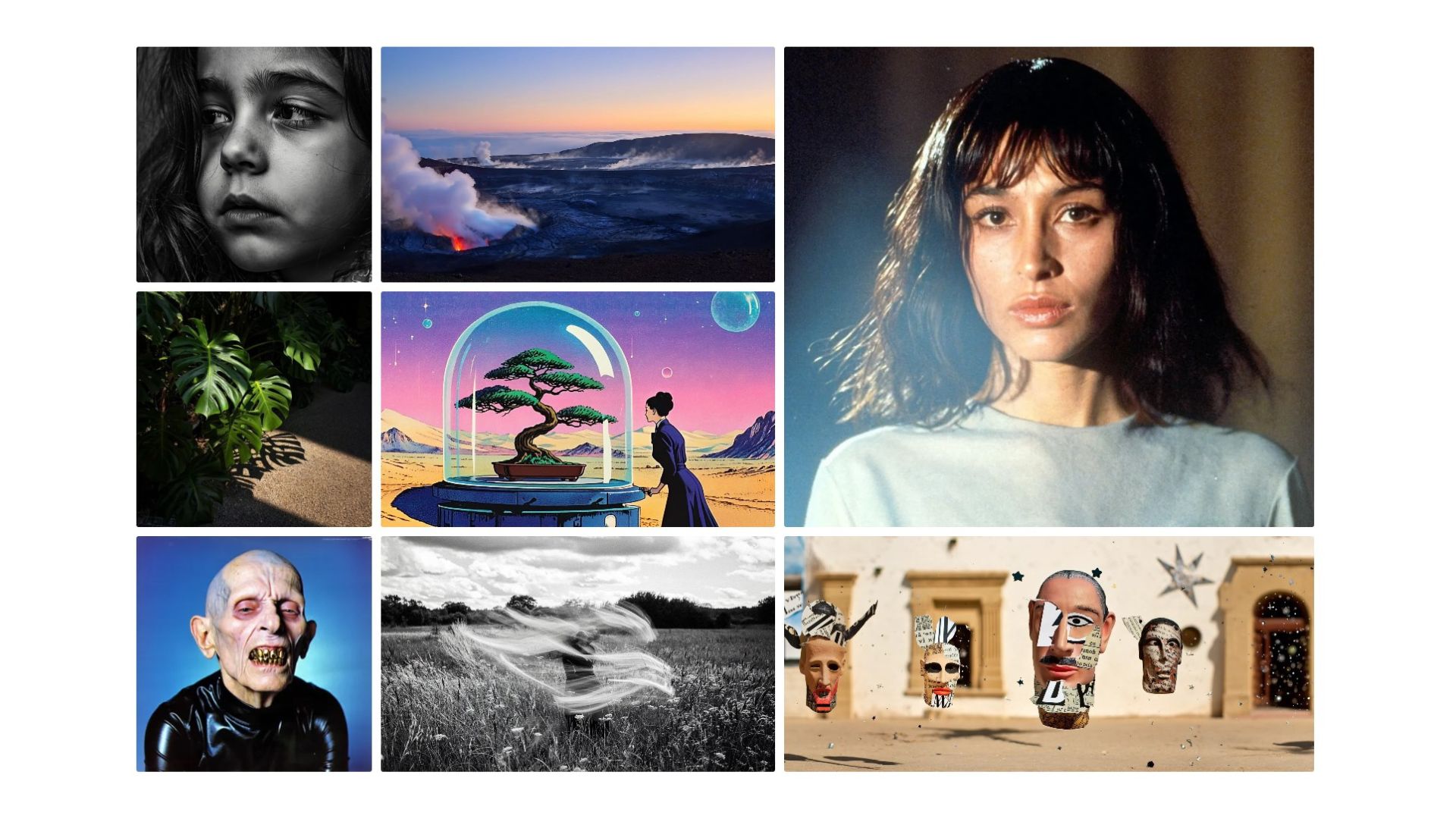

"Mise-en-scène": Cinema-inspired digital portraits with dramatic lighting. | Video: RunwayML

"1980s SFX Makeup": Creature designs that look like practical effects from 1980s movies. | Video: RunwayML

"Majestic Animals and Dramatic Photography": Nature photography mixed with bold typography and rich colors. | Video: RunwayML

Retro anime with sci-fi elements, featuring bold lines and space backgrounds. | Video: RunwayML

The company says Frames can handle many other styles too, from modern portraits to vintage photos, still lifes, collages, and product photography.

For now, Runway is making Frames available only to select users of its Gen-3 Alpha video generation model, which launched last June. The pairing makes sense: since July, Gen-3 Alpha has let users start from a reference image to guide video generation, which gives more control over the output than text prompts alone.

RunwayML and Midjourney go head-to-head

By building its own high-quality image generator, Runway reduces its users' reliance on Midjourney and other AI image generators. But Midjourney isn't standing still. It's working on a video model that could compete directly with Runway's core business, which gives Runway another good reason to upgrade its AI image generation.

Runway has grown into a major player in AI video technology, with a valuation in the billions. Its browser-based tools serve both everyday users and production companies. The company keeps adding features, most recently tools to adjust video aspect ratios, control the camera, and animate faces.

- Get the latest AI news from The Decoder.