Coconut from Meta lets language models think without words

A research team from Meta and UC San Diego has developed a new AI method called "Coconut" that lets language models reason in a continuous latent space rather than in natural language. In tests, it beat the established chain-of-thought approach on logical reasoning tasks while generating far fewer tokens.

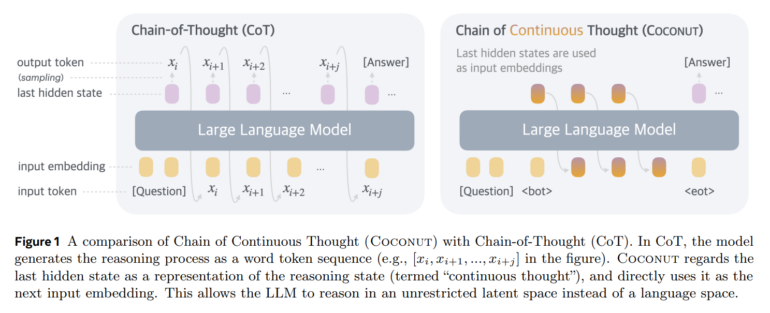

The technique, short for Chain of Continuous Thought, lets AI models reason in an unrestricted latent space instead of spelling out each thought as a natural language step.

According to the study, Coconut works similarly to the Chain-of-Thought method, where AI models think step by step in natural language. The key difference: Instead of decoding each thought step into words, the model feeds its last hidden state directly back in as the input for the next step.
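The difference between the two modes can be sketched in a few lines of Python. This is a toy illustration, not the researchers' code: `toy_model`, `decode`, the random weights and the five-token vocabulary are all hypothetical stand-ins for a real LLM, chosen only so the example runs without extra libraries.

```python
import math
import random

HIDDEN = 4
VOCAB = ["a", "b", "c", "d", "e"]

random.seed(0)
# Hypothetical stand-ins for an LLM's embedding table and weights.
EMBED = {tok: [random.uniform(-1, 1) for _ in range(HIDDEN)] for tok in VOCAB}
W = [[random.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(HIDDEN)]


def toy_model(inputs):
    """Stand-in for a transformer: map a sequence of input vectors to a last hidden state."""
    state = [0.0] * HIDDEN
    for vec in inputs:
        mixed = [s + v for s, v in zip(state, vec)]
        state = [math.tanh(sum(w * m for w, m in zip(row, mixed))) for row in W]
    return state


def decode(hidden):
    """Pick the token whose embedding has the largest dot product with the hidden state."""
    return max(VOCAB, key=lambda t: sum(h * e for h, e in zip(hidden, EMBED[t])))


def chain_of_thought_step(inputs):
    """Language mode: hidden state -> word token -> that token's embedding becomes the next input."""
    token = decode(toy_model(inputs))
    return inputs + [EMBED[token]], token


def continuous_thought_step(inputs):
    """Latent mode: the hidden state itself becomes the next input; no token is produced."""
    return inputs + [toy_model(inputs)]


def answer_with_latent_thoughts(prompt_tokens, num_thoughts):
    """Run a fixed number of latent steps, then decode a single answer token."""
    inputs = [EMBED[t] for t in prompt_tokens]
    for _ in range(num_thoughts):
        inputs = continuous_thought_step(inputs)
    return decode(toy_model(inputs))


print(answer_with_latent_thoughts(["a", "b"], num_thoughts=3))
```

In the language mode, every intermediate step is squeezed through a single word choice. In the latent mode, the full hidden vector is passed along, so nothing is discarded between steps.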

"Most word tokens are primarily for textual coherence and not essential for reasoning," the researchers explain in their study. At the same time, some critical words require complex planning and create major challenges for AI models.

Better results on complex tasks

The researchers tested Coconut on three different task types and compared results directly with the established Chain-of-Thought (CoT) method. On mathematical word problems (GSM8k), Coconut achieved 34.1 percent accuracy – below CoT (42.9 percent) but well above the baseline without thought chains (16.5 percent).

However, logical reasoning showed different results: In the ProntoQA test, where AI systems must make logical connections between concepts, Coconut reached 99.8 percent accuracy, outperforming CoT (98.8 percent). The gap was even wider on the newly developed ProsQA test, which requires extensive planning ability: Coconut achieved 97 percent, while CoT only reached 77.5 percent.

Coconut also needed significantly fewer tokens than CoT: only 9 versus 92.5 tokens for ProntoQA and 14.2 versus 49.4 tokens for ProsQA. On these logical reasoning tasks, the new method was both more accurate and more efficient than the traditional approach, although CoT kept its lead on the math problems.
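To put the token counts in proportion, the short calculation below divides the CoT figure by the Coconut figure for each benchmark. It uses only the numbers quoted above and assumes they are average generated tokens per problem; the helper name `reduction_factor` is made up for this example.

```python
# Token counts as quoted in the article (assumed: average tokens generated per problem).
tokens = {
    "ProntoQA": {"coconut": 9.0, "cot": 92.5},
    "ProsQA": {"coconut": 14.2, "cot": 49.4},
}


def reduction_factor(cot_tokens, coconut_tokens):
    """How many times more tokens CoT generates than Coconut."""
    return cot_tokens / coconut_tokens


for task, t in tokens.items():
    factor = reduction_factor(t["cot"], t["coconut"])
    print(f"{task}: CoT uses about {factor:.1f}x as many tokens as Coconut")

# ProntoQA: CoT uses about 10.3x as many tokens as Coconut
# ProsQA: CoT uses about 3.5x as many tokens as Coconut
```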

The researchers also found the system can process multiple possible next thought steps in parallel, gradually eliminating incorrect options before generating tokens.
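The researchers compare this behavior to breadth-first search. As a rough analogy only, here is classic breadth-first search on a small made-up graph: several branches are kept open at once, and dead ends simply drop out of the frontier. The graph, the node names and the function `first_reachable` are invented for illustration and say nothing about how Coconut represents options internally.

```python
from collections import deque


def first_reachable(graph, start, candidates):
    """Breadth-first search: expand the frontier level by level and
    return the first candidate node reached, or None if none is reachable."""
    frontier = deque([start])
    seen = {start}
    while frontier:
        node = frontier.popleft()
        if node in candidates and node != start:
            return node
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return None


# Hypothetical toy graph: two branches from "start", only one leads to a candidate.
graph = {
    "start": ["branch_a", "branch_b"],
    "branch_a": ["dead_end"],
    "branch_b": ["step_2"],
    "step_2": ["target"],
}

print(first_reachable(graph, "start", {"target", "distractor"}))  # target
```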

Training remains challenging

The experiments also revealed limitations: Without special training methods, the system didn't learn to think effectively in continuous latent space. For their study, the scientists used a pre-trained GPT-2 model as a foundation. They developed a multi-stage training curriculum: At each stage, one more language-based thought step is removed and replaced with a set number of continuous thoughts. As training progressed, the proportion of latent thinking increased while linguistic reasoning gradually decreased.
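A minimal sketch of how such a curriculum could assemble its training sequences is shown below. The article does not say how many continuous thoughts stand in for each removed step, so `thoughts_per_step` is a hypothetical parameter, and `<thought>` is a made-up placeholder marking positions where the model would receive its own hidden state instead of a word.

```python
def build_stage_example(question, reasoning_steps, answer, stage, thoughts_per_step=1):
    """Return the training sequence for one curriculum stage.

    At stage k, the first k language reasoning steps are dropped and replaced by
    k * thoughts_per_step latent-thought placeholders. Stage 0 is plain chain-of-thought.
    """
    replaced = min(stage, len(reasoning_steps))
    latent = ["<thought>"] * (replaced * thoughts_per_step)
    remaining = reasoning_steps[replaced:]
    return [question] + latent + remaining + [answer]


steps = ["step 1", "step 2", "step 3"]
for stage in range(len(steps) + 1):
    print(stage, build_stage_example("question", steps, "answer", stage))

# 0 ['question', 'step 1', 'step 2', 'step 3', 'answer']
# 1 ['question', '<thought>', 'step 2', 'step 3', 'answer']
# 2 ['question', '<thought>', '<thought>', 'step 3', 'answer']
# 3 ['question', '<thought>', '<thought>', '<thought>', 'answer']
```

By the final stage, no language reasoning is left in the sequence, which matches the shift from linguistic to latent thinking described above.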

The researchers believe the results point to significant potential for advancing AI systems. "Through extensive experiments, we demonstrated that Coconut significantly enhances LLM reasoning capabilities," the authors write in their study. They found it particularly promising that the system independently developed effective thinking patterns similar to breadth-first search without explicit training.

Looking ahead, the researchers see great potential in pre-training larger language models with continuous thoughts. This could help models transfer their thinking abilities to a broader range of tasks. While the current version of Coconut remains limited to certain task types, the researchers believe their findings will lead to more advanced machine thinking systems.
