PhotoDoodle AI turns your photos into whimsical works of art with just a few prompts

Key Points

- Researchers from universities in China and Singapore, as well as ByteDance, have developed PhotoDoodle, an AI that learns different styles from a few sample images and accurately applies editing prompts to input images.

- PhotoDoodle is based on Black Forest Labs' Flux.1 image generation model, using its architecture and pre-trained parameters. A general image processing model called OmniEditor is first trained with a custom dataset and then adapted to specific artist styles.

- In experiments, PhotoDoodle achieved better results than existing methods in implementing editing prompts. The researchers have published a dataset of over 300 image pairs in six styles to encourage further research in this area.

Researchers from universities in China and Singapore, along with ByteDance, have created PhotoDoodle, an impressive new AI system for image editing. The model can learn different artistic styles from just a few sample images and then accurately implement specific editing instructions.

PhotoDoodle builds on the Flux.1 image generation model developed by German startup Black Forest Labs, leveraging its diffusion transformer architecture and pre-trained parameters.

Building on Flux.1's foundation

The researchers first developed OmniEditor, a version of Flux.1 modified for image processing using LoRA (Low-Rank Adaptation). This technique doesn't change all the network's weights directly but adds small, specialized matrices instead. These matrices can be trained without drastically altering the original model, enabling everything from small concept changes to complete style transformations. The latter requires larger versions of these typically small networks, as in OmniEditor's case.

The team likely sourced the necessary SeedEdit dataset from experiments with ByteDance's image editing model of the same name, which was introduced last year. The paper doesn't provide specific details about the dataset's origin.

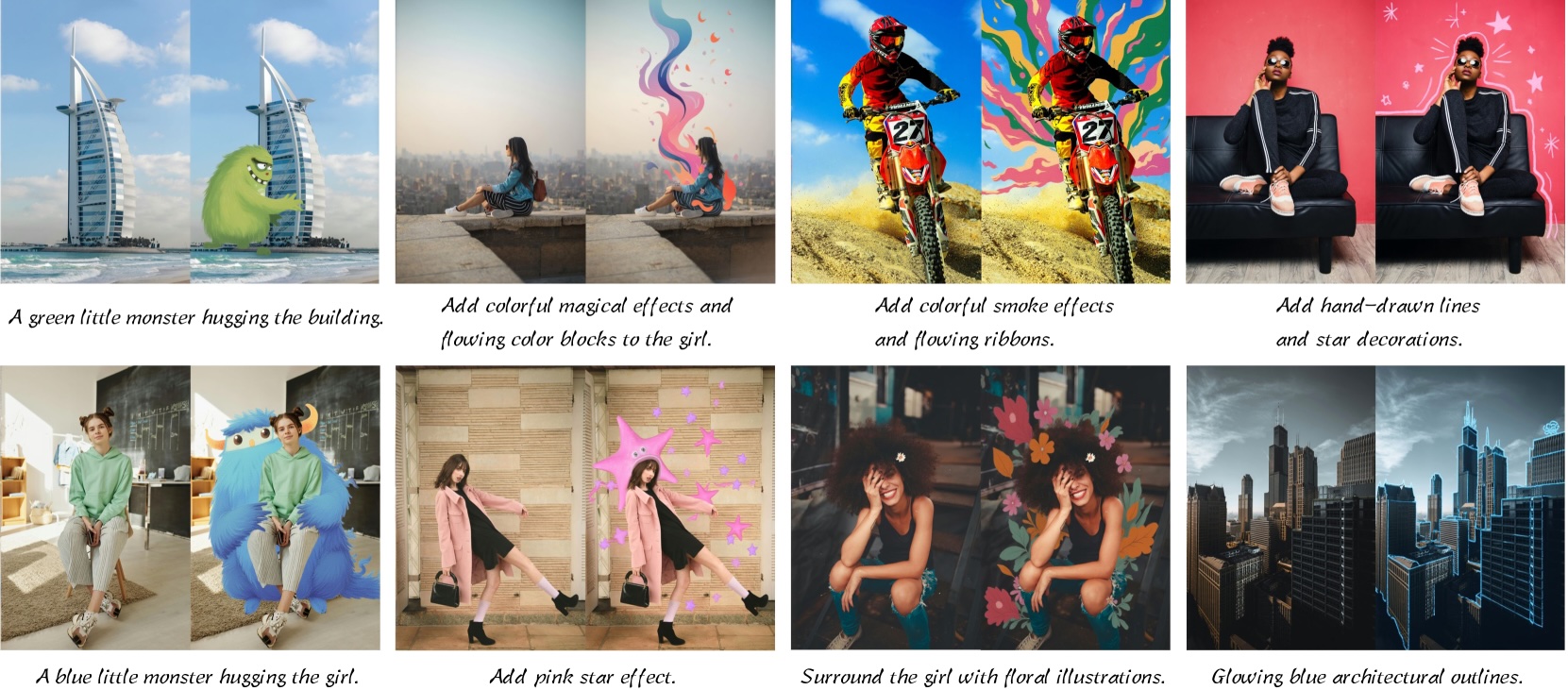

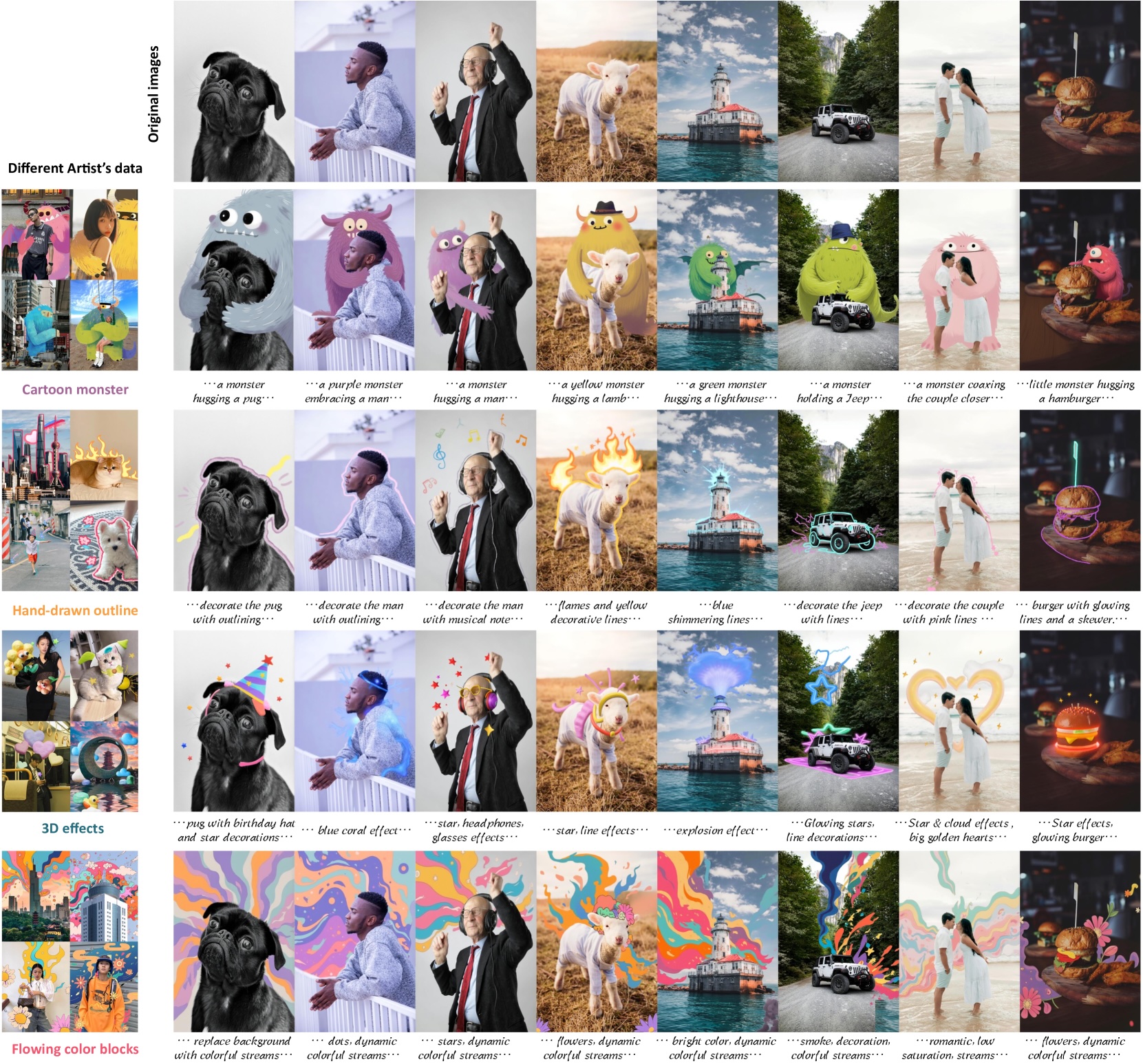

The researchers then trained OmniEditor to replicate individual artists' styles using a LoRA variant called EditLoRA. By studying selected pairs of images, EditLoRA learns the nuances of each artistic style. According to the paper, the training data was created in collaboration with the artists themselves.

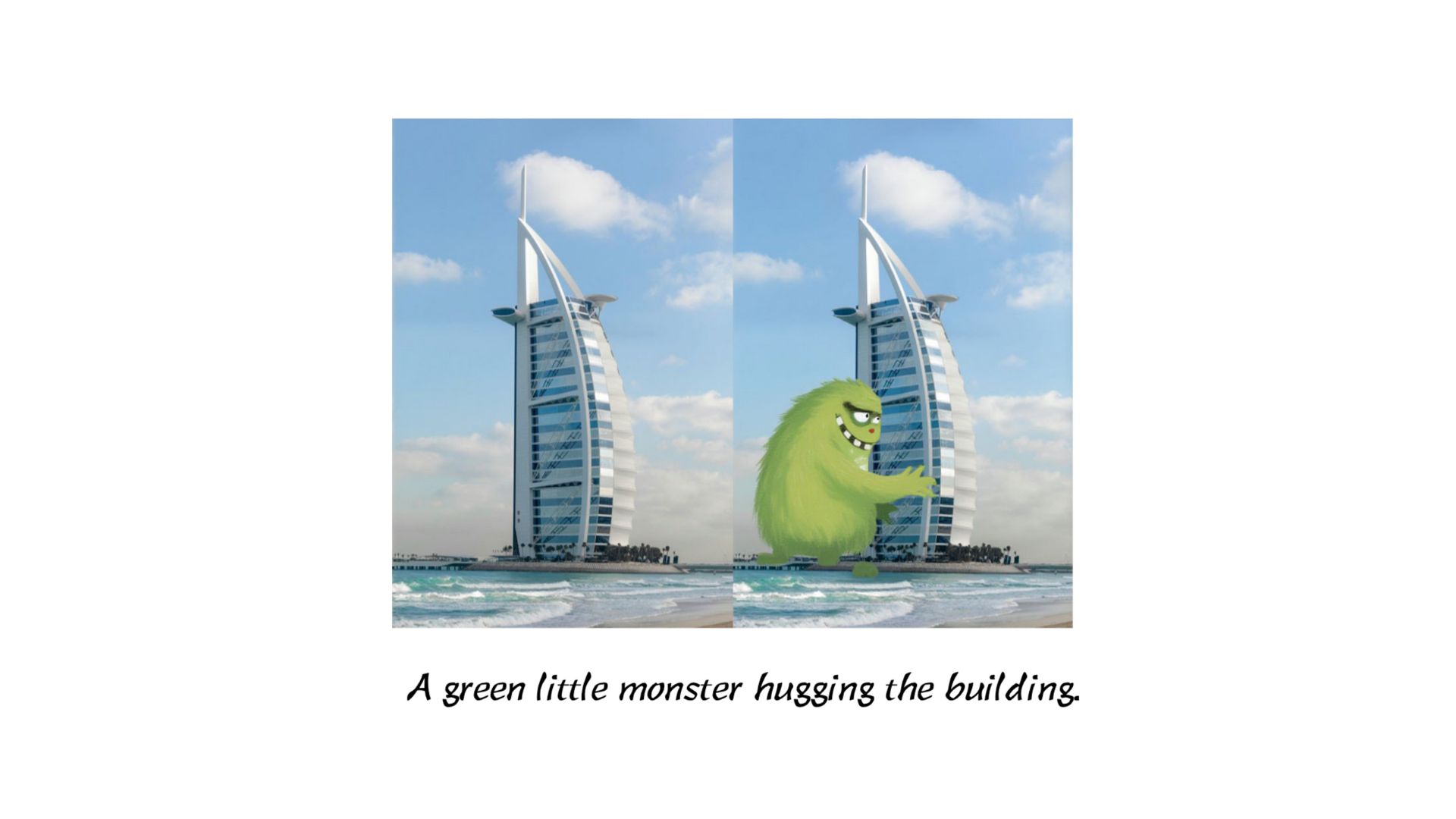

This approach solves a critical problem: harmoniously inserting decorative elements into images while maintaining the right perspective, context, and desired style. The researchers note that previous methods, which either changed an entire image's style or only edited small areas, couldn't adequately address this challenge.

How position encoding cloning keeps everything in place

A key component of PhotoDoodle is "position encoding cloning." In simple terms, the AI remembers the exact position of every pixel in the original image.

When adding new elements, PhotoDoodle uses this stored position information to place them precisely and blend them seamlessly into the image. This technique requires no additional parameter training, making the process more efficient.

The system also requires "noise-free" input data - meaning the original image must be high quality to prevent unintentional background alterations during processing.

Setting a new standard for image editing

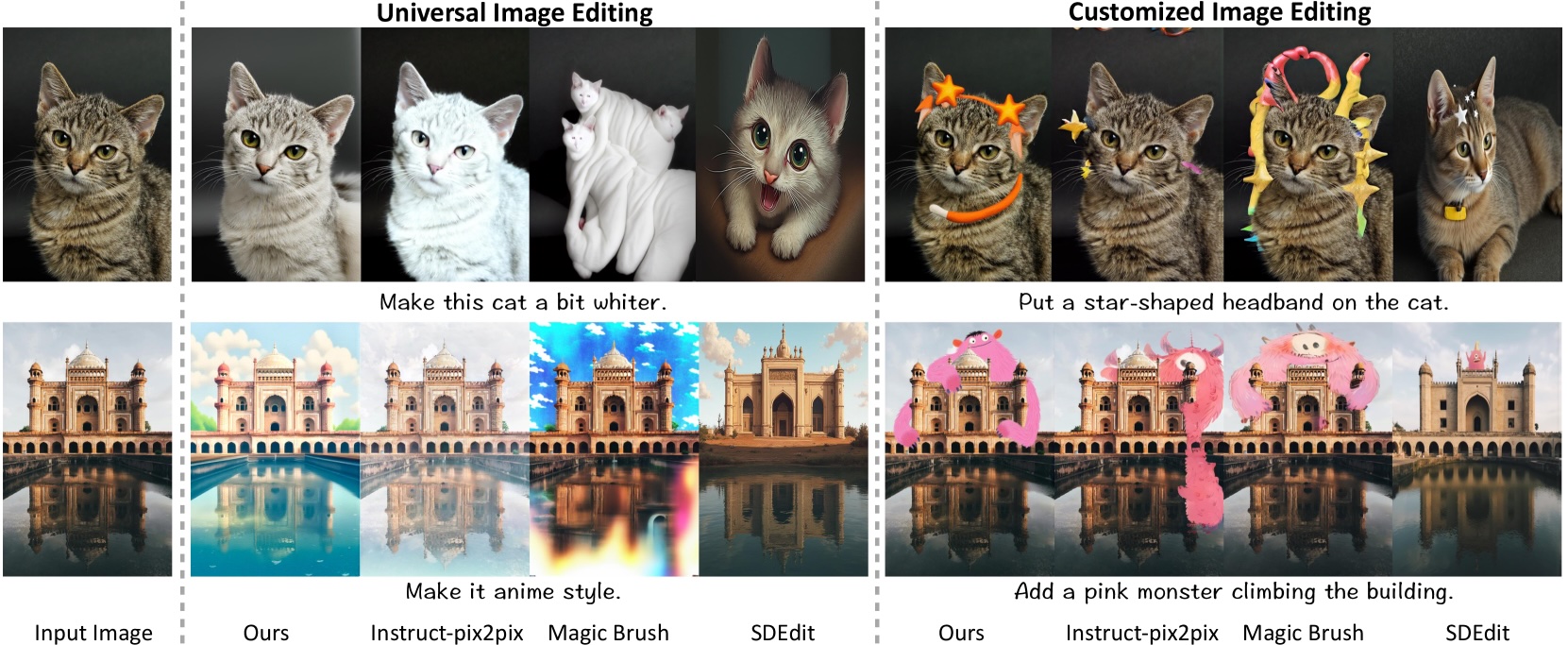

The team conducted extensive testing to demonstrate PhotoDoodle's capabilities. The system accurately implemented prompts like "Make the cat a little whiter" and "Add a pink monster climbing on the building."

When compared to existing methods, PhotoDoodle achieved superior results across various benchmarks measuring aspects like the similarity between images and text descriptions. It significantly outperformed comparison models in both targeted edits and global image changes.

Looking toward single-image training

The research team acknowledges that PhotoDoodle currently requires dozens of image pairs and thousands of training steps. Their next goal is to develop a system that can learn styles from just a single pair of images.

To support further research in this area, the scientists have published a dataset containing six different artistic styles and more than 300 image pairs. The code is available on GitHub.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe now