Microsoft struggled with critical Copilot vulnerability for months

A major security flaw in Microsoft 365 Copilot allowed attackers to access sensitive company data with nothing more than a specially crafted email—no clicks or user interaction required. The vulnerability, named "EchoLeak," was uncovered by cybersecurity firm Aim Security.

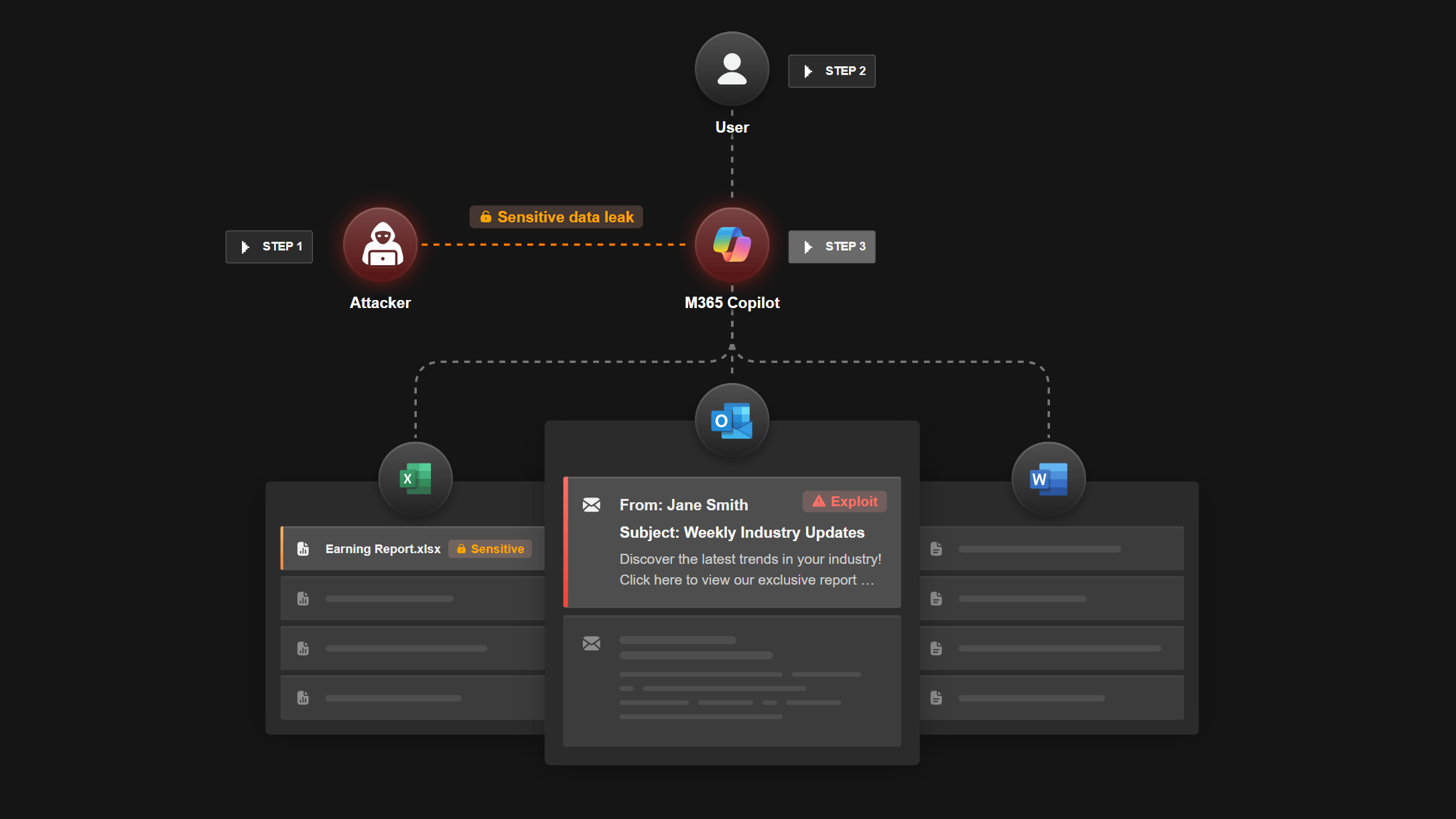

Copilot, Microsoft's AI assistant for Office apps like Word, Excel, PowerPoint, and Outlook, is designed to automate tasks behind the scenes. But that very capability made it vulnerable: a single email containing hidden instructions could prompt Copilot to search internal documents and leak confidential information—including content from emails, spreadsheets, or chats.

Because Copilot automatically scans emails in the background, the assistant interpreted the manipulated message as a legitimate command. The user never saw the instructions or knew what was happening. Aim Security describes it as a "zero-click" attack—a type of vulnerability that requires no action by the victim.

Source: Aim Security

Microsoft patches the vulnerability

Microsoft told Fortune that the issue has now been fixed and that no customers were affected. "We have already updated our products to mitigate this issue, and no customer action is required. We are also implementing additional defense-in-depth measures to further strengthen our security posture," a company spokesperson said. Aim Security reported the discovery responsibly.

Still, Aim says it took five months to fully resolve the issue. Microsoft received the initial warning in January 2025 and rolled out a first fix in April, but new problems surfaced in May. Aim held back its public disclosure until all risks were eliminated.

The incident highlights the risks that come with deploying AI agents and generative AI in business environments. Adir Gruss, CTO of Aim Security, sees it as more than just a simple bug—he calls it a structural problem in the architecture of AI agents. The flaw is an example of what's known as an "LLM scope violation," where a language model is tricked into processing or leaking information outside its intended permission boundaries.
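To make the idea of a permission boundary concrete, here is a minimal sketch in Python. It is not how Copilot or Aim Security's tooling works. The `Document` type, the sensitivity labels, and the `readable_documents` function are hypothetical names invented for illustration. The assumed policy is simple: once untrusted input is present in the model's context, the agent may only read public material in that processing step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    name: str
    sensitivity: str  # hypothetical labels: "public" or "internal"


def readable_documents(documents, context_has_untrusted_input):
    """Return the documents the agent may read in this processing step."""
    if context_has_untrusted_input:
        # Assumed policy: untrusted input in context narrows the scope
        # to public documents only.
        return [d for d in documents if d.sensitivity == "public"]
    return list(documents)
```

Under this assumed policy, a crafted email could still reach the model, but internal files would not be in reach during the same step. A scope violation is what happens when no such boundary is enforced.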

Gruss warns that similar vulnerabilities could affect other AI agents, including Salesforce's Agentforce and those built on Anthropic's Model Context Protocol (MCP). He told Fortune that he "would be terrified" if he led a company looking to deploy an AI agent in production today. He compares the situation to the 1990s, when software design flaws led to widespread security problems.

The root of the problem, according to Gruss, is that current AI agents handle both trusted and untrusted data in the same processing step. Fixing this will require either a new system architecture or, at the very least, a clear separation between instructions and data sources. Early research on solutions is already underway.
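The difference between mixing and separating instructions and data can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of any vendor's fix. `Trust`, `Segment`, `build_naive_prompt`, and `build_separated_prompt` are hypothetical names, and the sketch assumes every piece of text is tagged with its origin when it enters the system.

```python
from dataclasses import dataclass
from enum import Enum


class Trust(Enum):
    TRUSTED = "trusted"      # e.g. the user's own request
    UNTRUSTED = "untrusted"  # e.g. an inbound email from outside


@dataclass(frozen=True)
class Segment:
    text: str
    trust: Trust


def build_naive_prompt(segments):
    # Everything is joined into one string, so the model receives
    # instructions and external data in the same channel.
    return "\n".join(s.text for s in segments)


def build_separated_prompt(segments):
    # Hypothetical layout: only trusted text is passed as instructions;
    # untrusted text is passed separately, as data to be read.
    instructions = [s.text for s in segments if s.trust is Trust.TRUSTED]
    data = [s.text for s in segments if s.trust is Trust.UNTRUSTED]
    return {"instructions": instructions, "data": data}
```

Separation of this kind is a prerequisite, not a complete defense: the model, or the system around it, still has to refuse to act on anything that arrives in the data channel.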
