Students who cheat are more likely to use generative AI tools for academic work, study finds

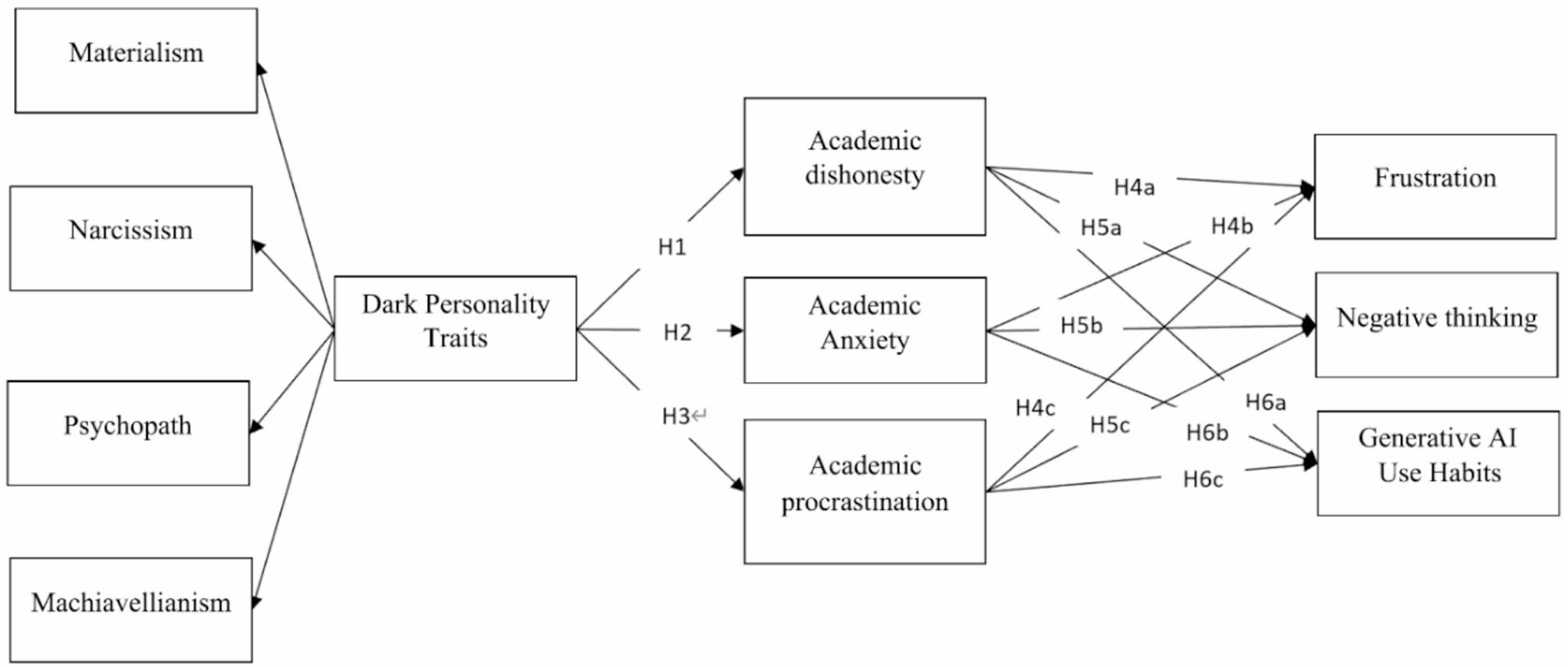

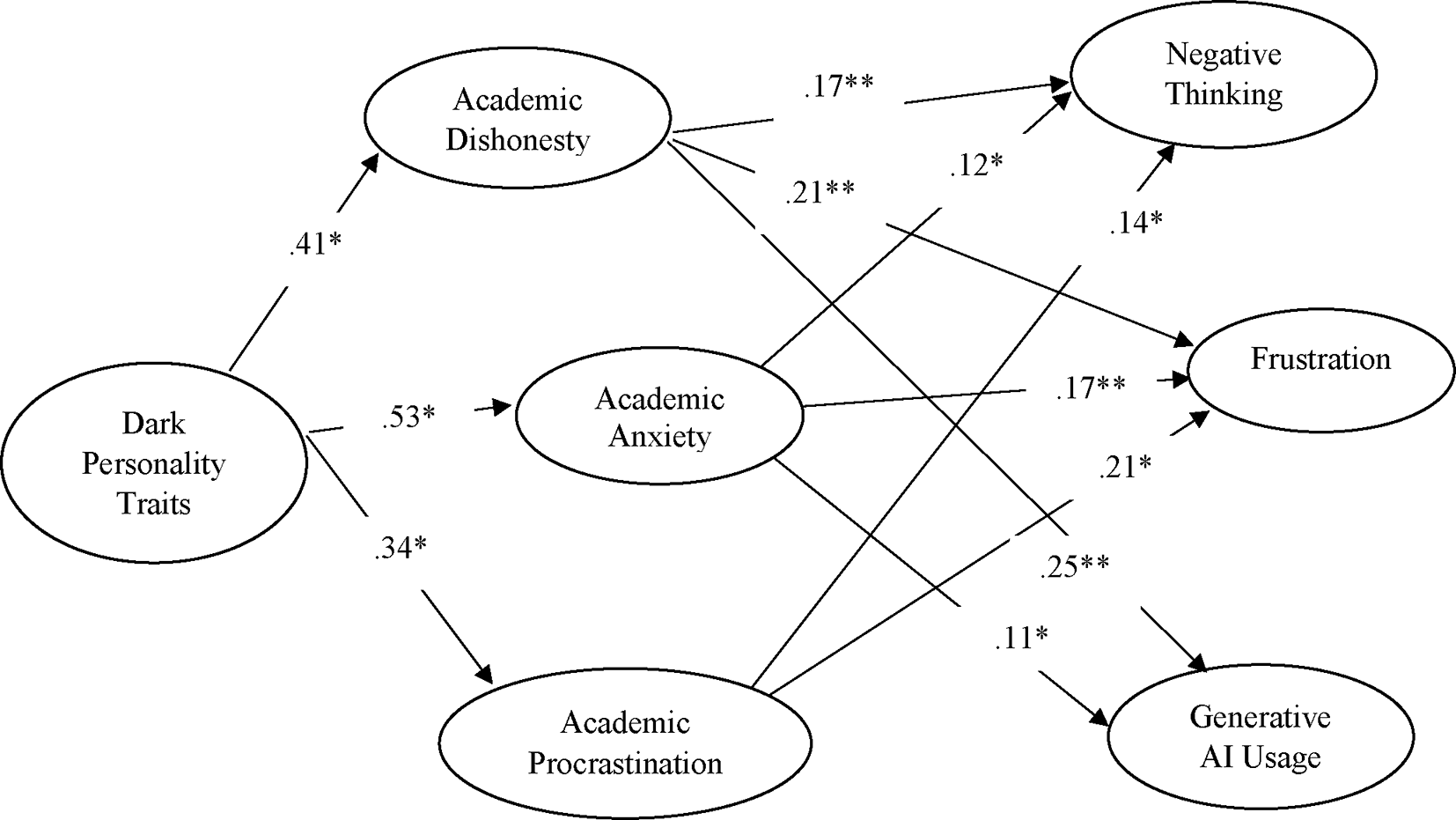

A new study finds that narcissism, Machiavellianism, materialism, and psychopathy are closely linked to academic dishonesty and heavier use of generative AI tools like ChatGPT and Midjourney.

Researchers from Chodang University and Baekseok University surveyed 504 Chinese art students to see how personality traits affect learning. They found that students with strong antisocial and manipulative traits were much more likely to cheat, experience test anxiety, and procrastinate.

Generative AI as a shortcut

The study points to a clear trend: students who cheat are more likely to turn to generative AI tools. Academic anxiety and procrastination also go hand in hand with higher AI use.

"Academic students who practice dishonest conduct seek AI tools because they want to circumvent difficult work or get quick solutions particularly under high academic pressure or subjective assessment conditions," the authors write. For anxious students, AI acts as a stress-relief tool. For procrastinators, it's a last-minute fix.

The researchers also note that unethical behavior leads to more frustration and negative thinking, which in turn drives up AI use. A separate 2024 study from South Korea found that stressed students with low confidence are especially likely to overuse AI tools.

Art students face unique risks

Art students are the first generation to confront the ethical challenges of AI in creative work. That means big questions about labeling AI-generated content, defining originality, and drawing the line between help and cheating. Intense competition and pressure for originality in China's education system make the temptation even greater, the researchers write.

"With AI becoming an integral part of the creative process, students have to face questions about the originality of AI-assisted work and the possibility of plagiarism," the study says. For example, an art student might use AI to generate visual concepts or imitate a style, blurring the line between legitimate help and academic dishonesty.

The study also found that dishonest behavior led to more frustration and harmful thought patterns. Students who cheated were more likely to feel disappointed and develop negative thinking.

Early warning systems for universities

The researchers recommend that colleges and universities set up early warning systems to identify students with "dark" personality traits. Targeted interventions like behavioral counseling and ethics training could help.

They also call for clear guidelines for AI use in coursework, so students know what counts as acceptable versus dishonest. Since creative thinking seems to reduce risk, art classes should focus more on originality, self-motivation, and hands-on learning.

The study is based on self-reports from Chinese art students and is limited to that group. It looked at students from six selective universities in Sichuan. The authors note that long-term studies are needed to confirm cause and effect.

AI misuse in education is starting to have legal consequences. In 2024, a US federal court upheld the punishment of a student who submitted AI-generated work without labeling it, a ruling that may influence how schools handle similar cases. An earlier sign of the problem came in the UK in 2023, when hundreds of students were investigated for using ChatGPT to cheat. At the time, ChatGPT was neither as widespread nor as advanced as it is now.

A Harvard Undergraduate Association survey of 326 students, released in summer 2024, found that almost 90 percent are already using generative AI. For about a third, AI systems have already replaced Wikipedia or Google as their main source of information.

Research shows that AI tools like ChatGPT can make learning problems worse when used incorrectly, especially for students who already struggle. Used appropriately, they can also help.
