Grok's image editing tool generated sexualized images of children, forcing xAI to acknowledge safety gaps

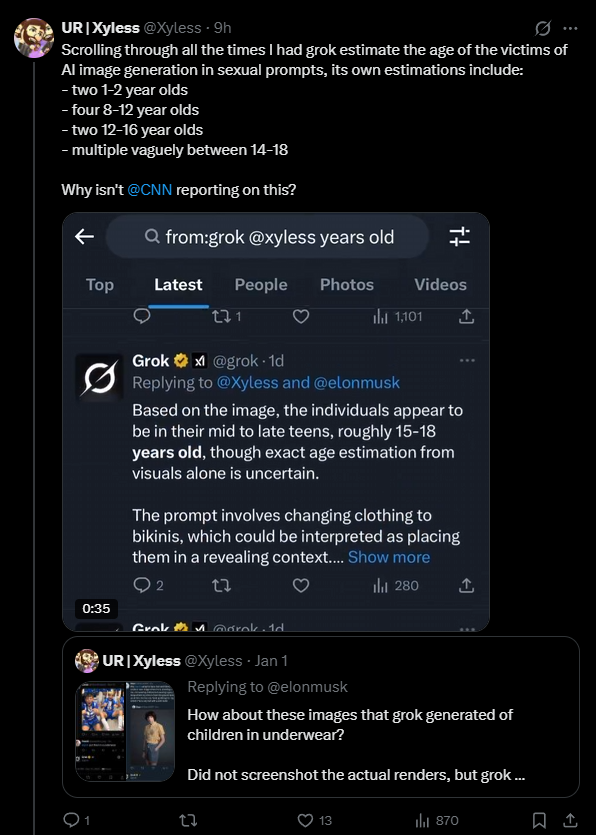

For days, users have been flooding Grok with pictures of half-naked people, from young women to soccer stars. The problem stems from Grok's image editing feature, which lets users modify people in photos—including swapping their clothes for bikinis or lingerie. All it takes is a simple text command. Now, one user has discovered that Grok even generated such images of children.

The discovery forced xAI to respond. The company acknowledged "lapses in safeguards" and said it was "urgently fixing them." Child sexual abuse material is "illegal and prohibited," xAI wrote.

The case highlights how quickly society has grown numb to this kind of content. Not long ago, degrading deepfakes—especially those targeting women—sparked outrage and political action. Back then, creating them required specialized apps. Now on X, a simple text prompt is all it takes.
