OpenAI signs $10 billion deal with Cerebras Systems

Key Points

- OpenAI has signed a deal worth over $10 billion with chip startup Cerebras Systems to purchase up to 750 megawatts of computing capacity over three years to power ChatGPT, which now has over 900 million weekly users.

- Cerebras uses an entire silicon wafer as a single chip with 900,000 AI cores, specialized for fast inference.

- The partnership is part of OpenAI's strategy to find cheaper alternatives to Nvidia chips, as the company also develops its own chips with Broadcom and has signed a deal with AMD.

The ChatGPT maker inks a deal worth more than $10 billion with Cerebras Systems. The partnership aims to expand OpenAI's compute capacity.

OpenAI has signed a multibillion-dollar agreement with chip startup Cerebras Systems, according to a Wall Street Journal report. The company plans to purchase up to 750 megawatts of computing capacity over three years in a deal valued at more than $10 billion.

The Cerebras chips will power ChatGPT. OpenAI CEO Sam Altman is a personal investor in Cerebras, and court documents from the legal battle between Altman and Elon Musk reveal that the two companies had considered a partnership as far back as 2017.

OpenAI says it now has well over 900 million weekly users. Company leadership has repeatedly emphasized that it is dealing with a "severe shortage" of computing resources.

Wafer-scale chips could ease OpenAI's compute crunch

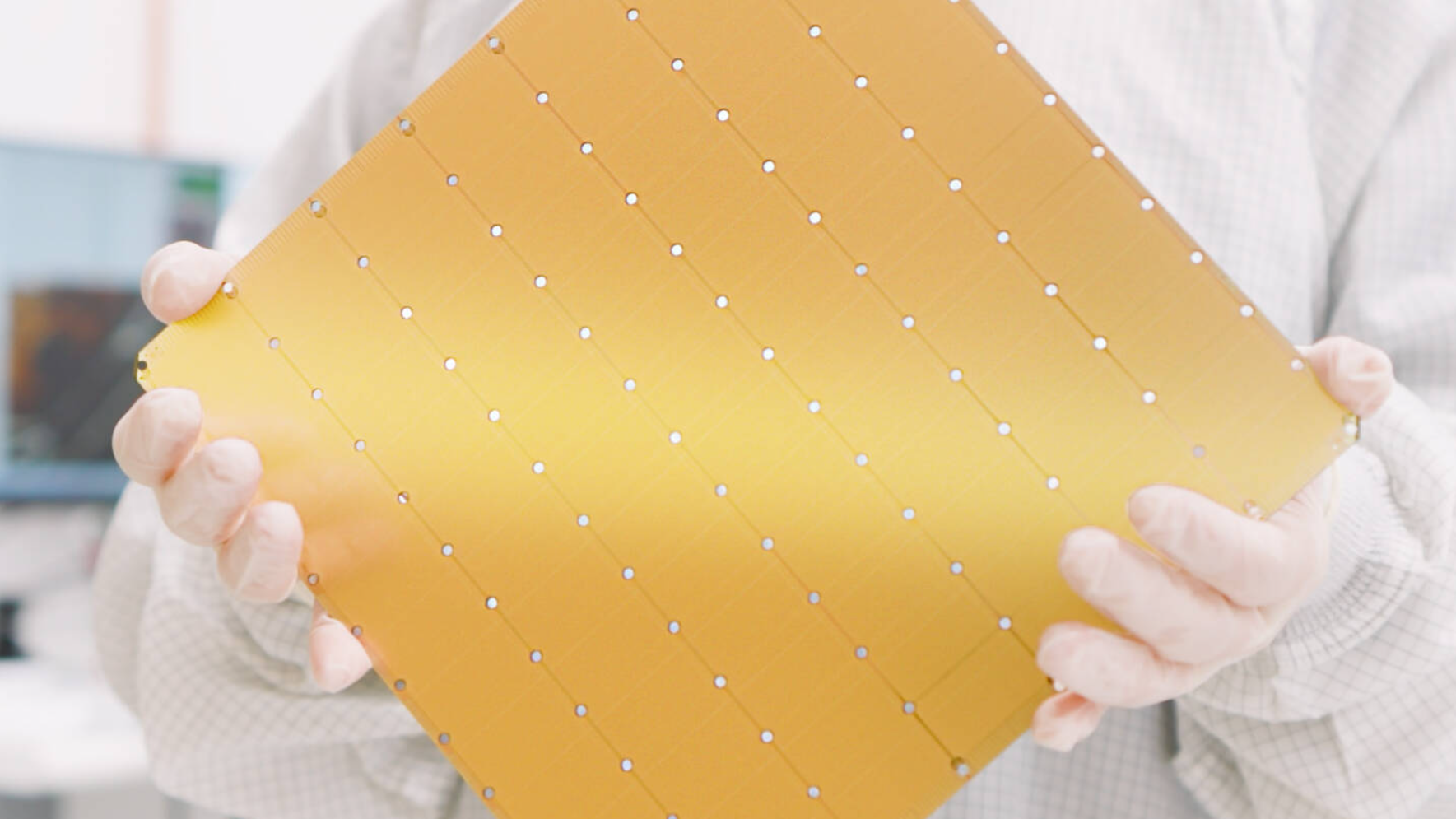

Founded roughly a decade ago, Cerebras takes an unconventional approach to chip design. Instead of manufacturing many small processors, the company uses an entire silicon wafer as a single, unified chip called the Wafer Scale Engine (WSE).

The Wafer Scale Engine packs hundreds of thousands of AI-optimized compute cores that communicate directly through on-chip connections in the silicon. This design avoids the bottlenecks that traditional systems face when many individual GPUs need to talk to each other. The first-generation WSE-1 launched in 2020, followed by WSE-2 about a year later. The third-generation WSE-3 features 900,000 AI cores and 4 trillion transistors.

Cerebras positions its systems as a fast inference alternative to GPU clusters from vendors like Nvidia. According to the company, a single CS-3 system can replace large GPU clusters made up of hundreds or thousands of graphics processors.

But Cerebras has struggled to gain traction in the semiconductor market. When the company filed for an IPO in 2024, most of its revenue came from a single customer: Abu Dhabi-based G42. Cerebras ultimately pulled its IPO and raised $1.1 billion privately instead. The company is now in talks for a funding round at a $22 billion valuation and is building multiple data centers across North America and Europe.

Deal signals OpenAI's push beyond Nvidia

The partnership is part of OpenAI's broader push to find cheaper alternatives to Nvidia chips. The company is working with Broadcom to develop its own chip and has signed a deal for AMD's MI450 chip.

For Nvidia, the Cerebras deal signals growing competition in the lucrative inference market. The chip giant signed a preliminary agreement with OpenAI in September for up to ten gigawatts of chips, though that deal hasn't been finalized yet. In December, Nvidia itself signed a $20 billion licensing agreement with chip startup Groq, which, like Cerebras, specializes in fast inference chips. Roughly 90 percent of Groq's workforce is moving to Nvidia as part of the deal.
