DALL-E 2 open-source alternative Stable Diffusion is now available

Stable Diffusion is an open-source alternative to OpenAI's DALL-E 2 that runs on your own graphics card.

A whole range of products is available if you want to use artificial intelligence to generate images from text input. Besides the forerunners, OpenAI's DALL-E 2 and the less capable Craiyon, Midjourney is especially popular.

Recently, the startup Stability AI announced the release of Stable Diffusion, a DALL-E 2-like system that was initially available only through a closed Discord server.

What makes Stable Diffusion special is that the powerful generative model, jointly developed by researchers from Stability AI, RunwayML, LMU Munich, EleutherAI and LAION, is open-source and runs on a standard consumer graphics card.

Stable Diffusion is available on many platforms

To train the Stable Diffusion model, Stability AI used 4,000 Nvidia A100 GPUs and a variant of the LAION-5B dataset. Stable Diffusion can therefore generate images of prominent people and other subjects that OpenAI does not allow with DALL-E 2.

After the Discord test phase, Stability AI opened access through the DreamStudio web interface. DreamStudio, however, applies an NSFW ("not safe for work") filter and places some restrictions on input. HuggingFace also offers a rudimentary web interface for Stable Diffusion.

Delighted to announce the public open source release of #StableDiffusion!

Please see our release post and retweet! https://t.co/dEsBX7cRHw Proud of everyone involved in releasing this tech that is the first of a series of models to activate the creative potential of humanity

- Emad (@EMostaque) August 22, 2022

The Stable Diffusion team has now published the fully trained model on HuggingFace, along with the related code on GitHub. Less than a week earlier, an older version of the model had already leaked on 4chan.

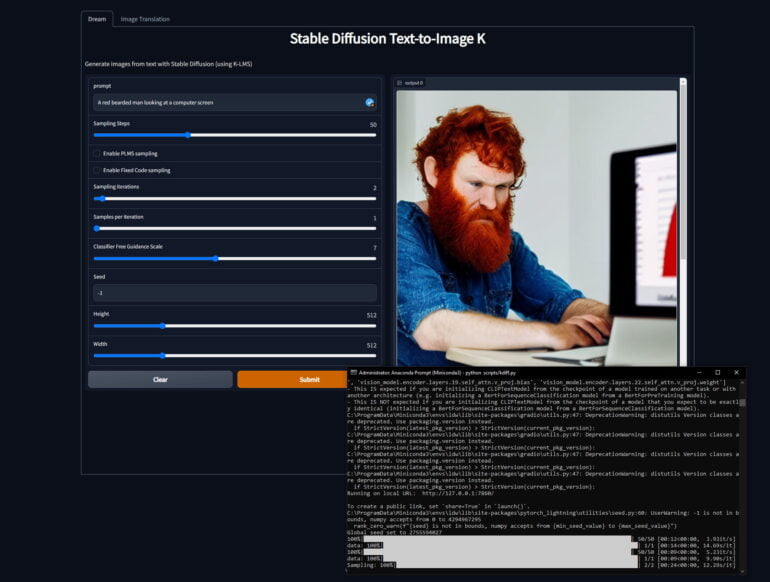

Stable Diffusion runs on a local graphics card with a graphical user interface

With the nearly four-gigabyte model and the associated repositories, anyone with an Nvidia graphics card with more than four gigabytes of VRAM can run Stable Diffusion locally. Higher image resolutions, however, require more VRAM.

AMD graphics cards are not officially supported, but they can be made to work with a few workarounds. Support for Apple's M1 chips is also expected in the future.

Thanks to the open-source community, Stable Diffusion can already be run with just a few lines of code in a local browser window with a functional interface (instructions for running Stable Diffusion locally). If you need help with prompts, try the excellent prompt builder.

Those without a suitable graphics card can continue to use DreamStudio or turn to one of the numerous Google Colab notebooks. More information and instructions can be found in the Reddit thread on running Stable Diffusion.

Midjourney's new beta model also uses Stable Diffusion as part of its graphics pipeline.

Midjourney just launched a beta version of their system, which combines Midjourney and Stable Diffusion. Here are a few examples. Made only by writing text prompts to their discord bot. To test it yourself, just write --beta after your Midjourney prompt. #midjourney #conceptart pic.twitter.com/Wj5PJ4npgR

- Martin Nebelong (@MartinNebelong) August 23, 2022

Stable Diffusion ushers in a media revolution

Many people will use Stable Diffusion to create interesting images. Some will create questionable material with specially adapted Stable Diffusion variants. But Stable Diffusion is more than that: the enhancements added within hours of the release, such as the user interface described above, are just the beginning.

The open-source community will develop numerous surprising applications that extend into new media formats. Future versions of Stable Diffusion will surpass the capabilities of the current one. Stability AI is already working on other open-source models, for example generative audio tools under its HarmonAI project.

There's a sense of optimism in open-source AI research because, until now, powerful generative AI systems have been limited by filters, restricted access, and hardware requirements. The release of Stable Diffusion marks the beginning of a new era in which the open-source community has a free hand, as noted German AI researcher Joscha Bach points out on Twitter.

The public release of the Stable Diffusion model is not just the death knell of the stock photo industry. Unless there are significant legal changes, an ecosystem of apps that let everyone generate and modify audio, 3d, animations, video will trigger a media revolution.

- Joscha Bach (@Plinz) August 22, 2022

The researcher predicts nothing less than a media revolution. Anyone who has followed the development of generative AI in recent years knows that Bach has good reasons for his prediction.
