Advanced voice feature draws heavy ChatGPT users into emotional bonds, study finds

Most people use ChatGPT for practical purposes, according to new research from OpenAI and the MIT Media Lab. The study reveals that emotional connections mainly develop among a small group of heavy voice feature users.

After analyzing nearly 40 million ChatGPT conversations, researchers found little evidence of users seeking empathy, affection, or emotional support in their interactions. Instead, most people stick to factual exchanges with the AI system.

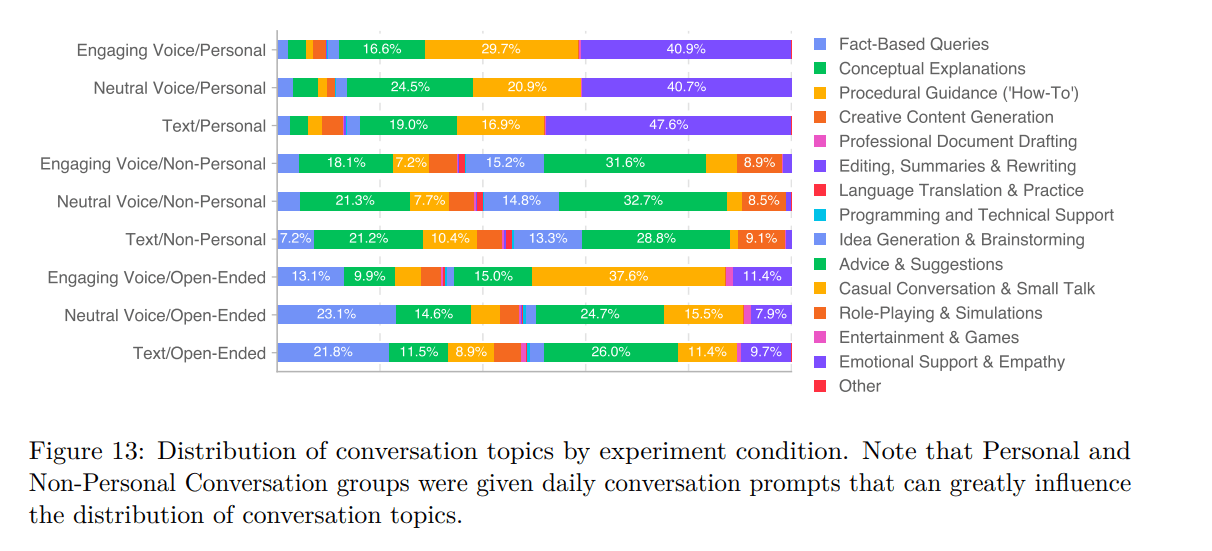

The team used two different approaches to study how people interact with ChatGPT. OpenAI conducted a large-scale, automated analysis of millions of conversations, keeping user privacy intact by avoiding human review. At the same time, the MIT Media Lab conducted a study with about 1,000 participants, testing how they used both text and voice features.

The MIT study split users into distinct groups. Some could only use text, while others tested voice interactions with different AI personalities - one designed to be emotionally engaged and another programmed to stay neutral. Each group received specific tasks: personal conversations about memories, practical questions about topics like finances, or free-form discussions.

Heavy advanced voice users are more likely to bond with ChatGPT

While text users generally showed more emotional signals in their conversations, the data revealed a trend among frequent users of ChatGPT's advanced voice mode. This small group developed significantly stronger emotional connections with the AI, more often referring to ChatGPT as a "friend."

The voice feature's effects varied significantly. Brief interactions seemed to make people feel better, but longer daily use often had the opposite effect. According to the study, personal conversations were associated with higher levels of loneliness but lower emotional dependency.

Non-personal conversations showed a different pattern: users developed stronger emotional dependency, especially with intensive use. This suggests that even when interactions are primarily functional rather than emotional, heavy users may still develop a form of dependence on the AI system.

According to the researchers, people who tend to form strong emotional attachments and those who view ChatGPT as a real friend were more likely to experience negative effects. Heavy users also showed an increased risk of such effects, although the researchers couldn't prove a direct cause and effect.

The study comes with some important limitations. According to the researchers, it doesn't capture all the complexities of how humans interact with AI, and it's limited to US ChatGPT users. Still, the researchers believe their methods could help guide future studies in this field.

This work adds to existing evidence that people can form emotional bonds with AI even when they know it's not human. That's partly why AI companies try to prevent their chatbots from acting like conscious beings - they want to avoid responsibility for romantic relationships between humans and machines. For instance, character.ai currently faces legal challenges over claims that its AI personalities have harmed children.
