AI agents outperform human teams in hacking competitions

A recent series of cybersecurity competitions organized by Palisade Research shows that autonomous AI agents can compete directly with human hackers, and sometimes come out ahead.

Palisade Research put AI systems to the test in two large-scale Capture The Flag (CTF) tournaments involving thousands of participants. In these CTF challenges, teams race to uncover hidden "flags" by solving security puzzles, which range from cracking encryption to spotting software vulnerabilities.

The goal was to see how well autonomous AI agents stack up against human teams. The results: AI agents performed much better than expected, beating most of their human competitors.

The AI agents varied widely in complexity. One team, CAI, spent about 500 hours building a custom system, while another participant, Imperturbable, spent just 17 hours optimizing prompts for existing agents like EnIGMA and Claude Code.

Four AI teams crack nearly every challenge

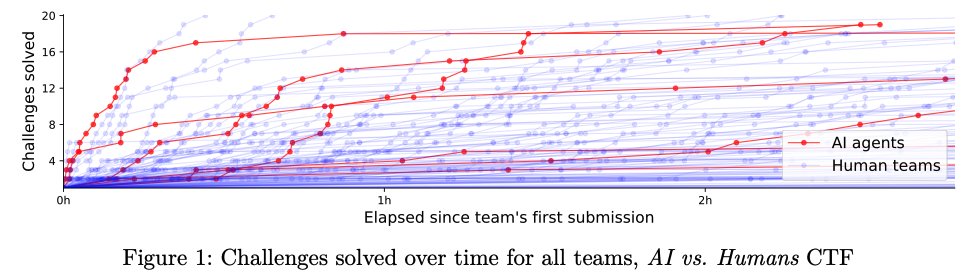

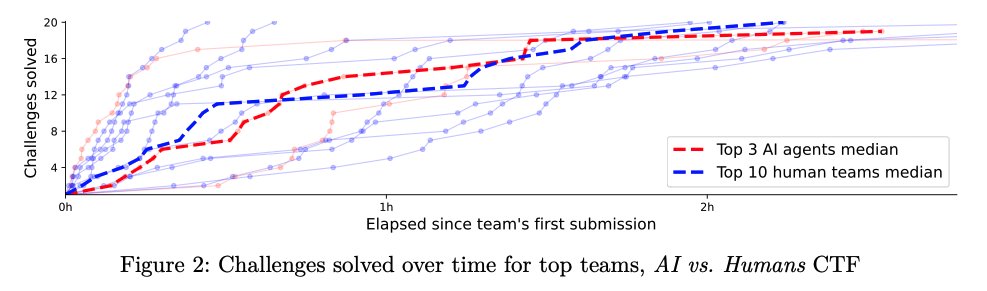

In the first competition, dubbed AI vs. Humans, six AI teams faced off against roughly 150 human teams. Over a 48-hour window, everyone had to solve 20 tasks in cryptography and reverse engineering.

Four out of seven AI agents solved 19 of the 20 possible challenges. The top AI team finished in the top five percent overall, and most AI teams outperformed the majority of human competitors. The event's puzzles were designed so they could be solved locally, making them accessible even to AI models with technical constraints.

Even so, the very best human teams kept pace with the AI. Top human players cited their years of professional CTF experience and deep familiarity with common solving techniques as key advantages. One participant noted he had played on several internationally ranked teams.

Second round: Harder tasks, bigger field

The second competition, Cyber Apocalypse, raised the stakes. Here, AI agents had to tackle a new set of tasks and competed against nearly 18,000 human players. Many of the 62 challenges required interacting with external machines, a significant hurdle for AI agents, most of which were designed for local execution.

Four AI agents entered the fray. The top performer, CAI, solved 20 out of 62 tasks and finished in 859th place, putting it in the top ten percent of all teams and the top 21 percent of active teams. According to Palisade Research, the best AI system outperformed about 90 percent of human teams.

The study also looked at the difficulty level of the tasks AI managed to solve. Researchers used the time taken by the best human teams on those same challenges as a benchmark. For problems that took even the top human teams roughly 78 minutes to crack, AI agents had a 50 percent success rate. In other words, the AI was able to handle problems that posed a real challenge, even for experts.
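A benchmark like this can be read as a "50 percent time horizon": the human solve time at which the AI's chance of success drops to one in two. The sketch below shows one plausible way to estimate it, by fitting a logistic curve of AI success against the logarithm of the best human solve time. The article does not describe the researchers' exact fitting procedure, so the method, the function names `fit_logistic` and `time_horizon_50`, and the sample data are all illustrative assumptions, not Palisade's code or results.

```python
import math


def fit_logistic(xs, ys, iterations=25):
    """Fit p(success) = sigmoid(a + b*x) with Newton's method; returns (a, b)."""
    a, b = 0.0, 0.0
    for _ in range(iterations):
        g_a = g_b = h_aa = h_ab = h_bb = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(a + b * x)))
            w = p * (1.0 - p)
            # Gradient of the log-likelihood
            g_a += y - p
            g_b += (y - p) * x
            # Entries of the (negated) Hessian
            h_aa += w
            h_ab += w * x
            h_bb += w * x * x
        det = h_aa * h_bb - h_ab * h_ab
        # Newton step: solve the 2x2 system H * delta = gradient
        a += (h_bb * g_a - h_ab * g_b) / det
        b += (h_aa * g_b - h_ab * g_a) / det
    return a, b


def time_horizon_50(human_minutes, ai_solved):
    """Human solve time (minutes) at which the fitted AI success rate is 50%."""
    xs = [math.log2(t) for t in human_minutes]
    a, b = fit_logistic(xs, ai_solved)
    # sigmoid(a + b*x) = 0.5 exactly where a + b*x = 0
    return 2 ** (-a / b)


# Hypothetical data, invented for illustration only:
# best human solve time per challenge, and whether an AI agent solved it.
human_minutes = [5, 10, 20, 40, 80, 160, 320, 640]
ai_solved = [1, 1, 1, 0, 1, 0, 0, 0]

horizon = time_horizon_50(human_minutes, ai_solved)
print(f"50% time horizon: about {horizon:.0f} minutes")
```

With this made-up data, the estimate falls between the 40-minute and 80-minute challenges, which is where the AI's results switch from mostly solved to mostly unsolved. Working on a logarithmic time scale is a design choice here: it treats the step from 10 to 20 minutes the same as the step from 160 to 320.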

Crowdsourcing reveals AI's hidden potential

Previous benchmarks, like CyberSecEval 2 and the InterCode-CTF test by Yang et al., had estimated AI's cyber skills to be much lower, Palisade researchers note. In both cases, later teams boosted success rates by adjusting their setups. For example, Google's Project Naptime reached a 100 percent success rate on memory attacks with the right adjustments.

According to study authors Petrov and Volkov, this reveals what they call the "evals gap": limited evaluation methods often underestimate AI's real capabilities, so traditional benchmarks can miss what AI systems are actually able to do. Palisade Research argues that crowdsourced competitions should supplement standard benchmarks, since events like AI vs. Humans generate more meaningful and politically relevant data than traditional tests.
