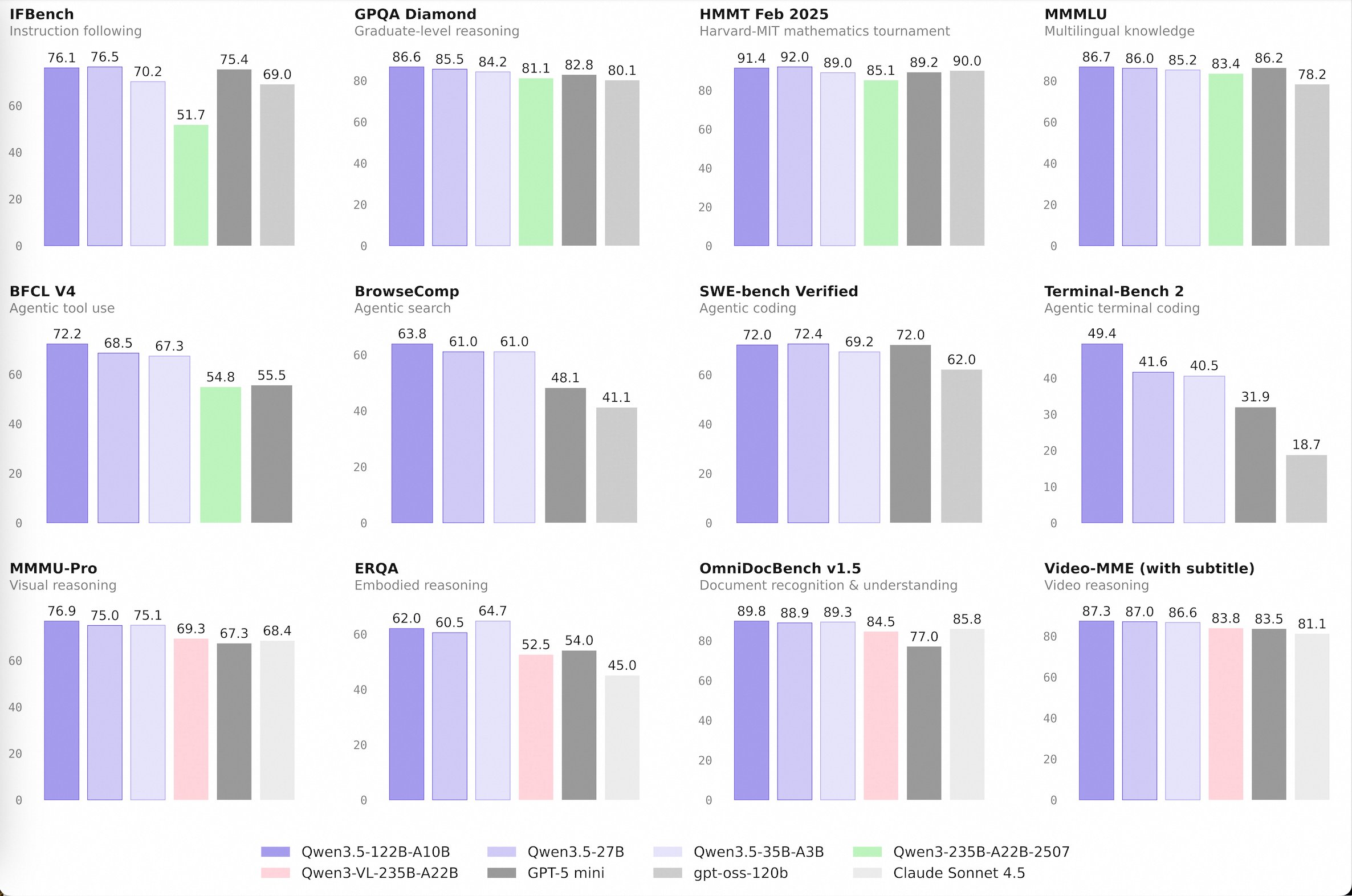

Alibaba has expanded its Qwen3.5 model series with four new models: Qwen3.5-Flash, Qwen3.5-35B-A3B, Qwen3.5-122B-A10B, and Qwen3.5-27B. According to Alibaba, the models deliver stronger performance while using less compute. All four take text, images, and video as input and generate text as output. The series started with the release of Qwen3.5-397B-A17B in mid-February.

The smaller Qwen3.5-35B-A3B model outperforms its much larger predecessor, Qwen3-235B-A22B, a clear sign that better architecture, data quality, and reinforcement learning matter more than raw model size. The larger 122B and 27B variants aim to close the remaining gap to top-tier models, particularly in complex agent scenarios.

All models are available on Hugging Face, ModelScope, and through Qwen Chat. They ship under the Apache License 2.0, a permissive open-source license that allows commercial use, modification, and redistribution. Qwen3.5-Flash is the hosted production version, with a context length of one million tokens and built-in tools. Its API costs $0.10 per million input tokens and $0.40 per million output tokens.
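To put the quoted API prices in perspective, here is a minimal Python sketch that estimates the cost of a single request. The rates come from the figures above. The function name `estimate_cost_usd` and the token counts in the example are illustrative assumptions, not part of any Alibaba SDK, and actual billing may differ (for example through tiered or cached-token pricing).

```python
# Rates quoted in the article, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = 0.10
OUTPUT_USD_PER_MILLION = 0.40


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from its token counts.

    Hypothetical helper for illustration; it applies the flat per-token
    rates above and ignores any discounts or pricing tiers.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Assumed example: a full one-million-token context plus a 2,000-token reply.
    cost = estimate_cost_usd(1_000_000, 2_000)
    print(f"Estimated cost: ${cost:.4f}")
```

At these rates, filling the entire one-million-token context costs about ten cents in input tokens, so output length only dominates the bill for requests with short prompts and long responses.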