ChatGPT gets GPT-4 32K support for PDF and file chat with longer documents

OpenAI appears to have made another significant change as it switches to its latest GPT-4 model, which integrates all GPT-4 models into one.

OpenAI is currently rolling out its "GPT-4 (All Tools)" model, which automatically selects the best tool for a given task, such as Browsing, Advanced Data Analysis, or DALL-E 3.

The model can also process much longer content in a single pass: up to 32,000 tokens, or about 25,000 words. This allows users to chat with longer PDFs without the need for a vector database. The model has an additional 2.7K tokens of context for the system prompt.
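As a rough illustration of that arithmetic, the following Python sketch estimates whether a document would fit into a 32,000-token window. It assumes the article's rule of thumb (32,000 tokens for about 25,000 words) and counts whitespace-separated words instead of using a real tokenizer. The function names and the `reserved_for_reply` parameter are hypothetical and not part of any OpenAI API.

```python
# Rule of thumb from the figures above: 32,000 tokens ~ 25,000 words.
# This is an approximation; real token counts vary by text and language.
WORDS_PER_TOKEN = 25_000 / 32_000

# Context window reported for the "GPT-4 (All Tools)" model.
CONTEXT_TOKENS = 32_000


def estimate_tokens(text: str) -> int:
    """Estimate the token count from a whitespace word count (not a real tokenizer)."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_reply: int = 1_000) -> bool:
    """Return True if the estimate leaves room for a reply of the given size.

    `reserved_for_reply` is a hypothetical budget for the model's answer.
    """
    return estimate_tokens(text) + reserved_for_reply <= CONTEXT_TOKENS


if __name__ == "__main__":
    document = "word " * 16_000  # stand-in for a 16,000-word document
    print(estimate_tokens(document), fits_in_context(document))
```

Under these assumptions, a 16,000-word document comes to roughly 20,000 tokens and fits comfortably, while a document right at 25,000 words would leave no room for a reply.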

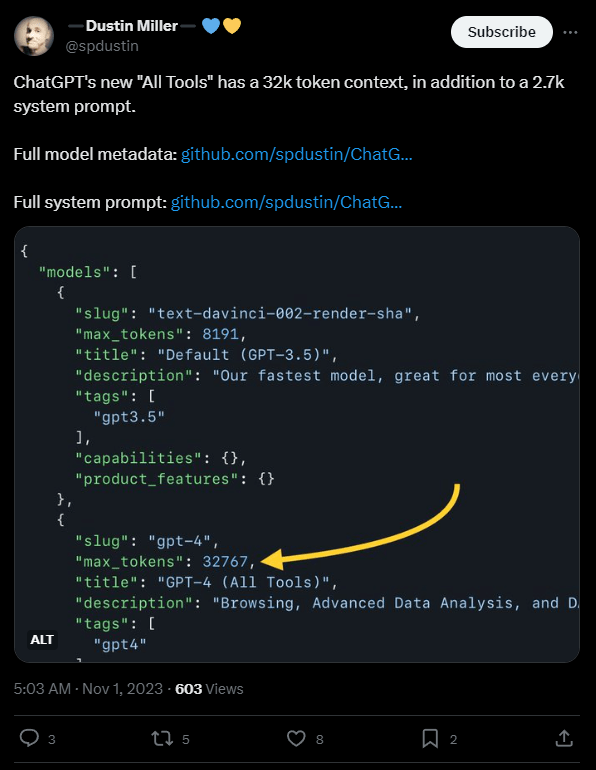

Developer Dustin Miller spotted the change and shared it on twitter.com, pointing to a finding in the code. Another user confirmed the observation with a 16,000-word document that ChatGPT was able to analyze with GPT-4 (All Tools).

OpenAI first introduced the 32K model when it unveiled GPT-4 in March, but limited access first to select users and then to the API, likely for cost reasons. The 32K model is even pricier than the 8K model, which is already 15 times more expensive than GPT-3.5 via the API.

If OpenAI now implements the 32K model throughout ChatGPT, it could mean that they have a better handle on the cost side. The interesting question is whether the company will pass this advantage on to the developer community. The OpenAI developer conference on November 6th would be a fitting event for an announcement.

LLM competition is heating up

Another reason for expanding the context window could be that OpenAI's main competitor, Anthropic, is accelerating the rollout of its chatbot, Claude 2, on the web and through APIs. Google and Amazon recently invested up to six billion dollars in Anthropic.

Claude 2's unique selling point is a context window of 100K tokens (about 75,000 words). That is still about three times the size of GPT-4's 32K, but 32K is a significant upgrade from the 8K context window (4,000 to 6,000 words) of the original GPT-4 model in ChatGPT.

Furthermore, Google's Gemini is just around the corner, which may also rely on a large context window in addition to multimodality.
