OpenAI CEO Altman, Nvidia and Trump weigh in on Deepseek's market impact

Update:

- Added statements from Sam Altman, President Trump, and Nvidia

Update January 28, 2025:

The buzz around Deepseek's R1 model hit Nvidia hard yesterday. At the height of the selloff, the chipmaker's market value had plunged by roughly $589 billion, which the Financial Times calls a record single-day loss on US exchanges.

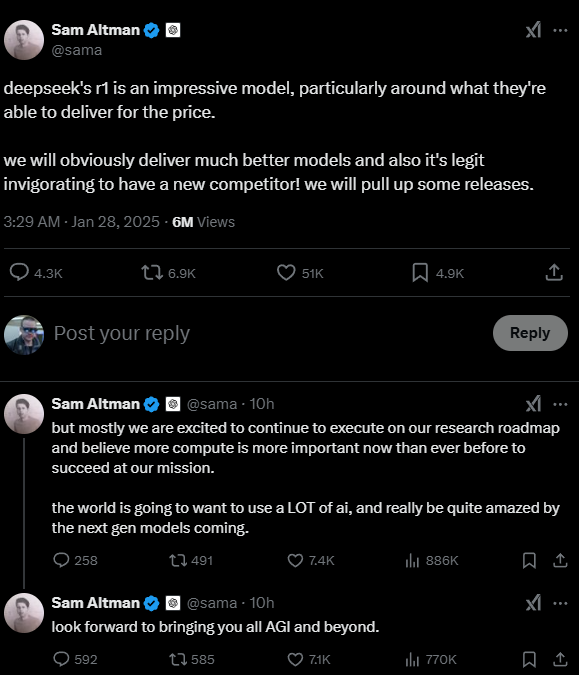

After Microsoft CEO Satya Nadella's seemingly unsuccessful attempt to calm nervous investors (see story below), OpenAI chief Sam Altman also weighed in. In a post on X, he called Deepseek-R1 an "impressive model," especially considering its price/performance ratio.

While noting that OpenAI would "obviously deliver much better models," he welcomed the new competition and said his company would move up some planned releases.

Altman stressed that OpenAI isn't changing its research focus, adding that "more compute is more important now than ever before." His argument echoes Nadella's earlier point about the Jevons paradox: even as AI technology gets more efficient, its growing popularity means overall demand for computing keeps climbing.

President Trump called Deepseek a "wake-up call for our industries." Asked whether the Chinese startup's claims hold up, he said they may be "true, and nobody knows, but I view that as a positive."

Nvidia also responded, calling Deepseek's work an "excellent AI advancement" and a "perfect example" of test-time scaling, which spends more compute while the model generates its answers. The company added that this kind of inference still requires "significant numbers of Nvidia GPUs and high-performance networking."

Original article from January 27:

Chinese AI startup Deepseek rattles US tech sector with superior efficiency and quality

Meta has established multiple emergency response teams after Chinese AI company Deepseek demonstrated AI models that are both more efficient and significantly cheaper to operate than Western alternatives.

According to The Information, Meta's AI division has entered crisis mode as company executives worry their upcoming Llama model won't match Chinese competitor Deepseek's capabilities.

Meta has set up four specialized "war rooms" to analyze and respond to Deepseek's technology. Two teams are studying how to replicate Deepseek's cost-effective training and operational methods, while a third investigates what training data the Chinese startup might be using. The fourth team is exploring how to restructure Meta's models to match Deepseek's efficiency.

Meta's internal scramble was first revealed through an employee leak on the anonymous platform "Blind," where a staffer described panic within Meta's AI department. Meta spokesperson Jon Carvill downplayed these concerns, telling The Information that regularly evaluating competing AI models is standard practice.

Deepseek challenges Western AI dominance

What sets Deepseek apart is its aggressive pricing: the company's cloud API costs 17 to 27 times less than comparable OpenAI services. On top of that, the strong performance of R1, Deepseek's first "reasoning" model along the lines of OpenAI's o1, has propelled the company's app to the top of the iPhone charts, ahead of even the hugely popular ChatGPT.

The company's success is already rattling US markets. Shares of Nvidia and other AI chip companies fell several percent in overnight trading as investors digested Deepseek's ability to run powerful AI models with fewer chips.

While not addressing Deepseek directly, Microsoft CEO Satya Nadella framed the situation in terms of the Jevons paradox. This economic principle holds that using a resource more efficiently often increases total demand for it, because the resource becomes cheaper to use.

Nadella applied this concept to AI, stating, "As AI gets more efficient and accessible, we will see its use skyrocket, turning it into a commodity we just can't get enough of."
